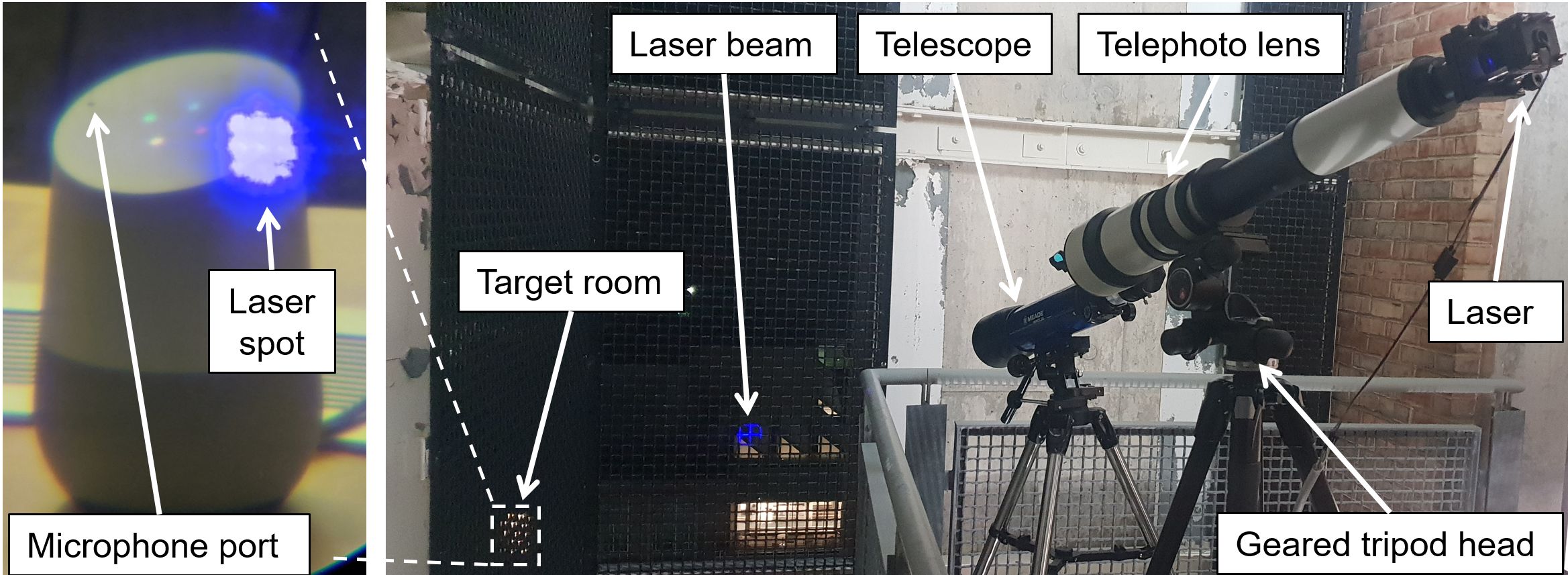

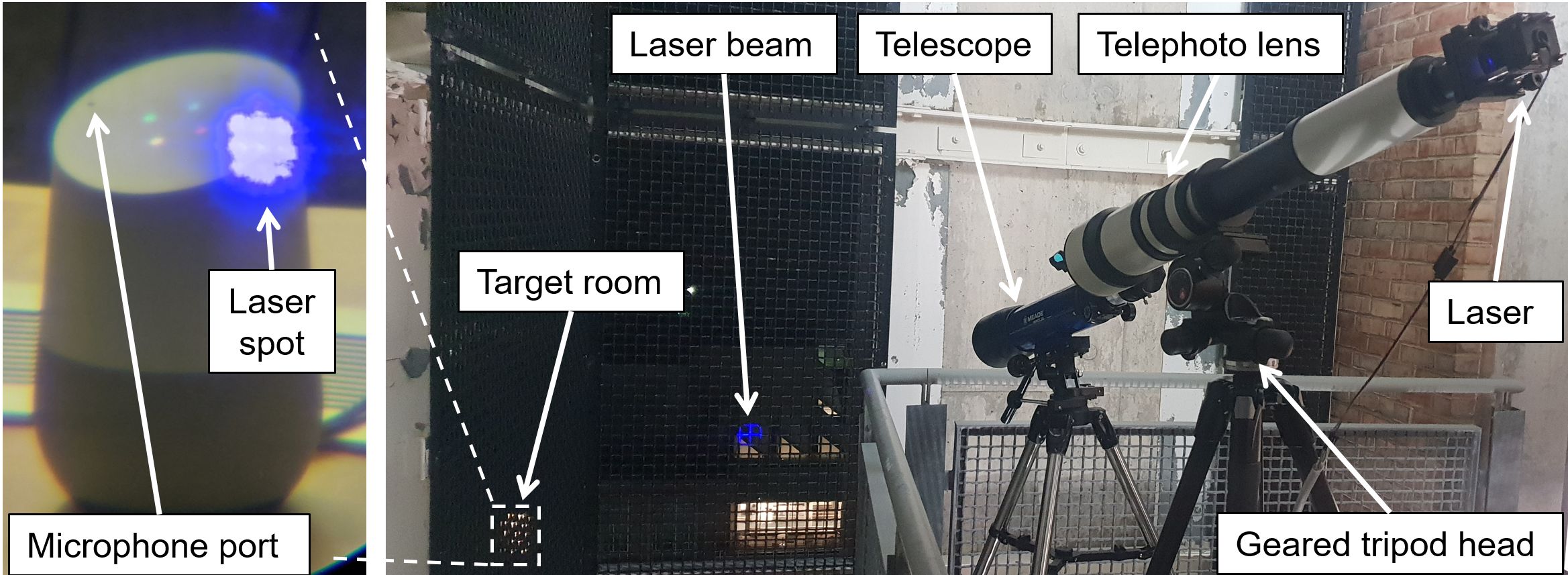

Researchers have found that, under a number of conditions, the sensitive microphones in voice-activated home devices respond to laser light the same way they respond to sound. By modulating the laser's power, an attacker can transmit voice commands to the microphone remotely and silently, even through a double-glazed window. What happens next depends on the capabilities of the smart system: the options range from “Alexa, order a pizza” to “Hey Google, open the door.” Attacks over long distances (100 meters or more) require some preparation, and precise focusing of the laser beam is especially critical. Long-range attacks succeeded against two of the 18 devices examined. Special equipment was needed, but it is inexpensive: everything can be bought online, and the total budget does not exceed $500.
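The core trick is amplitude modulation. The laser's drive current, and with it the optical power, is made to follow the audio waveform of the command. The Python sketch below maps normalized audio samples onto a drive current around a DC bias. The bias, swing, and maximum current values are hypothetical placeholders, not figures from the study. A real setup also needs a current driver and careful beam focusing.

```python
import math

def laser_drive_current(audio, bias_ma, swing_ma, max_ma):
    # Reject settings that would push the current below zero or above the
    # (hypothetical) rated maximum of the laser diode.
    if swing_ma > bias_ma or bias_ma + swing_ma > max_ma:
        raise ValueError("modulation would leave the diode's operating range")
    current = []
    for s in audio:
        s = max(-1.0, min(1.0, s))               # clip audio to [-1, 1]
        current.append(bias_ma + swing_ma * s)   # DC bias plus scaled audio
    return current

# A 1 kHz test tone sampled at 8 kHz stands in for a recorded voice command.
rate = 8000
tone = [math.sin(2 * math.pi * 1000 * n / rate) for n in range(rate // 100)]
drive = laser_drive_current(tone, bias_ma=200.0, swing_ma=150.0, max_ma=400.0)
print(min(drive), max(drive))
```

The bias keeps the diode emitting at all times, so the optical power swings symmetrically around a mean level instead of switching on and off. A microphone that responds to light then "hears" that swing as sound.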

The study links to earlier research on the topic. It all started with simple attempts to play a voice command through a smartphone without its owner's knowledge. This is quite easy to do by embedding extra functionality in an app that needs no special privileges in the operating system. The next step was to hide the voice signal in noise, so that a person would hear an incomprehensible glitch while a smart speaker or phone recognized the command. Ultrasound attacks, which a person cannot hear at all, went a level higher. All of these methods share a drawback: their range is extremely short. The laser attack solves this problem to a certain extent, because a command can be sent through a window, from a neighboring building, or from a car. Granted, the beam must first be precisely aimed at a vulnerable spot about the size of a small coin, and not every device can be attacked from a long distance. Phones, for example, respond to the laser worse than smart speakers do. However, the attacker can move closer, to within about 20 meters of the target, or increase the laser power.
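Ultrasound attacks exploit a property of microphone hardware: a slightly nonlinear response. The voice command is amplitude-modulated onto an inaudible carrier, and a square-law term in the microphone shifts part of the signal back into the audible band. The toy Python model below is built on several assumptions, none of them taken from the study:

* a 24 kHz carrier;
* a 400 Hz tone in place of speech;
* a microphone modeled as x + a2·x²;
* a moving average over whole carrier periods standing in for the device's low-pass audio chain.

```python
import math

FS = 192_000        # sample rate, Hz (assumed)
F_CARRIER = 24_000  # ultrasonic carrier, Hz: inaudible to humans
F_VOICE = 400       # test tone standing in for speech, Hz
N = FS // 50        # 20 ms of signal

def transmitted(n):
    m = 0.5 * math.sin(2 * math.pi * F_VOICE * n / FS)           # baseband "voice"
    return (1 + m) * math.cos(2 * math.pi * F_CARRIER * n / FS)  # AM on carrier

def microphone(x, a2):
    # Toy microphone: linear response plus a small square-law term a2 * x^2.
    return x + a2 * x * x

def low_pass(sig, width):
    # Moving average over whole carrier periods suppresses the carrier terms.
    return [sum(sig[i:i + width]) / width for i in range(len(sig) - width)]

def audible_level(a2):
    period = FS // F_CARRIER  # 8 samples per carrier cycle
    y = [microphone(transmitted(n), a2) for n in range(N)]
    base = low_pass(y, period)
    mean = sum(base) / len(base)
    return max(abs(v - mean) for v in base)  # peak swing left after filtering

print("linear mic:   ", audible_level(0.0))
print("nonlinear mic:", audible_level(0.1))
```

With a2 = 0, the filtered output stays near zero apart from a small residual ripple. With a2 = 0.1, a clear component at the tone's frequency appears (plus a weaker harmonic), and that is what a speech recognizer would pick up.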

The claim at the beginning of the post that voice assistants give their owners no means of authorization is not entirely accurate. Home devices can recognize the owner, even just by the way they pronounce the wake word, but this feature is usually turned off. On smartphones it is on by default. The researchers got around this limitation with a text-to-speech generator, effectively running a brute-force attack: computer-generated voices were tried one after another until one was accepted. The study also describes ways to bypass PIN-code authorization. If that works, the protection can be defeated in a car's voice assistant, for example (the latest systems in Ford and Tesla cars are mentioned), when it requires a PIN to start the engine or unlock the doors. This already looks like a plausible real-world attack, because you can shine a laser at a car parked on the street for a long time without attracting attention.
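The voice-matching brute force can be illustrated with a toy model. Each synthetic voice is reduced to a short feature vector, and the device's speaker check is assumed to be a cosine-similarity threshold against the owner's enrolled vector. The function name, the vectors, and the 0.9 threshold are all hypothetical. Real assistants use far richer voice models, and this does not reproduce the study's actual pipeline.

```python
import math

def cosine(a, b):
    # Cosine similarity between two equal-length feature vectors.
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def find_accepted_voice(candidates, enrolled, threshold=0.9):
    # Try synthetic voices in order and stop at the first one the
    # (hypothetical) verifier accepts. Returns (voice_name or None, attempts).
    attempts = 0
    for name, features in candidates:
        attempts += 1
        if cosine(features, enrolled) >= threshold:
            return name, attempts
    return None, attempts

# Hypothetical data: the owner's enrolled voice and three TTS voices.
enrolled = [0.9, 0.1, 0.4]
voices = [
    ("voice_a", [0.1, 0.9, 0.2]),
    ("voice_b", [0.2, 0.2, 0.9]),
    ("voice_c", [0.85, 0.15, 0.45]),
]
print(find_accepted_voice(voices, enrolled))  # ('voice_c', 3)
```

The attacker needs no recording of the owner. It is enough to keep generating voices until one lands close enough to the enrolled profile.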

Beyond the laser magic, the study shows that voice assistants are roughly as secure as the Internet's early protocols. It was once considered normal to receive mail over POP3 without encryption, to send passwords and credit card numbers over insecure connections, and to store user passwords in databases in plain text. That changed only after several serious hacks and leaks, and even now the transition to HTTPS-only connections is not complete. Voice control will probably follow the same path. At first there is no security, because no one is trying to break these systems, or because the only attempts use academic methods that are unlikely to be used in practice. Then come practical attacks: car theft, voice theft, and the like. Only after that does anyone start developing protection technologies. The laser research is an attempt to start developing defenses BEFORE practical attacks on voice control systems become possible.

What else happened:

A vulnerability was found in Amazon Ring smart doorbells that affects initial setup. In this mode, the owner's smartphone connects to the device through an unsecured access point and passes it the settings of the regular home Wi-Fi network. The Wi-Fi password is sent in clear text and can be intercepted by anyone within a short distance.
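Consider what a passive listener in radio range receives to see why clear text matters here. The Python sketch assumes the credentials travel as a form-encoded HTTP request. The endpoint, IP address, and field names (`ssid`, `psk`) are hypothetical and are not taken from the Ring app. Recovering the password takes nothing more than splitting off the request body and parsing it.

```python
from urllib.parse import urlencode, parse_qs

def setup_request(ssid, password):
    # What a setup app might send to the doorbell over the open access point.
    # Endpoint, host address, and field names are hypothetical.
    body = urlencode({"ssid": ssid, "psk": password})
    return (
        "POST /setup HTTP/1.1\r\n"
        "Host: 192.168.10.1\r\n"
        "Content-Type: application/x-www-form-urlencoded\r\n"
        f"Content-Length: {len(body)}\r\n"
        "\r\n" + body
    ).encode("ascii")

def sniff_credentials(raw: bytes):
    # A passive eavesdropper only has to split off the body and decode it.
    _, _, body = raw.decode("ascii").partition("\r\n\r\n")
    fields = parse_qs(body)
    return fields["ssid"][0], fields["psk"][0]

packet = setup_request("HomeNet", "correct horse battery")
print(sniff_credentials(packet))  # ('HomeNet', 'correct horse battery')
```

An encrypted channel between the app and the device would leave the eavesdropper with unreadable bytes instead.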

A number of vulnerabilities in Das U-Boot, the universal bootloader, allow an attacker to gain full control over a device. Many devices use this bootloader, including Amazon Kindle e-readers and Chromebooks.

A childishly simple vulnerability in Office 2016 and 2019 for macOS: the “Disable all macros without notification” option does not actually disable all macros, and XLM code still runs without the user's knowledge.

Another set of serious vulnerabilities in Nvidia drivers has been patched. They could lead to denial of service, data leakage, and arbitrary code execution.

Vice journalists complain about receiving requests to advertise fake websites that promise iPhone owners a jailbreak. For victims, the consequences range from installing adware to having the device taken over through mobile device management.

The Kaspersky Lab blog shares the results of an experiment inspired by the latest Terminator film: can you protect a mobile phone from surveillance by carrying it in a bag of chips? Spoiler: you can, but you will need two bags.