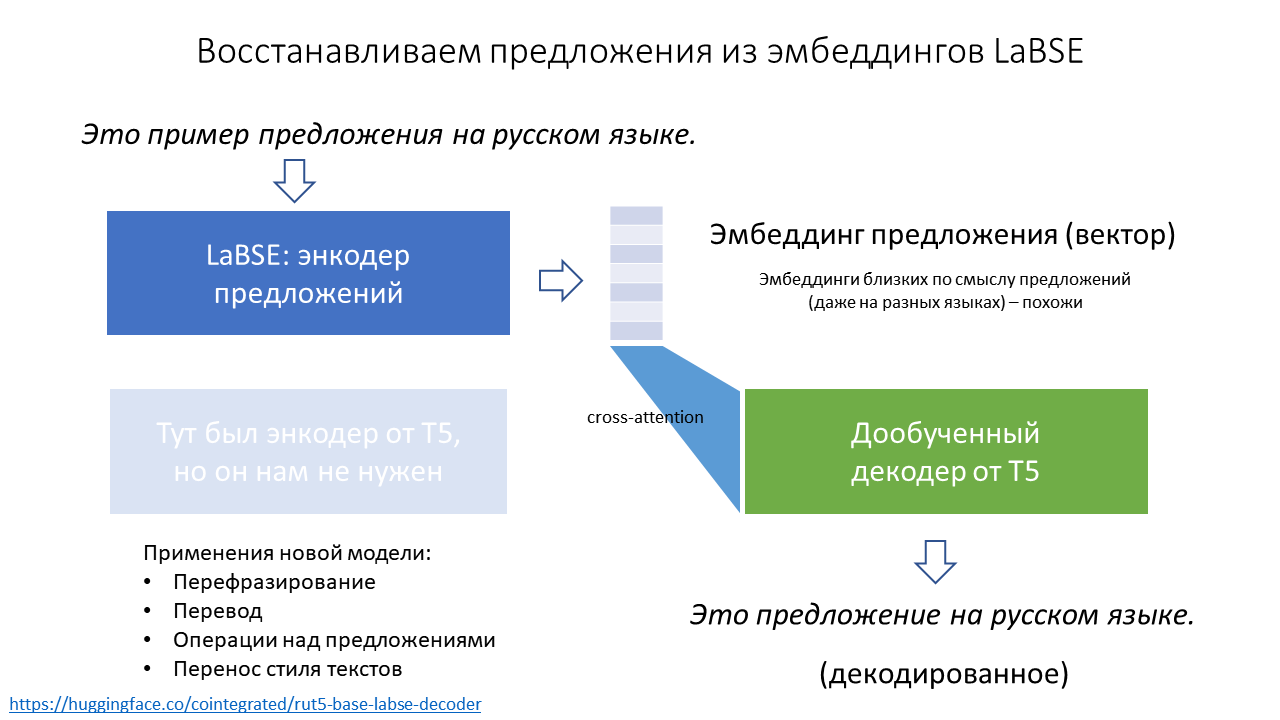

Recovering sentences from LaBSE embeddings

Last week I was asked twice how to recover the text of a sentence from its LaBSE embedding. Both times I answered that it was impossible. But of course, you actually can train a decoder to generate text from an embedding. Why would you? For example, to:

- translate from 100 different languages into Russian;
- summarize many similar sentences into one;
- realistically replace phrases in sentences;
- change the meaning or style of sentences.

The model for recovering sentences from embeddings is published as cointegrated/rut5-base-labse-decoder, and the details are below.

LaBSE and other sentence encoders

A sentence encoder is a model (usually a neural network) that takes the text of a sentence as input and outputs a multidimensional vector (for example, 768-dimensional) that roughly describes the meaning of this sentence, so that sentences with similar meanings get geometrically similar vectors. Sentence encoders can be used for text classification and a host of other useful tasks; read more in my posts about small BERT and the sentence encoder rating.

LaBSE (Language-agnostic BERT Sentence Embedding) is a model proposed in a 2020 paper by researchers at Google. Architecturally it is a BERT, trained in a multitask setup on texts in 100+ languages. Its main task is to bring together the embeddings of sentences that have the same meaning in different languages, and the model copes with this very well. Thanks to this ability you can, for example, train a classifier on English texts and then apply it to Russian ones, or find pairs of sentences in a large multilingual corpus that are translations of each other.
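To make the last use case concrete: when embeddings are normalized to unit length, cosine similarity is just a dot product, so candidate translation pairs can be found by matching each sentence with its most similar sentence in the other language. Below is a minimal sketch, assuming two already-normalized embedding matrices (for example, produced by the `encode()` function shown later). The function `mine_pairs` and its `threshold` default are hypothetical, and the mutual-nearest-neighbour rule is a simplification of the margin-based scoring often used for large-scale mining.

```python
import torch


def mine_pairs(src_emb: torch.Tensor, tgt_emb: torch.Tensor, threshold: float = 0.5):
    """Return (i, j, score) for sentences that are each other's nearest neighbour.

    src_emb: [n, dim], tgt_emb: [m, dim]; rows are assumed to be L2-normalized,
    so the matrix product below is a matrix of cosine similarities.
    """
    sim = src_emb @ tgt_emb.T     # [n, m] cosine similarities
    best_tgt = sim.argmax(dim=1)  # most similar target for each source sentence
    best_src = sim.argmax(dim=0)  # most similar source for each target sentence
    pairs = []
    for i, j in enumerate(best_tgt.tolist()):
        # keep only mutual nearest neighbours that are similar enough
        if best_src[j].item() == i and sim[i, j].item() >= threshold:
            pairs.append((i, j, sim[i, j].item()))
    return pairs
```

For instance, `mine_pairs(encode(ru_sentences), encode(en_sentences))` (with hypothetical sentence lists) would return index pairs with their similarity scores; the threshold would need tuning on real data.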

What LaBSE cannot do at all, though, is generate text. Once we have turned a text into a vector, we can no longer get the text back from it with LaBSE alone; that requires a separate model. And there is no guarantee that such a model will cope: as Professor Raymond J. Mooney used to say, "You can't cram the meaning of a whole $&!#* sentence into a single $!#&* vector!" But we'll try anyway.

Decoder training

In NLP, a decoder is simply a model that generates text from vectors, i.e. it solves the inverse of the encoder's problem. There are several decoders for Russian; I chose the T5 model I once trained, because it required minimal changes to the code. As an alternative, one could try to train a Russian GPT for this; if you try it, please tell me!

Encoding texts into vectors is completely standard: we take the CLS token embedding from LaBSE (after BERT's pooler layer, i.e. `pooler_output`) and normalize it.

```python
import torch
from transformers import AutoTokenizer, AutoModel

bert_name = "sentence-transformers/LaBSE"
enc_tokenizer = AutoTokenizer.from_pretrained(bert_name)
encoder = AutoModel.from_pretrained(bert_name)


def encode(texts, do_norm=True):
    # Tokenize a string or a list of strings into a padded batch
    encoded_input = enc_tokenizer(texts, padding=True, truncation=True, max_length=512, return_tensors="pt")
    with torch.no_grad():
        model_output = encoder(**encoded_input.to(encoder.device))
    # pooler_output: the [CLS] hidden state passed through BERT's pooler layer
    embeddings = model_output.pooler_output
    if do_norm:
        # L2-normalize each sentence vector to unit length
        embeddings = torch.nn.functional.normalize(embeddings)
    return embeddings
```

Decoding looks just as simple. It is standard text generation with T5 (or any other seq2seq transformer), except that we feed in LaBSE embeddings that "pretend" to be outputs of the T5 encoder (fortunately, both have dimension 768, so I didn't even have to modify the cross-attention layers in T5).

```python
from transformers import AutoTokenizer, AutoModelForSeq2SeqLM
from transformers.modeling_outputs import BaseModelOutput

t5_name = "cointegrated/rut5-base-labse-decoder"
dec_tokenizer = AutoTokenizer.from_pretrained(t5_name)
decoder = AutoModelForSeq2SeqLM.from_pretrained(t5_name)


def decode(embeddings, max_length=256, repetition_penalty=3.0, num_beams=3, **kwargs):
    out = decoder.generate(
        # Each LaBSE vector poses as a one-token encoder output: [batch, 768] -> [batch, 1, 768]
        encoder_outputs=BaseModelOutput(last_hidden_state=embeddings.unsqueeze(1)),
        max_length=max_length,
        num_beams=num_beams,
        repetition_penalty=repetition_penalty,
        **kwargs,  # any extra generation options, e.g. do_sample=True
    )
    return [dec_tokenizer.decode(tokens, skip_special_tokens=True) for tokens in out]
```

Naturally, without fine-tuning T5, the sentences generated this way would be meaningless: T5 was trained to attend to embeddings from a different space, and not to a single vector but to a whole sequence of them (one per token).

For fine-tuning, I took 2 million short texts from opus100, the Leipzig collection, and Odnoklassniki comments. As augmentation, I added another 400K individual words. On all of this, I trained T5 in the standard way (teacher-forced cross-entropy) to generate the source text from its embedding. I trained with batch size 8 for about a million steps, which took 2.5 days on Google Colab. The notebook is here.
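The actual training code lives in the notebook. As a rough illustration only, one teacher-forced step with Hugging Face `transformers` could look like the sketch below, assuming `embeddings` is a `[batch, 768]` tensor of normalized LaBSE vectors and `labels` holds the token ids of the same texts (from the T5 tokenizer) with padding replaced by -100; `training_step` is a hypothetical helper name. As in `decode()`, each vector is passed to T5 as an encoder output sequence of length one.

```python
from transformers.modeling_outputs import BaseModelOutput


def training_step(model, optimizer, embeddings, labels):
    """One teacher-forced step: learn to reproduce `labels` from sentence vectors.

    model:      a T5ForConditionalGeneration whose d_model equals the embedding size
    embeddings: float tensor [batch, d_model], e.g. normalized LaBSE vectors
    labels:     long tensor [batch, seq_len] of target token ids, padding set to -100
    """
    model.train()
    outputs = model(
        # The sentence vector stands in for a one-token encoder output sequence
        encoder_outputs=BaseModelOutput(last_hidden_state=embeddings.unsqueeze(1)),
        labels=labels,  # T5 shifts these right internally to build decoder inputs
    )
    # outputs.loss: mean cross-entropy over target tokens not equal to -100
    outputs.loss.backward()
    optimizer.step()
    optimizer.zero_grad()
    return outputs.loss.item()
```

When `labels` are given, T5 builds the decoder inputs by shifting the labels one position to the right, which is exactly teacher forcing: at each position the decoder sees the true previous tokens rather than its own predictions.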

After fine-tuning, T5 copes with the new task quite tolerably. For example, you can encode the following texts:

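```python
embeddings = encode([
    "4 декабря 2000 года",  # "December 4, 2000"
    "Давно такого не читала, очень хорошо пишешь!",  # "Haven't read anything like this in ages, you write really well!"
    "Я тогда не понимала, что происходит, не понимаю и сейчас.",  # "Back then I didn't understand what was going on, and I still don't."
    "London is the capital of Great Britain.",
])
print(embeddings.shape)
# torch.Size([4, 768])
```

After decoding, the texts change, but the model approximately reproduces their meaning: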

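```python
for text in decode(embeddings):
    print(text)
# После 4 декабря 2000 года  (After December 4, 2000)
# Не так давно, это многое читала!  (Not so long ago, read a lot of this!)
# Я не понимала того, что происходит сейчас, тогда же.  (I didn't understand what is happening now, back then.)
# Британская столица Англии.  (The British capital of England.)
```

Application examples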

Okay, the network is trained; now what? Nothing in particular: I did this experiment mainly for fun. But if you want to have more fun, I collected several examples of using such a decoder in this notebook. The most obvious application is paraphrasing, but more creative uses are possible too.

Translation

LaBSE can "translate" texts from different languages into a common vector space, and our decoder can translate from this space into Russian. This means that together, this pair of models can translate into Russian from any of the 109 languages known to LaBSE!

Below is an example for a dozen languages. The model does not quite distinguish "gentlemen" from "Lord" (the similar-looking Russian words «господа» and «господь»), but otherwise it does the job well.

| Source text | Translation (Russian output, rendered in English) |
|---|---|
| Lord, I haven't eaten in 6 days! | God, I haven't eaten in 6 days! |
| Панове, я не їв 6 днів! | God, I haven't eaten in 6 days! |
| Gentlemen, I haven't eaten for 6 days! | Lord, I haven't fed in 6 days! |
| Messieurs, je n'ai pas mangé depuis 6 jours! | God, I haven't eaten for 6 days! |
| Meine Herren, ich habe seit sechs Tagen nichts gegessen! | Lord, I haven't eaten anything for six days! |
| Khudovando, man 6 ruz boz chise nakhurdaam! | God, I haven't had 6 more days! |
| Tanrım, 6 gündür yemek yemedim! | God, I haven't eaten in 6 days! |
| אלוהים, לא אכלתי 6 ימים | God, I didn't have 6 days! |
| 主啊,我已经6天没吃东西了 | God, I haven't eaten in 6 days. |
| हे प्रभु, मैंने 6 दिनों से कुछ नहीं खाया है! | God, I didn't eat anything from that day! |

Summarization

Sometimes you need to grasp the main common idea of a multitude of sentences: for example, instead of reading 50 product reviews, read a single "average review". Our model was not designed for summarization at all, but what if it works?

For example, I took product review data from the M.Video hackathon. For each product, I collect all its reviews into one text, split it into sentences, compute the embedding of each sentence, average them all into one vector, normalize it, and decode this vector with my model. Here are examples of what came out:

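```
# Braun J500 juicer
# examples of individual sentences:
Дизайн строгий, но это понятно - фирма-то немецая.
#   The design is austere, but that's understandable: the company is German.
Из 5 кг яблок выходит меньше литра сока, а пены больше чем сам сок!!!
#   5 kg of apples give less than a liter of juice, and there's more foam than juice!!!
Жмых сухой, сеточка мелкая и не пропускает куски.
#   The pulp is dry, the mesh is fine and doesn't let chunks through.
...
# decoded averaged sentence:
Устройство очень хорошее, потому что выбрасывать яблоки неплохо.
#   The device is very good, because throwing away apples is not bad.

# Lenovo Tab 3 Plus tablet
# examples of individual sentences:
Минусов никаких не заметно пока что.
#   No drawbacks noticed so far.
Экран, Lte, gps, ГЛОНАСС, 2 сим, быстрый, шустрый, размер, тонкий, сборка.
#   Screen, LTE, GPS, GLONASS, 2 SIMs, fast, snappy, size, thin, build quality.
Замечательный планшет!
#   A wonderful tablet!
...
# decoded averaged sentence:
Всё очень красиво, у нас на экране есть сенсорная картинка.
#   Everything is very beautiful, we have a touch picture on the screen.

# Prestigio MultiPad tablet
# examples of individual sentences:
Не очень понравилось что динамик только 1, стерео нет(
#   Didn't much like that there's only 1 speaker, no stereo :(
На расстоянии пары сантиметров видны пиксели, батарейка заряжается конечно долго.
#   From a couple of centimeters away you can see the pixels; the battery does take long to charge, of course.
Зарядное устройство стандартвое без изъян.
#   The charger is standard, no flaws.
...
# decoded averaged sentence:
Встроенный экран очень хороший, даже несмотря на то, что у меня есть сенсорная камера.
#   The built-in screen is very good, even though I have a touch camera.
```

As you can see, the averaged sentences are not very informative, but overall they reflect the mood of the reviews and some aspects of the products reasonably well.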
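The procedure used for these examples (embed each sentence, average the vectors, normalize, decode) can be sketched as follows. This is a minimal version, assuming `encode()` and `decode()` behave like the functions defined earlier (a list of strings in, an `[n, 768]` tensor out; a batch of vectors in, a list of strings out) and that the reviews have already been split into sentences; `average_sentence` is a hypothetical name.

```python
import torch


def average_sentence(sentences, encode, decode):
    """Decode the normalized mean of the sentences' embeddings into one sentence."""
    embeddings = encode(sentences)                     # [n, dim], one row per sentence
    mean = embeddings.mean(dim=0, keepdim=True)        # [1, dim]: keep a batch of one
    mean = torch.nn.functional.normalize(mean, dim=1)  # back to unit length, like encode()
    return decode(mean)[0]
```

Normalizing the mean matters because the decoder was presumably trained on unit-length vectors like those returned by `encode()`, and the mean of several different unit vectors is always shorter than one.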

Addition and subtraction of sentences

We remember and love word2vec for supporting cool algebraic operations on word vectors, in the spirit of "king + woman - man = queen". It turns out that LaBSE can do this too!

```python
embeddings = encode(['король', 'женщина', 'мужчина'])  # king, woman, man
# embeddings[[i]] keeps the batch dimension: shape [1, 768]
print(decode(embeddings[[0]] + embeddings[[1]] - embeddings[[2]]))
# ['королева']  (queen)

embeddings = encode(['Лондон', 'Франция', 'Англия'])  # London, France, England
print(decode(embeddings[[0]] + embeddings[[1]] - embeddings[[2]]))
# ['Париж']  (Paris)
```

Moreover, LaBSE can add and subtract not only words but also short phrases. With longer texts it does worse, but sometimes the decoder makes up the details in amusing ways:

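```python
embeddings = encode([
    'Это произошло во время правления Петра Первого.',  # "This happened during the reign of Peter I."
    'Иван Грозный',  # "Ivan the Terrible"
    'Пётр Первый',  # "Peter I"
])
print(decode(embeddings[[0]] + embeddings[[1]] - embeddings[[2]]))
# ['Это произошло в режиме правления Ивана Грозного.']
# (This happened under the regime of the rule of Ivan the Terrible.)

embeddings = encode([
    'Кошка обучает своих котят охотиться за мышами.',  # "A cat teaches her kittens to hunt mice."
    'белый медведь',  # "polar bear"
    'кот',  # "cat"
])
print(decode(embeddings[[0]] + embeddings[[1]] - embeddings[[2]]))
# ['Белый Медведь обучает медведям следить за охотой на оленей.']
# (roughly: The Polar Bear teaches bears to keep watch over the deer hunt.)

embeddings = encode([
    'Я не хочу делать прививку, потому что не доверяю врачам.',  # "I don't want to get vaccinated because I don't trust doctors."
    'Я верю в народную медицину.',  # "I believe in folk medicine."
    'Я не доверяю никаким врачам.',  # "I don't trust any doctors."
])
print(decode(embeddings[[0]] + embeddings[[1]] - embeddings[[2]]))
# ['Я верю в вакцинацию, потому что я хочу лечиться.']
# (I believe in vaccination because I want to get treated.)
```

Text style transfer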

As we saw in the reviews example, LaBSE vectors store information about the style and mood of texts. So if we take several pairs of texts with similar meaning but different styles, the average difference between their vectors may capture the difference between the styles. Could it be used to change the style of other texts, as in the TextSETTR and DIFFUR papers?

For example, let's take examples of reserved and emotive texts from here. The examples are in English, but LaBSE doesn't care.

```python
texts_reserved = [
    "That is a very pretty painting.",
    "I’m excited to see the show.",
    "I’m surprised they rescheduled the meeting.",
    "This specimen is an example of the baroque style.",
    "After the performance, we ate a meal.",
]
texts_emotive = [
    "OMG, that’s such a beautiful painting!",
    "I’m sooo excited to see the show, it’s going to be stellar!!",
    "I absolutely can not believe that they rescheduled the meeting!",
    "This wonderful specimen is a truly spectacular example of the baroque style.",
    "After the superb performance, we ate a delicious meal.",
]
# Average "emotive minus reserved" direction in LaBSE space, shape [768]
delta = encode(texts_emotive).mean(0) - encode(texts_reserved).mean(0)

# "This film made a good impression on me."
print(decode(encode('Этот фильм произвёл на меня хорошее впечатление.') + delta * 1))
# ['Этот фильм мне очень понравился хорошим впечатлением!']
# (roughly: I really loved this film with a good impression!)

# "The US external debt has reached a record high."
print(decode(encode('Внешний долг США достиг рекордной величины.') + delta * 1.5))
# ['Увеличенная США долговая задолженность в целом достигла рекордных размеров!']
# (The increased US debt indebtedness has on the whole reached record proportions!)
```

We see that the paraphrased texts have indeed become more emotional and expressive.

Another example is the transformation of formal texts into informal ones:

```python
texts_formal = [
    "This was a remarkably thought-provoking read.",
    "It is certainly amongst my favorites.",
    "We humbly request your presence at our gala on the 12th.",
]
texts_informal = [
    "reading this rly makes u think",
    "Its def one of my favs",
    "come swing by our bbq next week if ya can make it",
]
# Average "formal minus informal" direction; subtracting it makes texts less formal
delta = encode(texts_formal).mean(0) - encode(texts_informal).mean(0)

# "You are kindly requested to leave the premises of the institution."
print(decode(encode('Убедительно просим вас покинуть помещение учреждения.') - delta * 0.5))
# ['пожалуйста, уходите из помещения']  (please, get out of the premises)

# "I was glad to meet you!" (formal "you")
print(decode(encode('Был рад нашей с Вами встрече!') - delta * 0.5))
# ['Хорошо встретился с тобой!']  (Nice meeting you! with the informal "you")
```

As you can see, this also roughly works.

I also tried this approach for detoxifying texts, but it turned out that LaBSE does not understand the meaning of rude Russian texts very well; apparently, there were few of them in its training data.

Conclusion

Sentence encoders have received a lot of attention lately, and so have text generators (like GPT). But interest in decoders that would invert an encoder has faded (although autoencoders were once fashionable). Perhaps unjustly: as you can see, even in 2022 one can find interesting applications for an inverted encoder.

My decoder (cointegrated/rut5-base-labse-decoder) is published on Hugging Face; you can use it together with the lightweight Russian-English encoder cointegrated/LaBSE-en-ru or with the full 100+-language model sentence-transformers/LaBSE. Either way, like the models you find useful and share interesting use cases in the comments. Subscribe to my channel, wear sunscreen, and fight for peace!