Pizza as a service: how Amazon migrated to Redshift

Hi, my name is Victoria, and I am responsible for marketing at CROC Cloud Services. We now host cloud meetups regularly. I recently attended a great talk by Dmitry Anoshin, who now works at Amazon, and I want to share it.

I have a strong feeling that large commercial companies have decided to collect all the data in the world that they can reach. On the one hand, this translates into advanced analytics, increased sales and more attractive products. On the other hand, the data has become so voluminous and comprehensive that jokes about trucks full of CD-ROMs have long been commonplace.

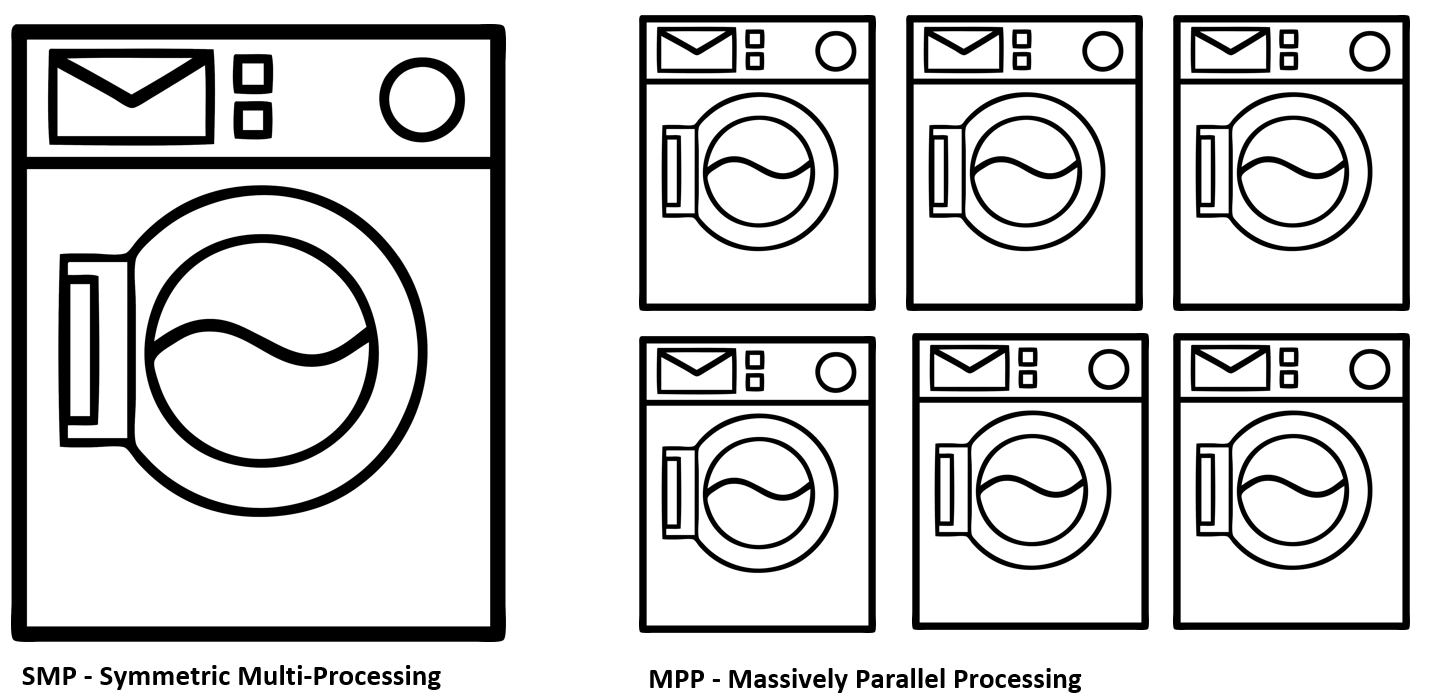

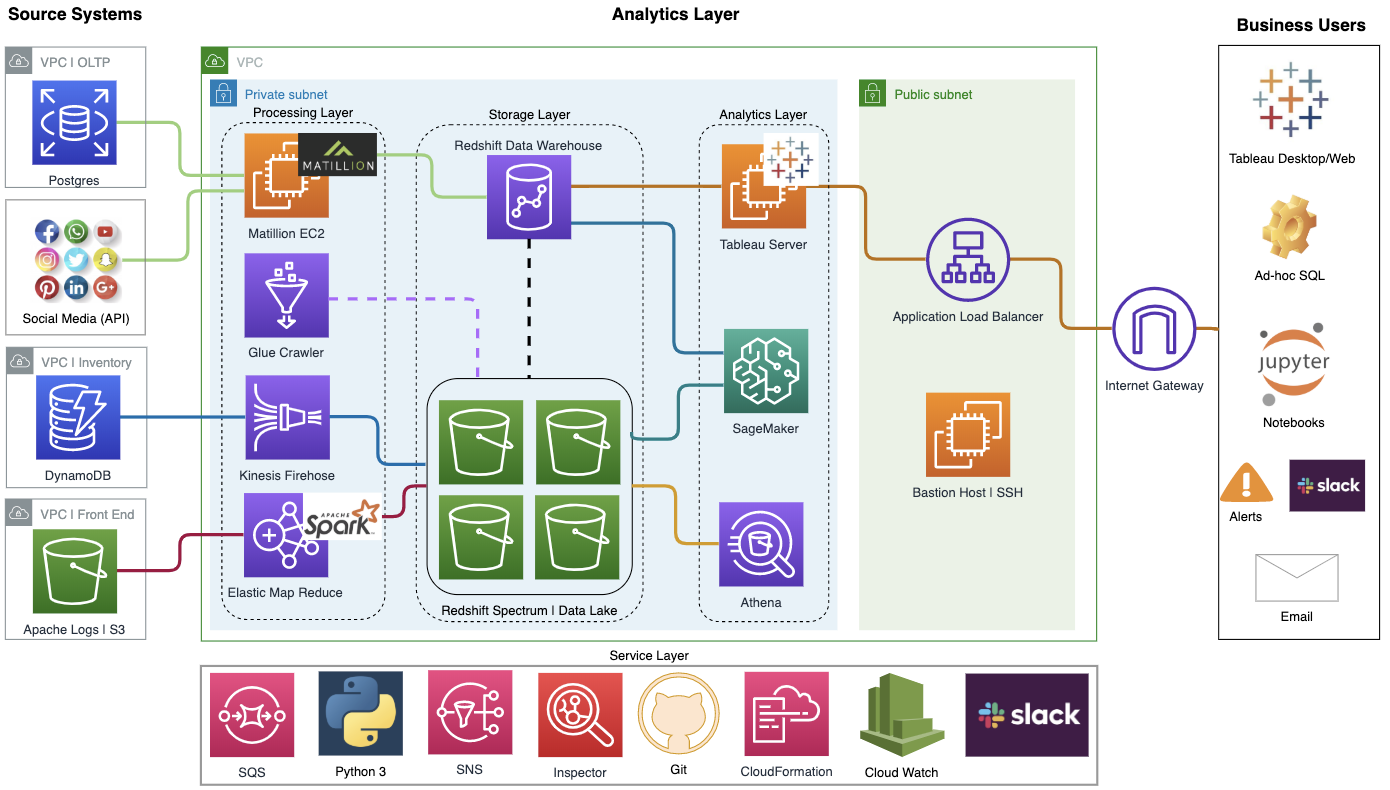

Let's see why it might be necessary to migrate to the cloud, and what Amazon gained from moving its internal infrastructure to Redshift and the NoSQL database DynamoDB. We will look at the difference between SMP and MPP, and between ETL and ELT, and try to understand why big data needs the cloud.

If you already know what has been happening in the industry in recent years, skip straight to the specific case. Read on below the cut: I have prepared a summary of the main points of the talk.

Telemetry from each light bulb

Large companies show a very noticeable trend towards building integrated ecosystems around their users. You wake up, go to brush your teeth and at the same time look through the news in a multimedia mirror. The Alexa speaker plays upbeat music in the morning and reminds you of today's meetings. You order fresh coffee with home delivery, as the old supply is running out. You get into the car, and there is Alexa again, integrated with the car's multimedia system and keeping you company on the road. Add a smart bracelet, headphones, phone apps and thousands of other sources of information.

This is both a slightly frightening future that is rapidly arriving from all directions and an attempt by companies to create additional value for the end consumer. You have to admit it's cool when, for example, under the Amazon Key In-Car program your purchases are delivered directly to the trunk of your car in the parking lot. I now live in Canada, and such integrations make life much more comfortable. For the company, this is also very valuable data for sales targeting, demand forecasting, logistics optimization and more. Win-win.

There is one problem. As I said, there is a strong feeling that companies often collect data on an excessive scale in the hope of monetizing it in the future. And that means terabytes: terabytes of poorly structured information that continuously flows onto the company's servers, devouring network, computing and storage resources. That is why using resources optimally and keeping computation fast is so important. You also need to give business analysts a usable interface that does not require expert knowledge of building cloud infrastructure. This is why many large companies have moved towards the cloud.

There is no cloud

Cloud technology is a buzzword that everyone is pretty much tired of. No doubt it looks solid in a company's financial statements and official presentations. Nevertheless, at the hardware level these are all the same good old servers located in data centers around the world. However, cloud computing needs more than just a convenient virtualization console. The main feature of a cloud is fully dynamic management of resources and their automatic scaling when necessary:

- Compute.

- Storage.

- Network resources and transport.

- Database.

With such an infrastructure you use your resources much more fully, which at large scale can result in significant savings.

For small companies, this approach can also be very attractive. Imagine that you are planning to purchase new hardware for your infrastructure next year. It is very difficult to predict the exact load, which depends on many factors. For example, your product suddenly becomes wildly popular after a successful publication on Habr, a whole crowd of customers rushes to you and leaves wildly disappointed because you did not plan for such peak loads. The reverse can also happen: you overestimate demand, buy excess capacity and end up with idle equipment, which effectively takes much-needed money out of the company's turnover. Betting solely on purchased hardware capacity is almost always an extremely slow process, and it certainly loses on adaptability in a rapidly changing market.

Partial or complete migration to the cloud suits such situations: the cloud serves as a kind of capacitor that smooths out consumption spikes, or even provides your entire infrastructure.
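The trade-off between purchased and elastic capacity can be illustrated with a small sketch. The demand figures, the capacity values and the scaling step below are invented for illustration, and the elastic model is idealized: it assumes capacity can be resized instantly every hour, which real autoscaling does not guarantee.

```python
# Hypothetical hourly demand in requests per second, with one sharp spike.
demand = [40, 45, 50, 300, 280, 60, 50, 45]


def fixed_capacity(demand, capacity):
    """Return (unserved, idle) totals for a fixed amount of purchased capacity."""
    unserved = sum(max(d - capacity, 0) for d in demand)
    idle = sum(max(capacity - d, 0) for d in demand)
    return unserved, idle


def elastic_capacity(demand, step=50):
    """Return (unserved, idle) when capacity is resized each hour.

    Capacity is rounded up to the next multiple of `step`, so demand is
    always covered and only the rounding remainder stays idle.
    """
    idle = 0
    for d in demand:
        capacity = -(-d // step) * step  # ceiling to a multiple of step
        idle += capacity - d
    return 0, idle


print(fixed_capacity(demand, 100))  # sized for a normal day: spike is not served
print(fixed_capacity(demand, 300))  # sized for the peak: mostly idle
print(elastic_capacity(demand))     # resized hourly
```

With these made-up numbers, sizing for a normal day leaves 380 units of demand unserved, sizing for the peak leaves 1530 units idle, and the idealized elastic variant serves everything with only 80 units idle.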

Types of clouds

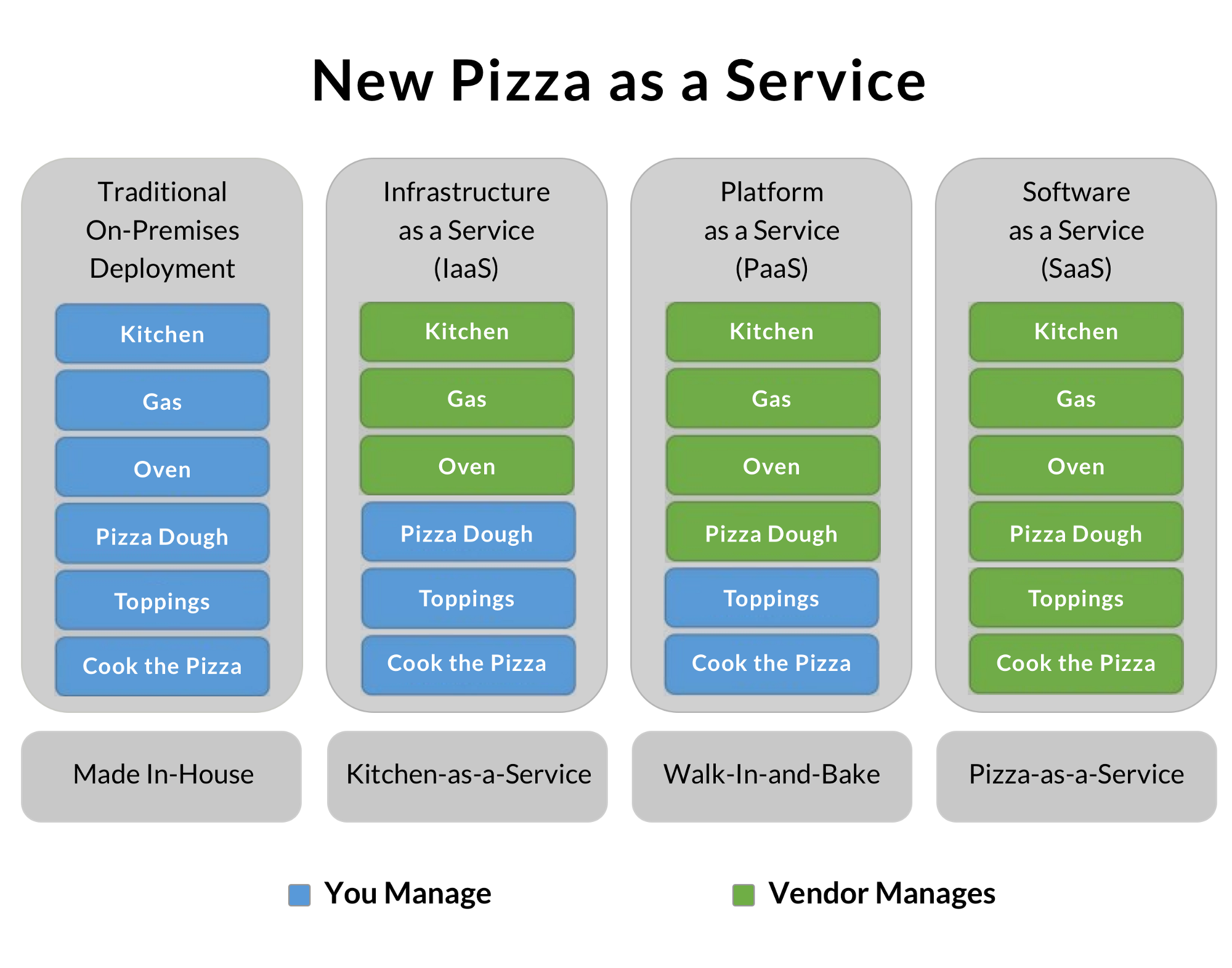

Depending on their business model, companies usually arrive at one of three ways of building cloud systems. A small business usually uses public clouds and saves on the corresponding specialists, focusing on its product. Very large companies resemble many separate companies connected by a common goal and brand, so they often build private clouds to achieve optimal resource utilization. Others use hybrid models, which let you process particularly sensitive, legally protected data locally and offload less critical tasks to external clouds.

Pizza as a service

I have always really liked this illustration, which shows well how much of your company's infrastructure work is delegated to the vendor.

The traditional on-premises option is to buy the ingredients, preheat the oven and cook the pizza yourself. Perfect! But you need to have all the equipment, ingredients and more.

IaaS is the infrastructure rental option. You rent a kitchen with all the equipment, bring your own ingredients and make an excellent pizza. Specially trained people clean the grease off the oven, and you do not need to worry about the sharpness of the knives and other trifles.

PaaS is platform as a service. The service gives you some extras on top of bare infrastructure. For example, Amazon Redshift as a data warehouse lets you save on DBAs and focus on the product. In our pizza example, this could be ready-made shaped dough that only needs to be thawed, spread with aromatic sauce, and sprinkled with mushrooms, slices of tender bacon and grated parmesan.

The final option is SaaS. In this case, you get a nearly finished product on which you build your business, for example running a blog on someone else's public platform. In our example, this is the most expensive but simplest option: ordering a ready-made pizza delivered to your home.
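The same split of responsibilities can be written down as a small lookup table. This is a simplified sketch: the layer names and the exact boundaries between models are assumptions based on the commonly drawn responsibility diagram, not something stated in the talk, and real services often blur these lines.

```python
# Layers of the stack from the bottom up (hypothetical, simplified naming).
LAYERS = ["hardware", "virtualization", "operating system", "runtime", "application"]

# How many layers, counted from the bottom, the vendor manages in each model.
VENDOR_MANAGED = {"on-premises": 0, "iaas": 2, "paas": 4, "saas": 5}


def responsibilities(model):
    """Return which layers the vendor manages and which remain with you."""
    n = VENDOR_MANAGED[model]
    return {"vendor": LAYERS[:n], "you": LAYERS[n:]}


for model in VENDOR_MANAGED:
    print(model, responsibilities(model))
```

In pizza terms, the higher the number, the less of the cooking you do yourself.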

A truckload of data. Snowmobile

There is an old joke from the 2000s: “A team of truck drivers was able to deliver 100,000 CDs from Odessa to Kiev in one night. Thus, they reached a data transfer rate of 2.43 terabytes per second over a distance of more than 500 km without the use of expensive cables.”

At that time it was just a joke. However, with today's continuous stream of photos, audio, video and other telemetry from every mobile phone, it stops being funny and turns into a real problem. When you do not have a dedicated high-bandwidth fiber link to a data center, moving large amounts of data to the cloud can be a huge problem. This is where services such as Amazon's Snowball come to the rescue.
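As a rough check of the arithmetic behind the truck joke, here is a small sketch. The 700 MB per CD, the 8-hour night and the 1 Gbit/s link used for comparison are my assumptions, not figures from the talk. Under them the trucks reach about 2.43 GB/s, so the digits in the joke match when read as gigabytes rather than terabytes per second.

```python
CD_BYTES = 700 * 10**6    # assumed capacity of one CD: 700 MB
CDS = 100_000             # number of CDs, from the joke
NIGHT_SECONDS = 8 * 3600  # assumed: "one night" lasts 8 hours


def truck_throughput(total_bytes, seconds):
    """Average throughput in bytes per second for a physical delivery."""
    return total_bytes / seconds


def link_transfer_days(total_bytes, link_bits_per_second):
    """Days needed to push the same payload through a fully used network link."""
    return total_bytes * 8 / link_bits_per_second / 86_400


total = CD_BYTES * CDS
print(f"payload: {total / 10**12:.0f} TB")
print(f"truck: {truck_throughput(total, NIGHT_SECONDS) / 10**9:.2f} GB/s")
print(f"1 Gbit/s link: {link_transfer_days(total, 10**9):.1f} days")
```

The same 70 TB would take about six and a half days over a fully saturated 1 Gbit/s link, which is why shipping physical storage remains competitive for large migrations.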