Performance Analysis of Cloud Applications Using Queuing Networks

Cloud providers offer software, platforms, and infrastructure as services for a wide range of business tasks. When choosing an architecture for an application hosted on a cloud platform, it is important to consider the specifics of the tasks the company plans to solve with it, because different types of business tasks call for different cloud application architectures. For example, resource-intensive computing tasks, such as simulation modeling and processing large amounts of numerical data, use the Big Computing architecture, which makes the computing power of thousands of cores available. For relatively simple tasks that can be demanding on computing resources, the Web Interface – Queue – Worker Role architecture is used. For ordinary business applications that do not require frequent updates, an N-tier architecture with horizontal tiers separated by a subnet is suitable.

Data centers that host cloud computing services often have heterogeneous environments with different server generations and hardware configurations. This heterogeneity directly affects the overall performance of the data center. Workload fluctuations are common in cloud computing environments, which makes the workload difficult to predict accurately. Modern cloud applications are moving toward a service-oriented architecture, deploying autonomous services instead of monolithic modules. A key feature of cloud architecture is scalability, which allows resources to be dynamically allocated and released according to workload requirements. Even so, workload fluctuations can affect the quality of service provided to users. Service quality is defined by service level agreements between customers and cloud service providers and is assessed using system metrics such as response time, latency, average workload, and service failure rate. Analysis of service quality in cloud computing often relies on queueing theory for simulation modeling and analysis. As cloud application architectures become more complex, single-queue models may not be sufficient, which leads to the adoption of finite-node queueing models for multi-server application tiers or queueing systems for individual tiers. It is also important to account for differences in CPU speed and capabilities across cloud VMs. Queueing theory is now widely used in cloud service modeling, but most studies still assume homogeneous characteristics and ignore the complexities associated with the heterogeneity of cloud infrastructures.

Let us consider a cloud service of the “Infrastructure as a Service” type, which provides a company with computing resources for various business tasks directly from the cloud. As part of this service, the client rents virtual servers, network infrastructure, communication channel protection, a load balancer, and access to an admin panel. The admin panel is used to manage access and user rights and to scale capacity, for example by changing the volume of cloud storage.

Architecture of the cloud infrastructure service system

Virtualization resources are provided in the form of virtual machines that vary in technical characteristics such as processor performance, memory capacity, and request processing intensity. An application in the cloud can be deployed on a cluster of heterogeneous virtual machines, and customers can select virtual machines based on their business needs. Service providers, in turn, strive to improve the quality of service they provide. Tracking key performance indicators such as response time, average number of requests, and throughput helps identify bottlenecks and determine the optimal number of virtual machines to allocate to an application. A data center that provides cloud computing services has a heterogeneous environment because it contains servers of several generations with different hardware configurations, sizes, and processor speeds. These servers are added to the data center gradually and are intended to replace existing machines. The heterogeneity of server platforms affects the performance of the data center. Workload fluctuations are also common in a cloud computing environment, and they affect the quality of service even though the architecture is scalable, that is, able to dynamically allocate and release resources depending on the current workload requirements.

Performance monitoring supplies the data on which the admission controller and the dynamic resource provisioning policy base their decisions; this policy ensures fault tolerance in the event of a virtual machine failure. The information obtained during performance monitoring is used for analysis, for planning future capacity requirements, and for preparing the necessary instructions when the provided resources need to be adjusted. Each virtual machine in the cluster used by the application has a local agent installed that queries the current performance parameters, such as CPU load and free memory. If a virtual machine reaches a critical level for the monitored parameters, it is excluded from resource allocation until the corresponding parameters return to normal.
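The exclusion rule described above can be illustrated with a minimal Python sketch. The threshold values, the `VmStatus` record, and the function names are hypothetical and chosen only for illustration; the source does not specify concrete critical levels or an agent interface.

```python
from dataclasses import dataclass

# Hypothetical critical levels; the source gives no concrete values.
CPU_CRITICAL = 0.90          # fraction of CPU in use
FREE_MEM_CRITICAL_MB = 256   # minimum acceptable free memory


@dataclass
class VmStatus:
    """Snapshot reported by the local agent of one virtual machine."""
    name: str
    cpu_load: float          # 0.0 .. 1.0
    free_memory_mb: int


def is_critical(vm: VmStatus) -> bool:
    """True if any monitored parameter has reached its critical level."""
    return vm.cpu_load >= CPU_CRITICAL or vm.free_memory_mb <= FREE_MEM_CRITICAL_MB


def eligible_vms(statuses):
    """Names of the VMs that may currently receive new work."""
    return [vm.name for vm in statuses if not is_critical(vm)]


statuses = [
    VmStatus("vm1", cpu_load=0.50, free_memory_mb=2048),
    VmStatus("vm2", cpu_load=0.95, free_memory_mb=2048),  # CPU critical
    VmStatus("vm3", cpu_load=0.20, free_memory_mb=100),   # memory critical
]
print(eligible_vms(statuses))
```

Because eligibility is recomputed from each fresh snapshot, a virtual machine returns to the pool automatically once its parameters fall back below the critical levels.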

Multi-tier architecture of cloud application

Let us consider a multi-tier architecture of a cloud application deployed on a cluster of virtual machines. Such an architecture is shown schematically in Figures 2 and 3.

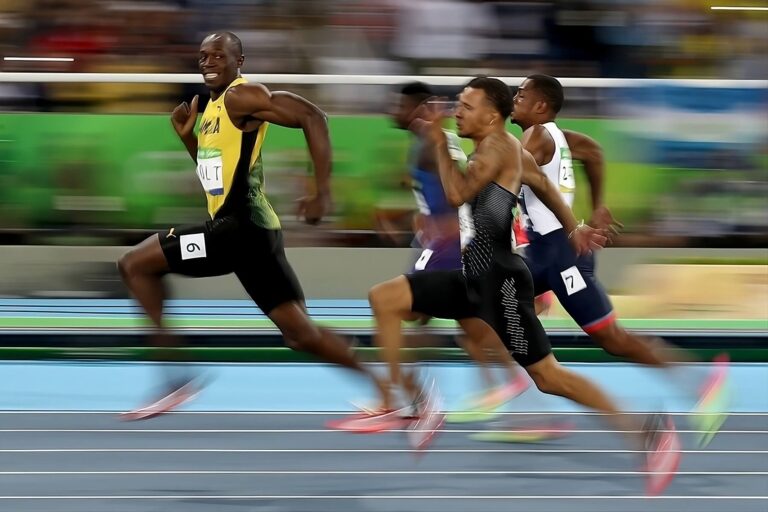

Three-tier cloud application diagram

The standard architecture of an N-tier cloud application consists of three generalized tiers: external, logical, and data storage. In practice, the logical tier may itself consist of several physical or logical tiers, depending on the business tasks being solved. The input of each logical tier is the processing result of the previous tier, and transitions are possible only to hierarchically lower tiers. This architecture is flexible: virtual machines can have different processing intensities, measured in units per second, and the intensity of request processing depends on the technical characteristics of the virtual machine, such as processor performance and memory. Requests arriving at the first logical tier, which is a load balancer, can come either from an actual user or from another web application. Request execution time can vary from milliseconds to minutes.

The load balancer accepts all incoming requests and distributes them among the virtual machines of the logical tier using algorithms that take into account the different processing intensities of the nodes, the load on each node, and virtual machine failures. The load balancer has up-to-date information about the currently active virtual machines and can stop directing traffic to a failed virtual machine, distributing incoming traffic among the remaining nodes of the logical tier. As soon as the virtual machine is operational again, the load balancer resumes using it. The application can use different balancing policies, such as random distribution, least number of distributions, and round-robin (the “Carousel” balancing algorithm). When a cluster consists of virtual machines of different capacities, the workload distribution policy must take into account the processing intensity of the nodes.
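As an illustration of a distribution policy that accounts for node processing intensity, node load, and failures, the following Python sketch sends each request to the healthy node with the smallest expected backlog time. This is one possible policy under stated assumptions, not the algorithm used in the paper; the `Node` fields and the functions `pick_node` and `dispatch` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """One virtual machine of the logical tier (hypothetical model)."""
    name: str
    service_rate: float   # requests per second the VM can process
    active: int = 0       # requests currently assigned to the VM
    healthy: bool = True  # set to False while the VM is failed


def pick_node(nodes):
    """Choose the healthy node with the smallest expected backlog time.

    The score (active + 1) / service_rate estimates how long the node
    would need to work off its current requests plus the new one.
    """
    candidates = [n for n in nodes if n.healthy]
    if not candidates:
        raise RuntimeError("no healthy nodes available")
    return min(candidates, key=lambda n: (n.active + 1) / n.service_rate)


def dispatch(nodes):
    """Assign one incoming request and return the chosen node's name."""
    node = pick_node(nodes)
    node.active += 1
    return node.name


cluster = [Node("fast", service_rate=10.0), Node("slow", service_rate=4.0)]
print([dispatch(cluster) for _ in range(3)])  # faster node receives more work

cluster[0].healthy = False                    # simulate a VM failure
print(dispatch(cluster))                      # traffic goes to remaining node
```

Setting `healthy` back to `True` makes the node a candidate again on the next request, which mirrors the behavior of the load balancer described above once a failed virtual machine recovers.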

Carousel balancing algorithm

Unlike resource scheduling algorithms that simply queue processes and run each one to completion without changing its state, the Carousel balancing algorithm is preemptive. This means that the scheduler can take a process that is being executed and put it back in the queue, i.e. change the process state from “running” to “waiting for execution”. The Carousel scheduler gives each process a fixed number of cycles; if the process is still running when these cycles are used up, that is, it has neither entered the waiting state nor completed, the scheduler returns it to the ready state and executes the next process. The scheme of this algorithm is shown in Figure 4.
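The preemption step can be sketched in a few lines of Python. This is a generic round-robin simulation written for illustration, assuming every process is ready at time zero and never blocks; the function name `round_robin`, the process names, and the burst times are hypothetical.

```python
from collections import deque


def round_robin(burst_times, quantum):
    """Simulate round-robin scheduling with a fixed time quantum.

    burst_times maps a process name to its total required run time.
    Returns (finished, timeline): the completion order and the list of
    (process, time run) slices in execution order.
    """
    ready = deque(burst_times.items())   # (name, remaining time)
    finished, timeline = [], []
    while ready:
        name, remaining = ready.popleft()
        run = min(quantum, remaining)    # run for at most one quantum
        timeline.append((name, run))
        remaining -= run
        if remaining > 0:
            ready.append((name, remaining))  # preempt: back to ready queue
        else:
            finished.append(name)            # process completed
    return finished, timeline


finished, timeline = round_robin({"A": 5, "B": 2, "C": 4}, quantum=2)
print(finished)   # ['B', 'C', 'A']
print(timeline)   # [('A', 2), ('B', 2), ('C', 2), ('A', 2), ('C', 2), ('A', 1)]
```

Process A is preempted twice because its run time exceeds the quantum, while B finishes within its first slice and never re-enters the queue.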

Queuing network describing the operation of a three-tier cloud application

The application is three-tiered. The first tier consists of one virtual machine acting as a load balancer. The load balancer uses a special algorithm to distribute incoming traffic between five virtual machines located on the second tier. The load balancer plays an important role in ensuring the efficiency of the application. The second tier consists of five virtual machines and represents various business processes that implement the logic and functionality of the application. The use of several virtual machines ensures fault tolerance in the event of a failure of one of the business tier nodes. The third tier is a data warehouse, presented in this paper as a replicated database.

Each node simulates the operation of a virtual machine launched on a cloud server, and the virtual machines are connected in a cluster. Requests can be processed at, and leave the system from, the second and third tiers; direct communication between the first and third tiers that bypasses the second tier is excluded. Each node is an M/M/1 queueing system with a Poisson input flow, exponential service time, and an unlimited waiting queue. The probability of transition from state i to state i – 1 of the process