Noisy and stressful? Or noisy and fun? Your phone can hear the difference

Qualcomm’s latest smartphone chips will be able to characterize the surrounding soundscape thanks to British startup Audio Analytic.

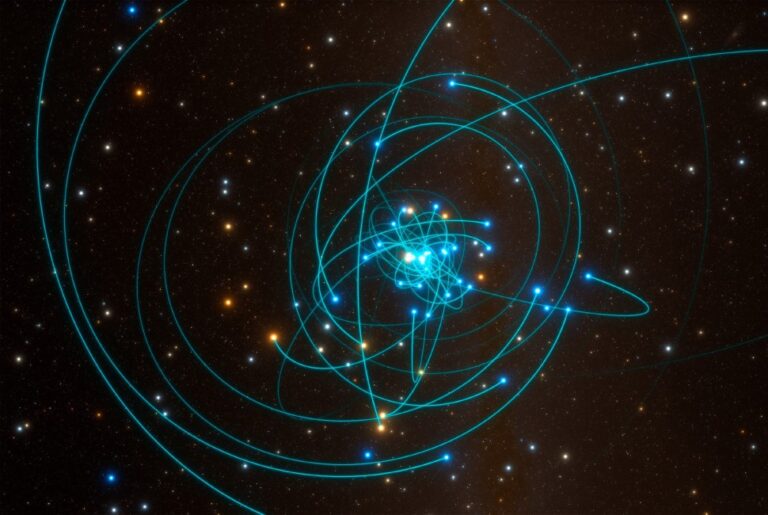

Audio Analytic can characterize the surrounding soundscape as well as identify individual sounds, such as a smoke alarm (pictured).

Smartphones have been able to recognize wake words like “Hey Siri” and “Okay Google” for several years without draining the battery. These wake word systems run on dedicated low-power processors built into the phone’s larger chipset. They rely on neural networks trained to recognize a wide range of voices, accents, and speech patterns. But they recognize only their wake words. More general algorithms for recognizing all speech require the phone’s more powerful processors.

Qualcomm today announced that the Snapdragon 888 5G, its latest mobile chipset, will include additional software in the part of the chip that houses the wake word recognition engine.

The compact sound recognition software ai3-nano, created by startup Audio Analytic (Cambridge, UK), will use the Snapdragon’s low-power processor to listen for sounds beyond speech. Depending on the apps that smartphone manufacturers provide, phones will be able to respond to sounds such as a doorbell, boiling water, a crying baby, or typing. The library currently holds roughly 50 sounds and is expected to grow to 150-200 soon.

The first application available for this sound recognition system is Audio Analytic’s AI Acoustic Scene Recognition. Rather than listening for a single sound, it monitors the characteristics of all surrounding sounds to classify the environment as chaotic, lively, boring, or calm. Audio Analytic CEO and founder Chris Mitchell explains:

“An environment has two aspects: eventfulness, which refers to how many individual sounds are going on, and pleasantness, which is how pleasant we find them. Say I went for a run in the park and there was a lot of birdsong. I would most likely enjoy that, so the environment would be classified as ‘lively.’ Or there may be an environment with many unpleasant sounds. That would be called ‘chaotic.’”

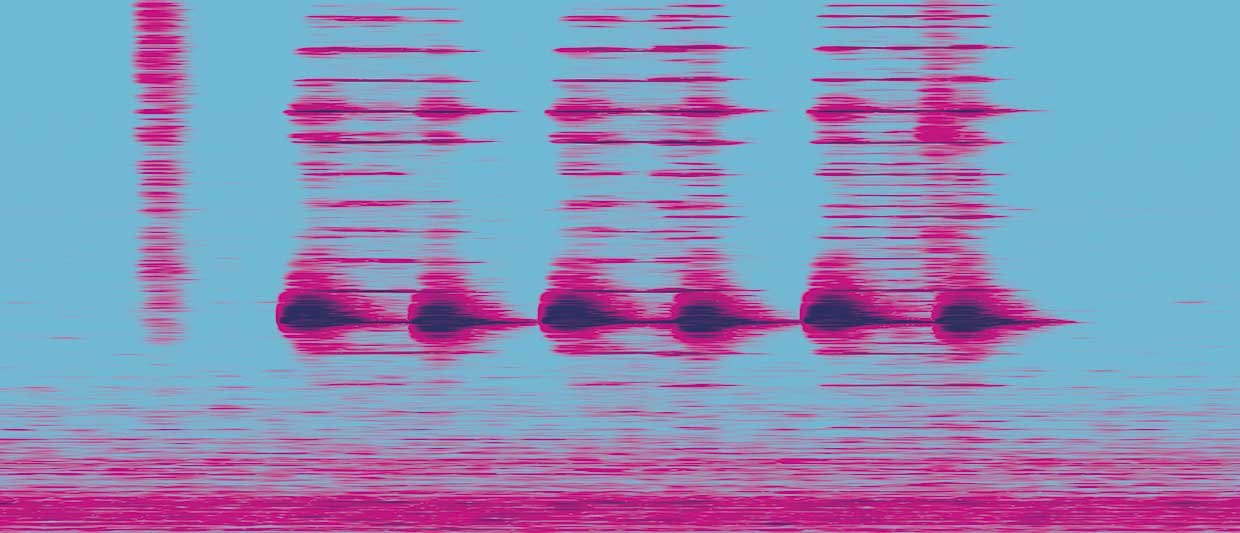

Mitchell’s team selected these four categories after analyzing research on sound perception. They then used a specially crafted dataset of 30 million audio recordings to train the neural network.
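The two-axis scheme Mitchell describes can be pictured as a simple quadrant lookup. The sketch below is only an illustration and not Audio Analytic's method: the function name `classify_scene`, the scores in the range 0 to 1, and the 0.5 threshold are all hypothetical. The placement of "calm" and "boring" in the uneventful quadrants is also an assumption, since the article spells out only "lively" and "chaotic".

```python
def classify_scene(eventfulness: float, pleasantness: float,
                   threshold: float = 0.5) -> str:
    """Map two hypothetical scores in [0, 1] to one of four scene labels."""
    for name, value in (("eventfulness", eventfulness),
                        ("pleasantness", pleasantness)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")

    eventful = eventfulness >= threshold
    pleasant = pleasantness >= threshold

    if eventful:
        # Many sounds going on: pleasant -> lively, unpleasant -> chaotic
        # (these two labels follow Mitchell's description).
        return "lively" if pleasant else "chaotic"
    # Few sounds going on: the calm/boring split is an assumption.
    return "calm" if pleasant else "boring"


# A park full of birdsong: many sounds, mostly pleasant.
print(classify_scene(eventfulness=0.9, pleasantness=0.8))
```

In a real system the two scores would come from a trained neural network rather than being passed in by hand; the lookup only shows how two axes yield four categories.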

What a mobile device does with this new awareness of ambient sound will depend on the manufacturers using the Qualcomm platform. Mitchell has several ideas:

“For example, the noise environment of a subway train can be described as ‘boring,’ so you’ll want to turn up active noise canceling in your headphones to remove the low hum. But when you get off the subway, you need more transparency in order to hear, for example, a cyclist or a car horn, so the noise cancellation should be reduced. On a smartphone, you could also adjust notifications to the type of environment: whether the phone stays silent, vibrates, or rings, and which ringtone it uses.”
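A device maker could express this kind of behavior as a small policy table keyed by scene label. The following is a minimal sketch under stated assumptions: the `DevicePolicy` type, the `policy_for` function, and every numeric value are hypothetical, and only the "boring means stronger noise canceling" entry follows Mitchell's subway example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DevicePolicy:
    anc_level: float        # 0.0 = full transparency, 1.0 = maximum noise canceling
    notification_mode: str  # one of "silent", "vibrate", "ring"


# Hypothetical policy table. Only the high anc_level for "boring" follows
# Mitchell's subway example; every other value is an illustrative guess.
POLICIES = {
    "boring": DevicePolicy(anc_level=1.0, notification_mode="vibrate"),
    "chaotic": DevicePolicy(anc_level=0.3, notification_mode="ring"),
    "lively": DevicePolicy(anc_level=0.2, notification_mode="vibrate"),
    "calm": DevicePolicy(anc_level=0.0, notification_mode="silent"),
}

# Fallback for unrecognized scenes: stay transparent so the wearer
# can still hear traffic.
DEFAULT_POLICY = DevicePolicy(anc_level=0.0, notification_mode="vibrate")


def policy_for(scene: str) -> DevicePolicy:
    """Return the settings for a scene label, or the safe default."""
    return POLICIES.get(scene, DEFAULT_POLICY)


# On the subway the scene is "boring", so noise canceling goes up.
print(policy_for("boring"))
```

Keeping the mapping in a table rather than in branching code would let each manufacturer tune or replace the values without touching the recognition engine.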

I first met Mitchell two years ago, when the company was demonstrating prototypes of how its audio analysis technology would work in smart speakers. Since then, Mitchell says, products that use the company’s technology have become available in about 150 countries. Most of these are security systems that recognize the sound of breaking glass, a smoke alarm, or a child’s cry.

The Audio Analytic approach, as Mitchell explained to me, involves using deep learning to break sounds down into standard components. He uses the word “ideophones” to refer to these components. The term also refers to the representation of a sound in speech, such as “quack.” Once sounds are encoded as ideophones, each sound can be recognized in the same way that digital assistants recognize their wake words. This approach allows the ai3-nano engine to take up only 40 KB and run entirely on the phone, with no connection to the cloud.

Mitchell suggests that once this technology is introduced into smartphones, its applications will grow beyond security and environment recognition. He expects early examples to include media tagging, games, and accessibility.

According to him, media tagging would let the system search videos recorded on the phone by sound. A parent could, for example, easily find a clip in which their child is laughing. Children, in turn, could use the technology in games that teach them what sounds animals make.

In terms of accessibility, Mitchell sees the technology as a boon for people with hearing impairments, who already rely on mobile phones as assistive devices. “This will help them detect and identify a knock on a door, a dog barking, or a smoke alarm,” he says.

After rolling out these additional sound recognition capabilities, the company plans to work on identifying context that goes beyond specific events or scenes.

“We have started doing early research in this area. For example, our system might say, ‘Looks like you are making breakfast’ or ‘Looks like you are about to leave the house.’”

Applications could then use this information to arm a security system or to adjust lighting and temperature settings.
