Migrating virtual servers from oVirt to VMware

Author: Sultan Usmanov, DevOps at Hostkey

While optimizing our fleet of physical servers and consolidating virtualization, we faced the task of moving virtual servers from oVirt to VMware. An additional requirement was to keep the ability to roll back to the oVirt infrastructure if anything went wrong during the migration, since for a hosting company the stability of the equipment is a priority.

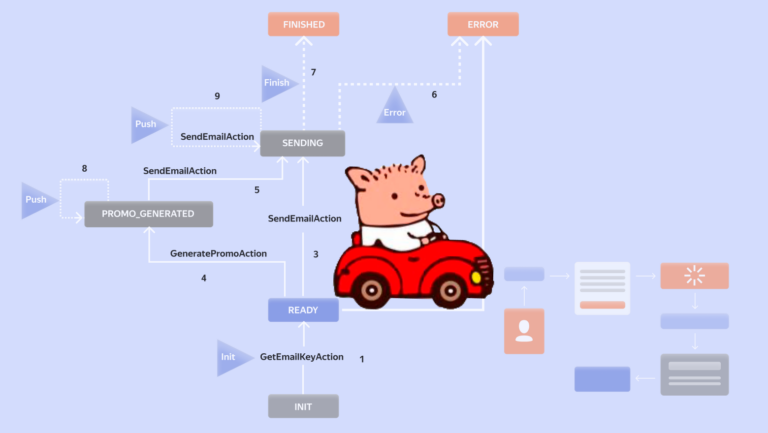

The following infrastructure was deployed for server migration:

An NFS server mounted on the oVirt, ESXi, and mediation servers;

A mediation server where RAW and qcow2 disks were converted to VMDK format.

Below are the scripts, commands, and steps that we used during the migration.

To partially automate the process and cut the time spent connecting to the oVirt servers, copying a virtual server's disk, and placing it on NFS for conversion, we wrote a bash script that ran on the proxy server and performed the following actions:

Connected to the Engine server;

Found the desired virtual server;

Shut the server down;

Renamed it (server names in our infrastructure must be unique);

Copied the server disk to the NFS share mounted on the oVirt and ESXi servers.

Since we were limited in time, a script was written that only works with servers that have one disk.
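Because the script handles only single-disk servers, it can help to stop early when the disk lookup returns anything other than one ID. Below is a minimal sketch, assuming the disk IDs arrive as the newline-separated output of the script's `jq -r '.disk[].id'` call. The function name `has_single_disk` is hypothetical and not part of the original script.

```bash
#!/usr/bin/env bash

# Hypothetical helper: succeeds only if exactly one disk ID was returned.
# $1 is the raw output of jq -r '.disk[].id' (one ID per line).
has_single_disk() {
  local -a ids
  # Split on spaces, tabs, and newlines; disk IDs are UUIDs and contain none.
  # read returns non-zero at end of input, hence the "|| true".
  read -r -d '' -a ids <<< "$1" || true
  (( ${#ids[@]} == 1 ))
}
```

In the script, it could be called right after `disk_id` is set, for example `has_single_disk "$disk_id" || { echo "expected exactly one disk" >&2; exit 1; }`.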

bash script

#!/usr/bin/env bash

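##Source

engine_fqdn_src="ENGINE_FQDN"   # replace with the FQDN of the Engine server

engine_api_src="https://${engine_fqdn_src}/ovirt-engine/api"

guest_id=$1   # name of the virtual server to migrate

##Common vars

engine_user="ENGINE_USER"   # replace with a user allowed to manage virtual servers

engine_pass="ENGINE_PASSWORD"   # replace with that user's password

export_path=/mnt/autofs/nfs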

OVIRT_SEARCH() {

  local engine_api=$1

  local api_target=$2

  local search=""

  ##Add the optional "?search=field=value" query only when both parts are given

  if [[ -n $3 && -n $4 ]]; then

    search="?search=$3=$4"

  fi

  curl -ks --user "$engine_user:$engine_pass" \
    -X GET -H 'Version: 4' -H 'Content-Type: application/json' \
    -H 'Accept: application/json' "${engine_api}/${api_target}${search}" |
    jq -Mc .

}

##Source

vm_data=$(OVIRT_SEARCH "$engine_api_src" vms name "$guest_id")

disk_data=$(OVIRT_SEARCH "$engine_api_src" disks vm_names "$guest_id")

vm_id=$(echo "$vm_data" | jq -r '.vm[].id')

host_ip=$(echo "$vm_data" | jq -r '.vm[].display.address')

host_id=$(echo "$vm_data" | jq -r '.vm[].host.id')

##host_ip must be set before the host lookup

host_data=$(OVIRT_SEARCH "$engine_api_src" hosts address "$host_ip")

disk_id=$(echo "$disk_data" | jq -r '.disk[].id')

stor_d_id=$(echo "$disk_data" | jq -r '.disk[].storage_domains.storage_domain[].id')

##Shutdown and rename vm

post_data_shutdown="<action/>"

post_data_vmname="<vm><name>${guest_id}-</name></vm>"

##Shutdown vm (graceful)

curl -ks --user "$engine_user:$engine_pass" \
  -X POST -H 'Version: 4' \
  -H 'Content-Type: application/xml' -H 'Accept: application/xml' \
  --data "$post_data_shutdown" \
  "${engine_api_src}/vms/${vm_id}/shutdown"

##Give the guest time to power off

sleep 60

##Force stop in case the vm is still running

curl -ks --user "$engine_user:$engine_pass" \
  -X POST -H 'Version: 4' \
  -H 'Content-Type: application/xml' -H 'Accept: application/xml' \
  --data "$post_data_shutdown" \
  "${engine_api_src}/vms/${vm_id}/stop"

##Changing vm name

curl -ks --user "$engine_user:$engine_pass" \
  -X PUT -H 'Version: 4' \
  -H 'Content-Type: application/xml' -H 'Accept: application/xml' \
  --data "$post_data_vmname" \
  "${engine_api_src}/vms/${vm_id}"

##Copying disk to NFS mount point

scp -r "root@${host_ip}:/data/${stor_d_id}/images/${disk_id}" /mnt/autofs/nfs

Servers with two disks were migrated manually, following the steps described below.
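The script waits a fixed 60 seconds between the graceful shutdown and the forced stop. An alternative is to poll the VM status and continue as soon as the machine is down. The sketch below rests on stated assumptions: `wait_until_down` is a hypothetical name, and the status string `down` is assumed to be what the oVirt API reports for a stopped VM.

```bash
#!/usr/bin/env bash

# Hypothetical helper: poll a status command until it prints "down".
# $1 = command or function name that prints the current VM status
# $2 = timeout in seconds (default 60)
# $3 = poll interval in seconds (default 5)
wait_until_down() {
  local get_status=$1 timeout=${2:-60} interval=${3:-5} waited=0
  while (( waited < timeout )); do
    if [[ "$("$get_status")" == "down" ]]; then
      return 0
    fi
    sleep "$interval"
    waited=$(( waited + interval ))
  done
  return 1   # still running after the timeout; the caller may force a stop
}
```

With the script's own helper, the status command could be a small function such as `vm_status() { OVIRT_SEARCH "$engine_api_src" "vms/${vm_id}" | jq -r '.status'; }` (the `.status` field name is also an assumption), and the forced stop would be sent only when `wait_until_down vm_status 60 5` returns non-zero.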

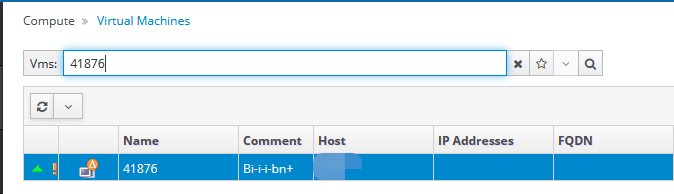

Connecting to the Engine and Finding the Right Server

In the “Compute” >> “Virtual Machines” section, enter the name of the server to be migrated in the search box. Find the server and shut it down:

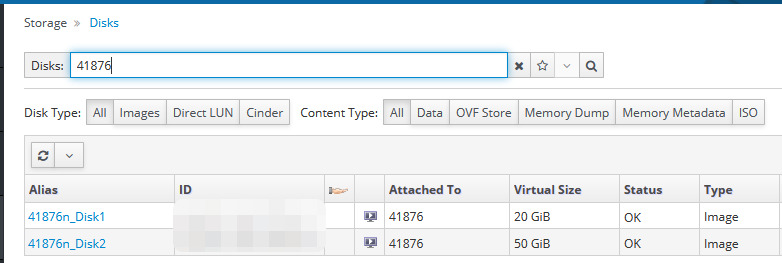

Go to the “Storage” >> “Disks” section and find the disks of the server to be migrated:

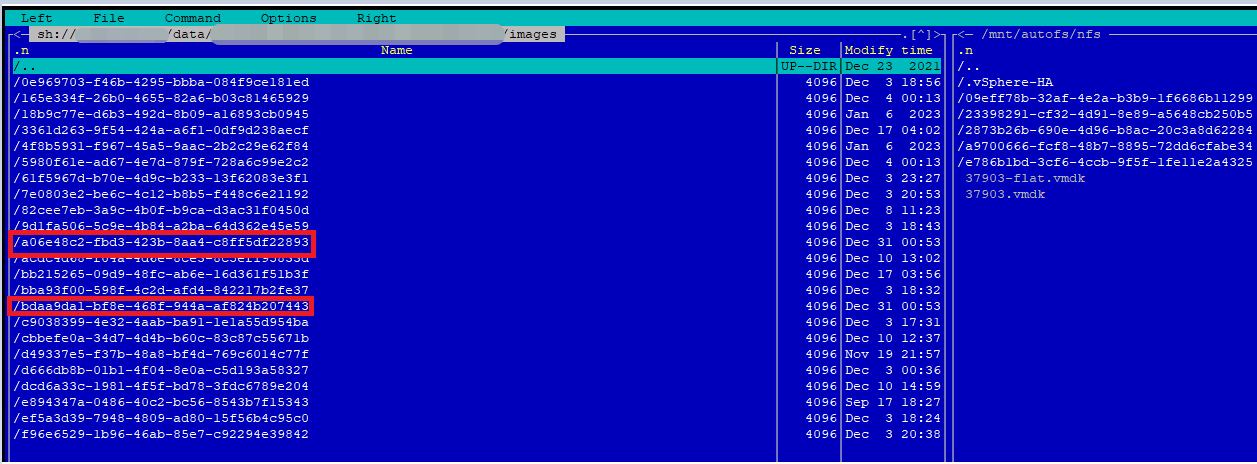

In this window, note the IDs of the disks attached to the server being migrated. Then connect to the proxy server via SSH and, from there, to the oVirt host where the virtual server is located. In our case, we used Midnight Commander to connect to the physical server and copy the necessary disks.
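For servers with more than one disk, the copy step can also be scripted instead of done by hand. The sketch below only prints the `scp` commands so they can be reviewed before running. `print_copy_cmds` is a hypothetical name, and the `/data/(storage domain ID)/images/(disk ID)` layout is taken from the bash script above and may differ on other hosts.

```bash
#!/usr/bin/env bash

# Hypothetical helper: print one scp command per disk ID.
# $1 = oVirt host address, $2 = storage domain ID, $3 = destination directory,
# remaining arguments = disk IDs noted in "Storage" >> "Disks".
print_copy_cmds() {
  local host=$1 stor_d_id=$2 dest=$3 disk_id
  shift 3
  for disk_id in "$@"; do
    printf 'scp -r root@%s:/data/%s/images/%s %s\n' \
      "$host" "$stor_d_id" "$disk_id" "$dest"
  done
}
```

Printing rather than executing keeps the step easy to check: the output can be inspected and then pasted into the shell on the proxy server.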

After you have copied the disks, check their format (raw or qcow2). You can do this with the command qemu-img info followed by the disk name. Once you know the format, convert the disk with the command:

qemu-img convert -f qcow2 (disk name) -O vmdk (disk name).vmdk -o compat6

In our case, we are converting from qcow2 format to vmdk.
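The format check and the conversion can be combined so that the `-f` value always matches what `qemu-img info` reports. This is a minimal sketch: `print_convert_cmd` is a hypothetical helper that only prints the command, and it accepts just the two source formats mentioned in this article.

```bash
#!/usr/bin/env bash

# Hypothetical helper: print the qemu-img command for one disk.
# $1 = path to the source disk, $2 = its format as reported by qemu-img info
print_convert_cmd() {
  local src=$1 fmt=$2
  case $fmt in
    raw|qcow2) ;;   # the two source formats handled here
    *) echo "unsupported source format: $fmt" >&2; return 1 ;;
  esac
  # %q shell-quotes the paths in case they contain special characters.
  printf 'qemu-img convert -f %s -O vmdk -o compat6 %q %q.vmdk\n' \
    "$fmt" "$src" "$src"
}
```

The format can be read with `qemu-img info --output=json` on the disk, piped to `jq -r '.format'`, and passed as the second argument.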

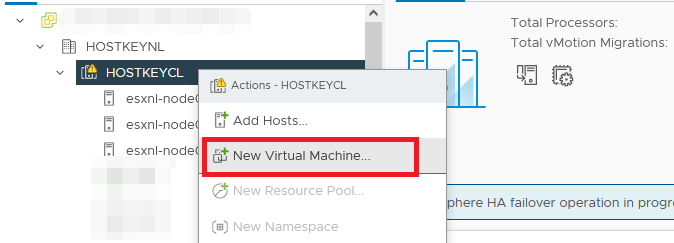

When the conversion is finished, open vCenter (or the web interface of a standalone ESXi server) and create a virtual server without a disk. In our case, vCenter was installed.

Create a virtual server

Since we have a cluster configured, you need to right-click on it and select the option “New Virtual Machine”:

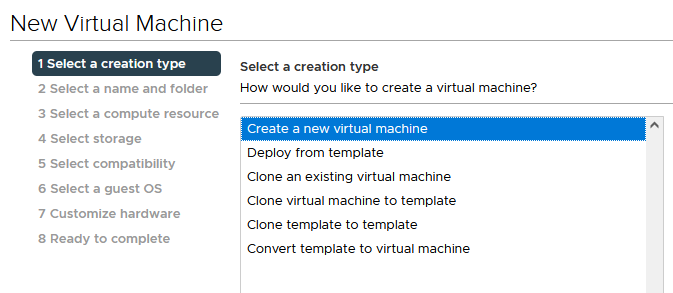

Then select “Create new virtual machine”:

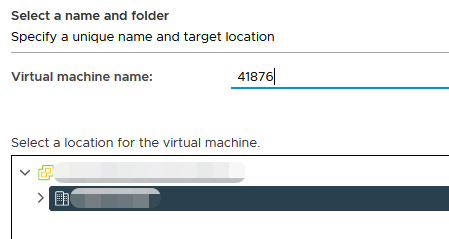

Set the server name and click “Next”:

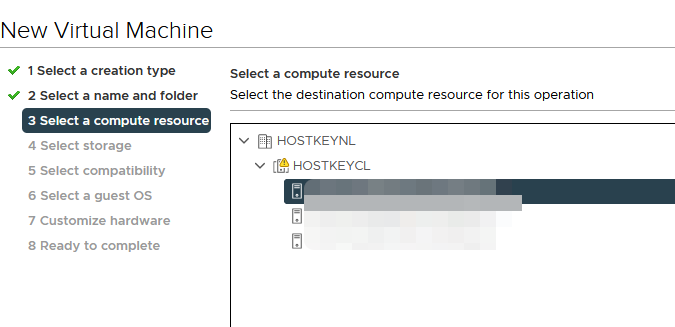

Select the physical server where you plan to host the virtual server and click “Next”:

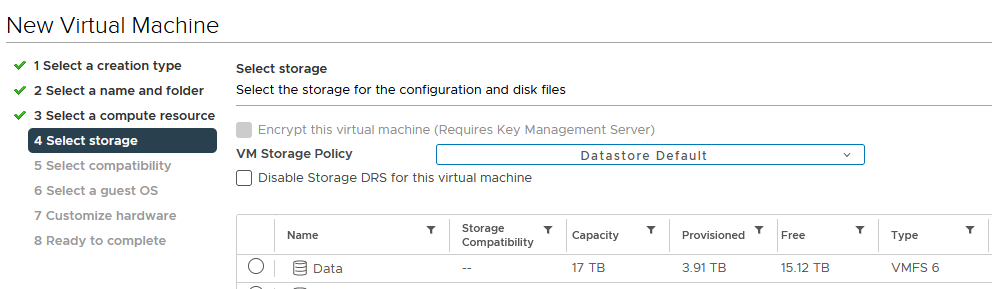

Select the storage where the server will be placed and click “Next”:

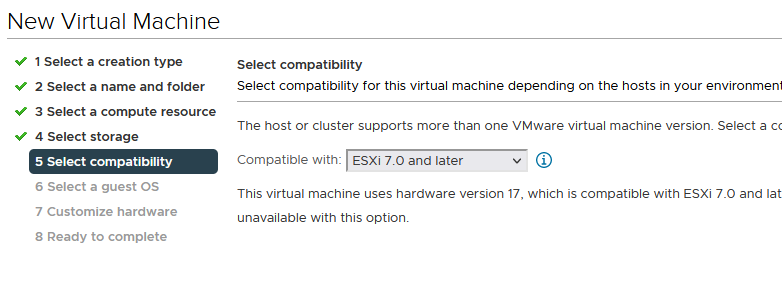

Specify the ESXi version for compatibility. If there are version 6 servers in the infrastructure, select the corresponding version. In our case it is 7.

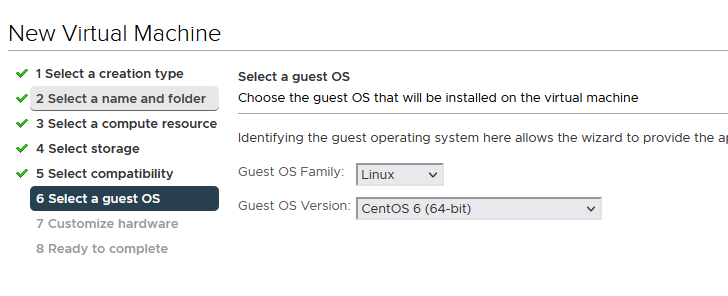

Select the operating system family and version installed on the server being migrated. In our case, this is Linux, CentOS 6.

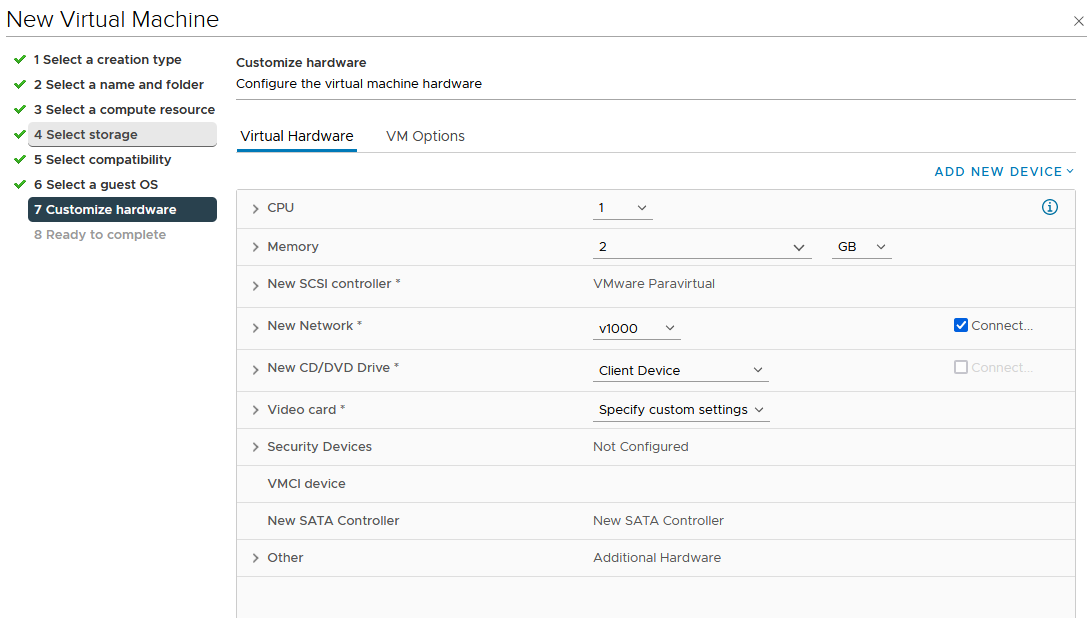

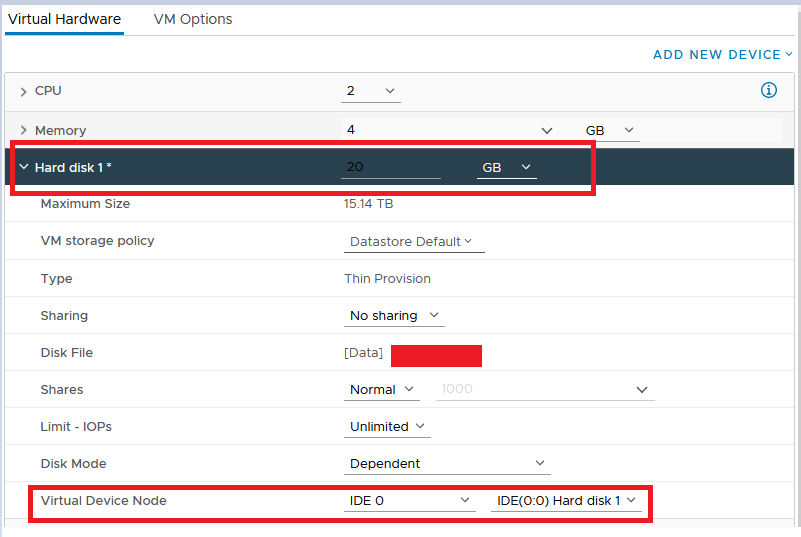

In the “Customize Hardware” window, set all parameters to match those of the source system. You also need to remove the default disk, because the converted disk will be connected instead.

If you want to keep the old MAC address on the network card, you must set it manually.

After creating the virtual server, connect to the ESXi host over SSH and convert the disk from thick to thin provisioning, specifying as the destination the folder of the server you created above.

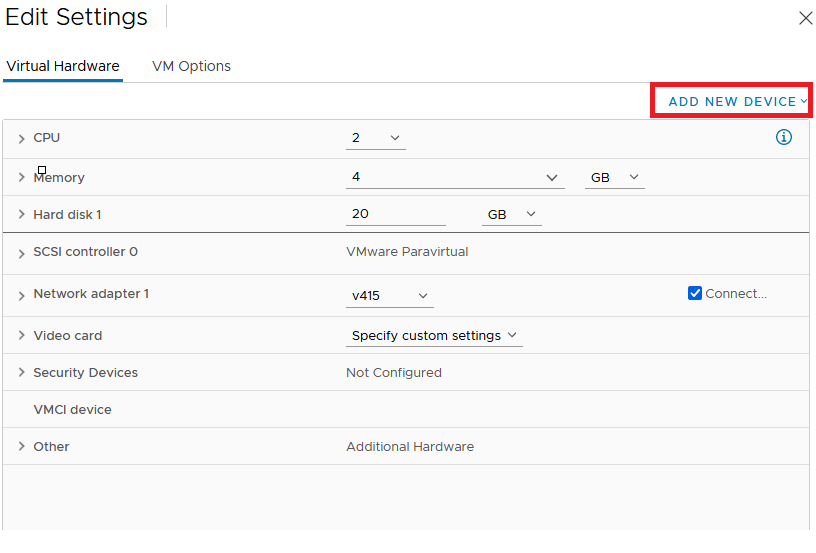

vmkfstools -i /vmfs/volumes/NFS/(disk name)/(disk name).vmdk -d thin /vmfs/volumes/(ESXi datastore name)/(server name)/(disk name).vmdk

The source here is the disk converted in the previous step. After a successful conversion, connect the disk to the server: go back to the ESXi or vCenter web interface, find the server, right-click its name, and select “Edit Settings”.

In the window that opens, click “ADD NEW DEVICE” on the right side and select “Existing Hard Disk”.

In our storage (the ESXi datastore), find the server and the disk that we converted earlier, and click “OK”.

As a result of these actions, the virtual server will be created.

For the server to start, open the disk settings and select an IDE controller in the “Virtual Device Node” section. Otherwise, the system will display the message “Disk not found”.

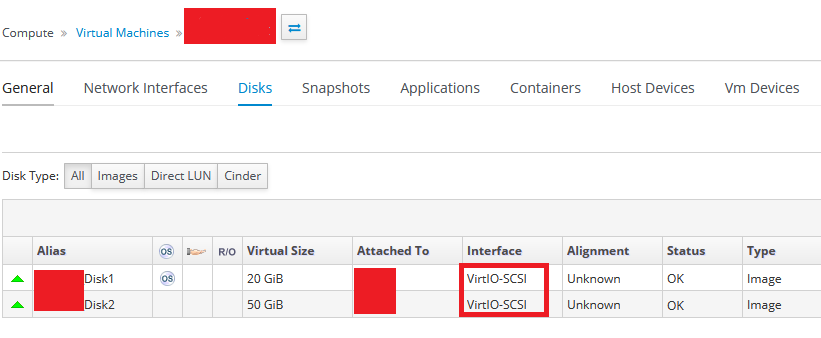

The steps described above for creating a virtual server and connecting a disk are enough for the system to start correctly if the disk interface on the source server was “Virtio-SCSI”. You can check the interface type in oVirt: in “Compute” >> “Virtual Machines”, find the server and go to “Disks”.

During the migration, we ran into a problem with servers that used the Virtio interface. This is an older controller, and its disks are named vda in /etc/fstab rather than sda, as on newer systems. To migrate such servers, we connected a LiveCD before starting the system and performed the following steps:

Boot from the LiveCD;

Create mount points and mount the disks, for example:

mount /dev/sda1 /mnt/sda1

Mount the system directories under /mnt/sda1 and enter the installed system via chroot by running the commands:

mount -t proc proc /mnt/sda1/proc

mount -t sysfs sys /mnt/sda1/sys

mount -o bind /dev /mnt/sda1/dev

mount -t devpts pts /mnt/sda1/dev/pts

chroot /mnt/sda1

After entering the chroot, change the disk names in fstab and rebuild the grub configuration file:

vim /etc/fstab

grub2-mkconfig -o /boot/grub2/grub.cfg

After completing these steps, you must restart the server.
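The fstab change can be previewed non-interactively before it is made in the editor. This is a sketch, assuming the entries use plain device paths such as /dev/vda1 (lines that use UUID= or LABEL= need no change). `fstab_vd_to_sd` is a hypothetical name.

```bash
#!/usr/bin/env bash

# Hypothetical helper: print the given fstab with /dev/vdXN device names
# rewritten to /dev/sdXN. The file itself is not modified.
fstab_vd_to_sd() {
  sed -E 's#^/dev/vd([a-z]+[0-9]*)([[:space:]])#/dev/sd\1\2#' "$1"
}
```

Inside the chroot, `fstab_vd_to_sd /etc/fstab > /etc/fstab.new` writes the result to a separate file, which can be compared with the original before replacing it.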

The described solution allowed us to migrate a large fleet of servers. On average, transferring a disk, applying the settings, and starting the server took 20 to 30 minutes for a 15-20 GB server and one and a half to two hours for a 100 GB server.