Matrix multiplication. Slow achievement of a mythical goal

In recent work, it was established new speed record for multiplying two matrices… It also marks the end of an era for the method that scientists have used for research for decades.

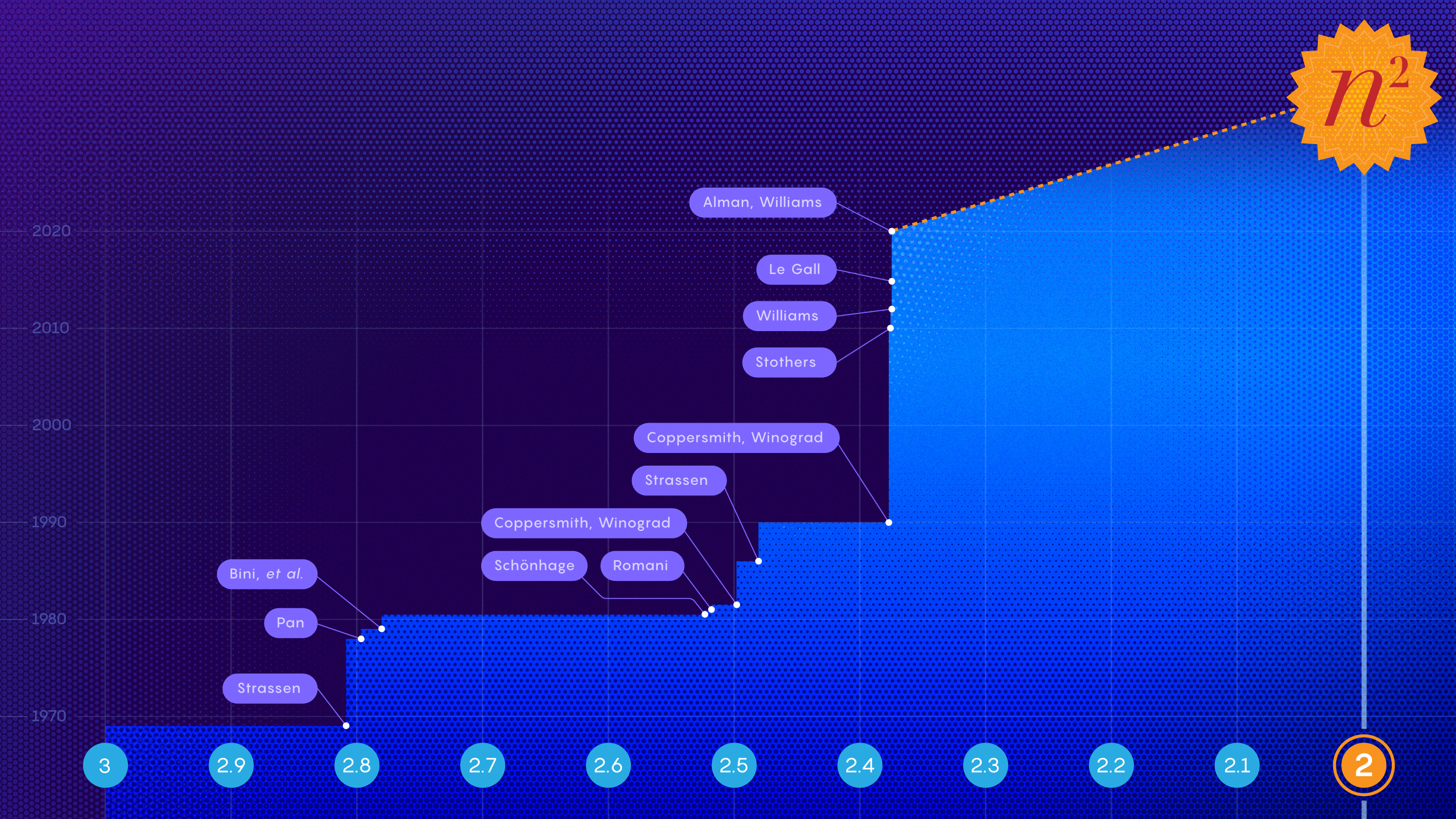

Mathematicians strive to achieve a mythical goal – the second degree (exponent two), that is, to multiply a pair of n x n matrices in just n2 steps. Researchers are getting closer to their goal, but will they ever be able to achieve it?

For computer scientists and mathematicians, the very idea of the “second degree” is associated with the idea of a perfect world.

“It is difficult to distinguish between scientific thinking and baseless dreams”, – admits Chris Umans from California Institute of Technology. “I want the degree to be two because it’s beautiful.”

In terms of the required number of steps “Second degree” is the ideal speed of execution one of the most fundamental mathematical operations is matrix multiplication. If the second degree is achievable, then the matrix multiplication can be performed as quickly as physically possible. If this is not the case, then we are stuck in a world that does not live up to our dreams.

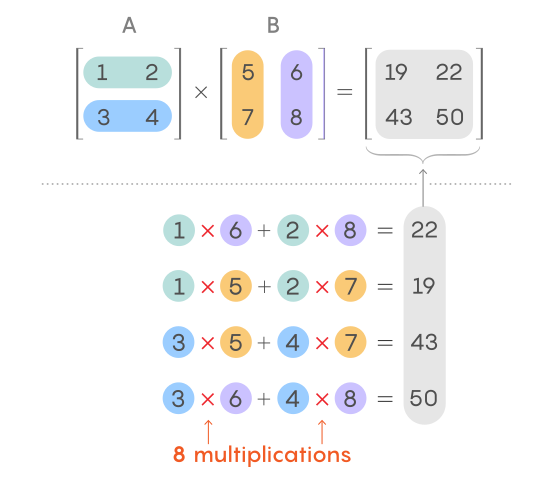

Matrices are arrays of numbers. When the two matrices agree (the number of columns in the first factor is equal to the number of rows in the second), they can be multiplied to get the third. For example, if you start with a 2 x 2 matrix pair, their product will also be a 2 x 2 matrix containing four elements. More generally, the product of a pair of n x n matrices is another n x n matrix with n2 elements.

Therefore, the smallest possible number of steps for multiplying pairs of matrices is n2, that is, the number of steps required to simply record the answer. Hence the name “second degree”.

And while no one knows for sure if this can be achieved, researchers continue to move in this direction.

Article, published in October, gets even closer to the goal and describes the fastest method of multiplying two matrices at the moment. The result you got Josh Alman, a doctoral student at Harvard University, and Virginia Wasilewska Williams from the Massachusetts Institute of Technology, decreases the degree of the previous best by about one hundred thousandth. This is really a great achievement in this area, achieved through painstaking work.

To get a better understanding of this process and how it can be improved, let’s start with a pair of 2 x 2 matrices, A and B. When calculating each element of their product, you use the corresponding row from A and the corresponding column from B. To get the top-right element , multiply the first number in the first row A by the first number in the second column B, then multiply the second number in the first row A by the second number in the second column B and add the two products.

Samuel Velasco / Quanta Magazine

This operation is known as getting a “dot product” rows with a column (sometimes called an “inner product”). To calculate other elements in the matrix product, repeat the procedure with the corresponding rows and columns.

In general, the classic 2 x 2 matrix multiplication method consists of eight multiplications and several additions. Typically, this method of multiplying two n x n matrices requires n3 multiplications.

As the size of matrices increases, the number of multiplications required to find their product grows much faster than the number of additions. To find the product of 2 x 2 matrices, only eight intermediate multiplications are required, and to find the product of 4 x 4 matrices, there are already 64 of them. However, the number of additions required to obtain the sum of these matrices is not that much different. Usually the number of additions is equal to the number of elements in the matrix, that is, four for 2 x 2 matrices and 16 for 4 x 4 matrices. This difference between addition and multiplication makes it clear why researchers measure the speed of matrix multiplication solely in terms of the number of multiplications required.

“Multiplications are everything,” says Umans. “The exponent in the end depends entirely on the number of multiplications. Additions disappear in a sense. “

For centuries, people believed that n3 Is the fastest way to multiply matrices… Reportedly in 1969 Volker Strassen set out to prove that it is impossible to multiply 2 x 2 matrices using less than eight multiplications. Apparently, he still could not find proof, and after a while he understood why: in fact, there is a way to do this using seven multiplications!

Strassen came up with a complex set of relations that allowed him to replace one of these eight multiplications with 14 additional additions. It might seem like the difference is completely insignificant, but it pays off because multiplication contributes more than addition. Finding a way to get rid of one multiplication for small 2 x 2 matrices, Strassen discovered a possibility that he could use when multiplying larger matrices.

“This tiny change translates into huge improvements in handling large matrices,” Williams says.

Virginia Wasilewska Williams of MIT and Josh Alman of Harvard University have discovered the fastest way to multiply two matrices in n2.3728596 steps. Jared Charney; Richard T.K. Hawk

Suppose you want to multiply a pair of 8 x 8 matrices. One way to do this is to split each large matrix into four 4 x 4 matrices so that each has four elements. Since the elements of a matrix can also be matrices, you can think of the original matrices as a pair of 2 x 2 matrices, each of the four elements of which is itself a 4 x 4 matrix. With some manipulation, each of these 4 x 4 matrices can be split into four matrices size 2 x 2.

The idea behind this multiple splitting of large matrices into smaller ones is that you can apply Strassen’s algorithm over and over again to smaller matrices and use his method to reduce the number of steps at each step. In general, Strassen’s algorithm increased the speed of matrix multiplication with n3 up to n2.81 multiplicative steps.

The next important step in the development of the idea took place in the late 1970s, when a fundamentally new approach to solving this problem appeared. It involves translating matrix multiplication into another computational linear algebra problem using objects called tensors. Tensorsused in this problem are three-dimensional arrays of numbers made up of many different parts, each of which looks like a small matrix multiplication problem.

Matrix multiplication and this tensor problem are in a sense equivalent to each other, but the researchers already had faster procedures to solve the latter. Thus, they were faced with the task of determining the “exchange rate” between them: What size matrices can be multiplied with the same computational costs that are required to solve the tensor problem?

“This is a very common concept in theoretical computer science: to transform tasks and draw analogies between them to show that they are equally simple or complex,” said Alman.

In 1981, Arnold Schönhage used this approach to prove that matrix multiplication can be done in n2.522 steps. Strassen later called this approach “Laser method”…

Over the past few decades, every improvement in matrix multiplication has come from improvements in the laser method as researchers have found more efficient ways to transform the problem. In their new proof, Alman and Williams blur the distinction between the two problems and show that it is possible to reduce the number of multiplications. “Overall, Josh and Virginia found a way to apply machine computing to laser and got the best results to date,” said Henry Cohn from Microsoft Research.

In their paper, the theoretical limit on the rate of matrix multiplication is improved to n2.3728596…

Also thanks to this research, Williams can regain the crown in the field of matrix multiplication, which she rightfully received in 2012 (n2.372873), and then conceded in 2014 François Le Gallu (n2.3728639).

But, despite all these races and victories, it becomes clear that in the case of this approach, the law of diminishing returns, or diminishing returns, operates. Most likely, the improvement of Alman and Williams almost completely exhausted the possibilities of the laser method, but did not allow achieving the final theoretical goal.

“It’s unlikely that you can get anywhere near the second degree using this family of methods,” Umans said.

This will require the discovery of new methods and a strong belief that it is possible at all.

Williams recalls one of his conversations with Strassen about this: “I asked him if he thought it was possible to get the second degree for matrix multiplication, and he replied:“ No, no, no, never! ”.