Running inference on Nvidia Triton Inference Server

There are so many neural network inference frameworks around that it is hard to know which one is best for you. I decided to implement the same task on several different technologies. That is how this repository was born.

Task

Since the goal of the project is inference, I decided not to train anything from scratch and took ready-made models instead.

I wanted a task that met the following requirements:

It is not too simple (more than a single model), but not too complicated either;

It contains neural networks trained for different tasks (for example, one network for detection and one for segmentation);

It has an implementation in PyTorch (because I know it).

I settled on recognizing Russian license plates. The models were taken from this repository.

The license plate recognition pipeline is as follows:

License plates are detected with YOLOv5;

The cropped plates are passed through a Spatial Transformer Network (STN) for alignment;

The plate text is recognized with LPR-net.

I will not dwell on how the networks work (links to them are here for those who are interested).

Neural networks

Since several inference implementations are planned, the code for the networks was moved to a separate package, from which the networks are imported.

Poetry was chosen as the dependency manager. I want to be able to rely on this project when implementing other inference setups, so it has to run just as well in a month or in a year. Poetry pins all dependencies (both direct and transitive) in a special file, poetry.lock, which gives reproducibility.

The weights of the neural networks were saved directly in the repository because:

I did not want to upload them somewhere and then keep making sure they are not accidentally deleted;

It is easier to reproduce this way (no need to download something and then work out which name to put in which folder);

They are not that large.

The tests show how to use them.

Framework

Nvidia Triton Inference Server (hereinafter Triton) is used for inference.

I would say that Triton aims to maximize the utilization of your hardware:

Allows you to transfer data between models without copying;

Does not load models that are not in use;

Uses shared memory to receive data and return responses;

And more.

The main disadvantages, in my opinion:

GPU inference works only on Nvidia graphics cards;

Designed to work on one machine. Triton does not expect you to run some models on one physical machine and some on another;

The documentation consists of READMEs scattered across GitHub, some of which are duplicated on the website.

Converting Models

Pytorch Models

To use Triton you need to define a model repository (model_repository). It is a folder with a subfolder for each model. The models can then be combined into a computation graph that describes how data passes between them.

The model folder should contain the configuration of the model and its weights.

Triton supports a large number of network training frameworks (backends). We will need PyTorch and Python.

The PyTorch backend lets you run TorchScript models. I converted LPR-net and STN to it.

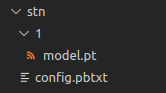

Let's walk through the conversion using STN as an example:

1. Convert to TorchScript.

```python
# Example input with the shape STN expects: (batch, channels, height, width)
dummy_input = torch.randn(1, 3, 24, 94, device=device)
model = load_stn(settings.STN.WEIGHTS, device)
# Tracing records the operations executed on the example input
traced_model = torch.jit.trace(model, [dummy_input])
```

2. Save the model to the folder for its version. Triton wants every model to live in its own folder, with a subfolder per version number. You can then send requests to a specific version. The same mechanism also lets you swap model versions directly in production.

```python
model_path = (
    Path(__file__).resolve().parents[1]
    / "model_repository"
    / "stn"
    / "1"
    / "model.pt"
)
traced_model.save(model_path)
```

3. Write the configuration. The main things to define are the backend, the maximum batch size (so that Triton can process batches), and the model's inputs and outputs. In our case we get:

```
backend: "pytorch"
max_batch_size: 32

input [
  {
    name: "input__0"
    data_type: TYPE_FP32
    dims: [ 3, 24, 94 ]
  }
]
output [
  {
    name: "output__0"
    data_type: TYPE_FP32
    dims: [ 3, 24, 94 ]
  }
]
```

Note the double underscores in the input and output names. This is the special naming format of the PyTorch backend: the number after the underscores is the position of the tensor.
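To make the naming format concrete, here is a minimal sketch of a check that could be run over the names before writing them into the configuration. The helper names `follows_pytorch_naming` and `tensor_index` are made up for this illustration, and the regular expression is my reading of the `<name>__<index>` format, not code taken from Triton.

```python
import re

# Assumed reading of the format: any name, a double underscore, then an index.
_IO_NAME = re.compile(r"^(.+)__(\d+)$")


def follows_pytorch_naming(name: str) -> bool:
    """Return True if the name looks like <name>__<index>, e.g. input__0."""
    return _IO_NAME.match(name) is not None


def tensor_index(name: str) -> int:
    """Return the positional index encoded after the double underscore."""
    match = _IO_NAME.match(name)
    if match is None:
        raise ValueError(f"{name!r} does not follow <name>__<index>")
    return int(match.group(2))


names = ["input__0", "output__0", "output__1", "output_1"]
checked = {name: follows_pytorch_naming(name) for name in names}
print(checked)
```

Only the last name, with a single underscore, is rejected.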

As a result, we get a model folder: stn contains the configuration file (config.pbtxt) and the version folder 1 with model.pt.
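The resulting layout can be sketched in a few lines of Python. The helper names `create_model_dir` and `list_tree` are made up for this illustration, and the files are empty placeholders created in a temporary directory, not a real model.

```python
import tempfile
from pathlib import Path
from typing import List


def create_model_dir(repo: Path, name: str, version: int, model_file: str) -> Path:
    """Create <repo>/<name>/config.pbtxt and <repo>/<name>/<version>/<model_file>."""
    version_dir = repo / name / str(version)
    version_dir.mkdir(parents=True, exist_ok=True)
    (repo / name / "config.pbtxt").touch()  # placeholder for the configuration
    (version_dir / model_file).touch()      # placeholder for the weights
    return repo / name


def list_tree(repo: Path) -> List[str]:
    """Return every file under repo as a sorted, POSIX-style relative path."""
    return sorted(p.relative_to(repo).as_posix() for p in repo.rglob("*") if p.is_file())


with tempfile.TemporaryDirectory() as tmp:
    repo = Path(tmp) / "model_repository"
    create_model_dir(repo, "stn", 1, "model.pt")
    tree = list_tree(repo)

print(tree)
```

A Python backend model would use the same layout, with model.py in place of model.pt.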

Python Models

If for some reason the conversion fails, or you need to run something other than a model, there is the Python backend. In our case, yolo has extra logic built in that is triggered when a numpy array or torch tensor is passed to it. To avoid writing additional pre- and post-processing, the Python backend was used.

The folder structure is the same as in the previous case, but instead of weights there is a model.py file. It must implement the TritonPythonModel class. The model is created during initialization:

```python
def initialize(self, args):
    # Triton passes the model configuration as a JSON string
    self.model_config = json.loads(args["model_config"])
    # Load YOLO once, when Triton loads the model
    self.model = load_yolo(
        settings.YOLO.WEIGHTS, settings.YOLO.CONFIDENCE, torch.device("cuda")
    )
```

For each request the model receives, predictions are computed and a response is built:

```python
def execute(
    self, requests: List[pb_utils.InferenceRequest]
) -> List[pb_utils.InferenceResponse]:
    responses = []
    for request in requests:
        image = pb_utils.get_input_tensor_by_name(request, "input__0").as_numpy()
        image = prepare_detection_input(image)
        # Detect the plates and get the boxes as a DataFrame
        detection = self.model(image, size=settings.YOLO.PREDICT_SIZE)
        df_results = detection.pandas().xyxy[0]
        # Crop the plates and prepare them for the next networks
        img_plates = prepare_recognition_input(
            df_results, image, return_torch=False
        )
        out_tensor_0 = pb_utils.Tensor(
            "output__0", img_plates.astype(self.output0_dtype)
        )
        out_tensor_1 = pb_utils.Tensor(
            "output__1",
            df_results[["xmin", "ymin", "xmax", "ymax"]]
            .to_numpy()
            .astype(self.output1_dtype),
        )
        inference_response = pb_utils.InferenceResponse(
            output_tensors=[out_tensor_0, out_tensor_1]
        )
        # One response per request, in the same order
        responses.append(inference_response)
    return responses
```

For this to work, the packages must be available to the interpreter that Triton uses to run models on the Python backend.

The Triton Docker images use Python 3.8. If you need a different version, you have to compile the Python backend for it yourself. I took the simpler route and used 3.8 🌝.

Packages can be installed with plain pip when building your image. I never managed to get Poetry to install packages into the global Python, so I hacked around it a bit: I asked Poetry to export the dependencies to requirements.txt and then installed them with pip.

If pulling the Triton images fails with a 401 Unauthorized error, log in to nvcr.io.

Next comes the configuration. Here there are two outputs. The batch size is set to 0 because the preprocessing for the networks that follow yolo does not support batches.

Note that with max_batch_size: 0 you must specify the batch dimension explicitly.
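The difference between the two batching modes can be sketched as a small function. The name `full_shape` is made up for this illustration. It only mirrors how the shape of a tensor follows from max_batch_size and dims and is not part of Triton.

```python
from typing import List, Optional


def full_shape(max_batch_size: int, dims: List[int], batch: Optional[int] = None) -> List[int]:
    """Shape of the tensor that is actually sent to or returned by a model.

    With max_batch_size > 0 the batch dimension is added in front of dims.
    With max_batch_size == 0 dims already describes the complete shape.
    """
    if max_batch_size > 0:
        if batch is None or not 1 <= batch <= max_batch_size:
            raise ValueError("batch must be between 1 and max_batch_size")
        return [batch, *dims]
    return list(dims)


# stn: batching enabled, so dims describe a single plate
stn_shape = full_shape(32, [3, 24, 94], batch=4)
# yolo: batching disabled, so the number of plates (-1) is written in dims
yolo_shape = full_shape(0, [-1, 3, 24, 94])
print(stn_shape, yolo_shape)
```

The yolo configuration that follows is the max_batch_size: 0 case, which is why its output dims carry the leading -1 themselves.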

```
backend: "python"
max_batch_size: 0

input [
  {
    name: "input__0"
    data_type: TYPE_UINT8
    dims: [ -1, -1, 3 ]
  }
]
output [
  {
    name: "output__0"
    data_type: TYPE_FP32
    dims: [ -1, 3, 24, 94 ]
  },
  {
    name: "output__1"
    data_type: TYPE_FP32
    dims: [ -1, 4 ]
  }
]
```

Calculation graph

The processing pipeline applies several models one after another. To define the processing logic, Triton offers Ensembling and Business Logic Scripting (BLS).

The first defines the logic through configs and suits simple, straightforward cases (for example, the STN and LPR-net models could be combined into one such pipeline). The second uses Python code and suits complex cases (with loops, conditional exits and so on).

BLS was chosen because processing has to stop if the detector finds no plates, and because the LPR-net outputs need post-processing to obtain the plate text.
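To give an idea of what such post-processing can look like, here is a minimal sketch of greedy CTC decoding, a common way to turn per-frame scores into text. This is an assumption on my part: the decoding actually used in the repository is not shown here, and the function name, the three-character alphabet and the blank index are all made up for the example.

```python
from typing import List, Optional, Sequence


def greedy_ctc_decode(frame_scores: Sequence[Sequence[float]], alphabet: str, blank: int) -> str:
    """Pick the best class per frame, collapse repeats, then drop blanks."""
    best = [max(range(len(scores)), key=scores.__getitem__) for scores in frame_scores]
    chars: List[str] = []
    previous: Optional[int] = None
    for index in best:
        # A class is emitted only when it changes and is not the blank class
        if index != previous and index != blank:
            chars.append(alphabet[index])
        previous = index
    return "".join(chars)


def one_hot(index: int, size: int = 4) -> List[float]:
    """Toy score vector with all the weight on one class."""
    return [1.0 if i == index else 0.0 for i in range(size)]


# Hypothetical alphabet "A17" (classes 0..2), class 3 is the blank
frames = [one_hot(i) for i in [0, 0, 3, 1, 1, 3, 1, 2]]
decoded = greedy_ctc_decode(frames, alphabet="A17", blank=3)
print(decoded)
```

The blank between the two runs of class 1 is what lets a repeated character survive the collapsing step.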

For Triton, our logic is just another model on the Python backend.

In it, we pass data from model to model in sequence. If the detector finds no plates, processing stops.

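```python
# Step 1: detect the plates and crop them
cropped_images, coordinates = self.predict(
    model_name="yolo",
    ...
)
num_plates = from_dlpack(coordinates.to_dlpack()).shape[0]
if num_plates == 0:
    # No plates found: skip the remaining models for this request
    ...
    continue
# Step 2: align the cropped plates
(plate_features,) = self.predict(
    model_name="stn",
    ...
)
# Step 3: recognize the text
(text_features,) = self.predict(
    model_name="lprnet",
    ...
)
```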

To pass data between models without unnecessary copies between the GPU and the CPU, use DLPack. In our case it converts torch tensors to Triton tensors and back.
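The control flow of this pipeline can be tried out without Triton. In the sketch below the three models are replaced by plain Python stubs that record when they are called. All names (`recognize_plates`, the `fake_*` functions) are made up for the illustration, and the real model receives and returns Triton tensors rather than lists.

```python
from typing import Callable, List, Tuple

Box = Tuple[float, float, float, float]


def recognize_plates(
    image: object,
    detect: Callable,
    align: Callable,
    read: Callable,
) -> Tuple[List[Box], List[str]]:
    """Run detection, then alignment and recognition only if plates were found."""
    crops, boxes = detect(image)
    if len(boxes) == 0:
        return [], []  # early exit: the other two models are never called
    aligned = align(crops)
    texts = read(aligned)
    return boxes, texts


calls: List[str] = []


def fake_detect_none(image):
    calls.append("yolo")
    return [], []


def fake_detect_one(image):
    calls.append("yolo")
    return ["crop"], [(0.0, 0.0, 10.0, 5.0)]


def fake_align(crops):
    calls.append("stn")
    return crops


def fake_read(crops):
    calls.append("lprnet")
    return ["A117"] * len(crops)


empty = recognize_plates("img", fake_detect_none, fake_align, fake_read)
calls_when_empty = list(calls)
calls.clear()
found = recognize_plates("img", fake_detect_one, fake_align, fake_read)
calls_when_found = list(calls)
print(calls_when_empty, calls_when_found)
```

This kind of conditional exit is what a config-only ensemble cannot express, which is the reason for using BLS here.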

The pipeline model also gets the standard configuration of a Python backend model:

```
backend: "python"
max_batch_size: 0

input [
  {
    name: "input__0"
    data_type: TYPE_UINT8
    dims: [ -1, -1, 3 ]
  }
]
output [
  {
    name: "coordinates"
    data_type: TYPE_FP32
    dims: [ -1, 4 ]
  },
  {
    name: "texts"
    data_type: TYPE_STRING
    dims: [ -1, 1 ]
  }
]
```

Triton uses shared memory for the Python backend and for passing data to itself. If you run it in Kubernetes, it will complain that the shared memory is missing. A workaround is to mount a volume at the path where this shared memory should be (typically /dev/shm).

Using Models

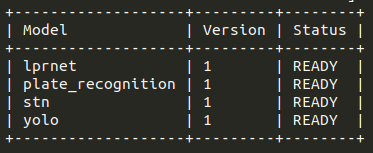

The models are in place. Triton reports that everything has been loaded successfully.

To run the pipeline, send a request to Triton for the plate_recognition model (this is the name of the model that defines our calculation graph, the license plate recognition pipeline). You can write the requests yourself over HTTP or gRPC, or use the ready-made tritonclient library. Examples can be found in the tests.
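For the hand-written HTTP route, the request can be sketched as below. The URL paths and JSON fields follow my understanding of the KServe v2 inference protocol that Triton implements. The helper names `infer_path` and `build_infer_body` are made up, and the image is a tiny nested list instead of a real photo. Nothing is sent over the network here.

```python
import json
from typing import List, Optional


def infer_path(model_name: str, version: Optional[str] = None) -> str:
    """URL path of the inference endpoint, optionally pinned to a model version."""
    if version is None:
        return f"/v2/models/{model_name}/infer"
    return f"/v2/models/{model_name}/versions/{version}/infer"


def build_infer_body(image: List[List[List[int]]]) -> str:
    """JSON body for a height x width x 3 uint8 image given as nested lists."""
    height, width, channels = len(image), len(image[0]), len(image[0][0])
    # Tensor data is sent flattened in row-major order
    flat = [value for row in image for pixel in row for value in pixel]
    body = {
        "inputs": [
            {
                "name": "input__0",
                "shape": [height, width, channels],
                "datatype": "UINT8",
                "data": flat,
            }
        ],
        "outputs": [{"name": "coordinates"}, {"name": "texts"}],
    }
    return json.dumps(body)


tiny_image = [[[0, 0, 0], [255, 255, 255]]]  # 1 x 2 pixels, 3 channels
path = infer_path("plate_recognition")
body = json.loads(build_infer_body(tiny_image))
print(path, body["inputs"][0]["shape"])
```

The tritonclient version of the same request is shown next.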

```python
import cv2
import tritonclient.http as httpclient

triton_client = httpclient.InferenceServerClient(url="localhost:8000")
model_name = "plate_recognition"

# img_path is the path to the image to recognize
image = cv2.imread(img_path)

inputs, outputs = [], []
inputs.append(httpclient.InferInput("input__0", image.shape, "UINT8"))
inputs[0].set_data_from_numpy(image)
outputs.append(httpclient.InferRequestedOutput("coordinates", binary_data=False))
outputs.append(httpclient.InferRequestedOutput("texts", binary_data=False))

results = triton_client.infer(
    model_name=model_name,
    inputs=inputs,
    outputs=outputs,
)
coordinates = results.as_numpy("coordinates")
texts = results.as_numpy("texts")
```

Conclusion

Well, the inference is written. This example is not so simple as to be useless, but it is also not complex enough to cover all the features of this inference framework.

I advise you to look in the documentation about:

The Triton repositories also have an examples section (for example, for the Python backend) with many useful samples.

Next up is an implementation of inference on TorchServe. In the meantime, subscribe to my Telegram channel, where I talk about neural networks with an emphasis on serving.