Machine learning and the main questions of the world, the universe and all that

This post is based on podcast episode “Let’s get some air!” In it, I talked with Andrey Ustyuzhanin, head of the machine learning laboratory “Lambda” at the HSE.

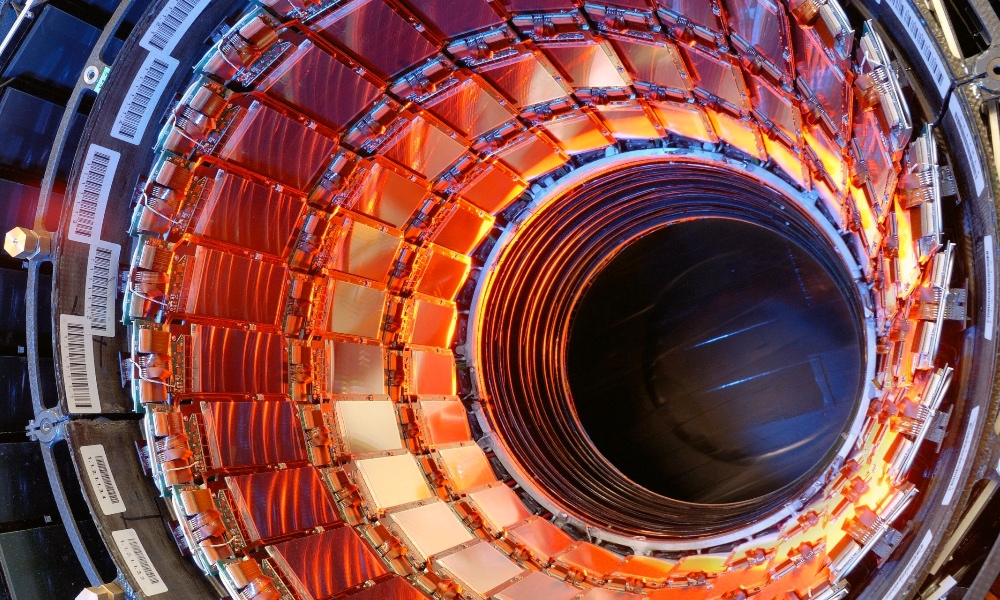

Lambda labs have been applying machine learning to physics problems long before it became mainstream. The first joint projects began back in 2011, and a year later Yandex began cooperation with CERN. The company first connected to the LHCb experiment (this is the name of the smallest detector of the Large Hadron Collider, which studies the asymmetry of matter and antimatter; in 2015, pentaquarks were discovered with its help). The main focus of the HSE-Yandex joint laboratory has become the application of machine learning methods in solving fundamental problems of natural sciences. We decided to start with physics and it was right – AI turned out to be extremely useful there. Now the Lambda employees are working with several physical experiments at once: their methods are used in the analysis of data from the Fermi telescope, as well as in the project to create a new generation of instruments for space exploration – the Cherenkov detector, or Cherenkov telescope array.

In addition, it became clear that if you know how to apply machine learning in physics, then chemistry or biology is literally at your fingertips. So, in 2019, Lambda led a joint project with the HSE Population Genomics Laboratory, previously with the neurobiological center, processing data obtained using the brain encephalogram and studying the characteristics of a person’s emotional state.

Data Science and the Large Hadron Collider

Experiments at CERN are the largest project in the resumes of the guys from Lambda, and maybe in general in the history of science. The main task for which the LHC was built was to find the Higgs boson – the particle responsible for the gravitational interaction. British physicist Peter Higgs theoretically substantiated the existence of the boson, which was named after him, back in the sixties. The problem was finding such a boson in an experiment. Actually, it is much easier to describe such an experiment than to stage it: the idea is to accelerate and collide beams of protons. In a head-on collision at near-light speeds, protons fall apart into a bunch of particles. The experimenter only has to “catch” the necessary particles and try to measure their properties. Complex sensitive elements are built around the collision points, somewhat similar to the matrix of modern cameras, only they react to single elementary particles and try to reconstruct a three-dimensional image of the collision.

In addition to purely technical problems, computational difficulties arise in setting up such an experiment. Detectors generate a lot of data. LOTS OF. To store something potentially new and interesting and not waste time on particles that the detector has seen millions of times, a fast filtering system is needed. She uses machine learning. However, after the data is received, it must be processed: restore the trajectory of motion, simulate the event that generated the registered particle. Now machine learning is used at almost every stage. work with information in the LHC – from its detection to filtration, processing and analysis.

How to do scientific closure?

Academician Kapitsa is credited with the aphorism: “when the theory coincides with the experiment, this is no longer a discovery, but a closure.” In a sense, the LHC deals precisely with “closings”. The researchers have some kind of model for what they are looking for, some kind of theoretical description of the processes that the experiment is trying to reproduce. Sometimes, as in the case of the Higgs boson, the theory claims that some object exists, but no one has been able to register it before. In other studies, the existence of an object is established, but it is necessary to measure its properties, which have not been measured before. Over the years of the LHC’s operation, almost all the experiments performed fell within the framework of the standard model. This in itself is amazing and, in a sense, is a separate discovery: the standard model, formulated back in the seventies of the twentieth century, is suddenly a fairly accurate description of the microworld.

When it comes to quickly processing a huge data stream that needs to be correlated with some model description, machine learning is indispensable. Detectors located in the LHC collect information about what happens during a collision of particles, and data science allows you to select from a large cloud the particles that seem most interesting, analyze the information received and calculate the reliability of the hypothesis being tested. You can get several different answers in the output:

- This is something we have already seen, but not what we were looking for. The harsh everyday life of the collider worker continues;

- This is something new, but not what we were looking for. Let’s take a closer look at this event;

- This is what we were looking for! Hooray! Closing!

Citizen science

Often the most difficult part of the task is to choose the right starting values for the parameters that would allow simulating what is happening during the experiment. If the model is properly initialized, it will learn and improve the final accuracy of the calculations. If the initial parameters are poorly selected, then machine learning will be useless. These kinds of problems regularly arise in problems of quantum physics, for example, if you want to simulate qubit quantum computer.

I didn’t believe in the existence of intuition until I got to Helsinki for quantum Game Jam… The event itself is worthy of a separate post, its essence is that several development teams over the weekend assemble prototypes of games with quantum effects, using, for example, IBM Quantum Computer API… One of the organizers of the event, professor Sabrina Maniscalco told how games with quantum effects can be useful to modern science. It turned out that people by trial and error are good at choosing the approximate parameters of the initialization of a quantum system, and machine learning models can then improve this solution. At the same time, people themselves can not go into the details of the quantum system, the behavior of which is simulated by the game for them. They just try to throw a vegetable into a basket or hit a target, just vegetables and arrows on the mobile screen behave strangely.

This is one example of Citizen science. This movement is especially popular in Scandinavia. Its essence is that since you, a scientist, live on taxpayers’ money, so it would be nice to explain to citizens (preferably using intelligible examples) what exactly you do and why you need it. If you can involve people in your work, that’s great. The guys of “Lambda” also popularize their work: they maintain instagram, adapt their work to understand students who are just beginning to understand machine learning and can apply it to problems from physics, astrophysics or other sciences.

Life after the collider

Machine learning benefits scientists not only at CERN. For example, it can be used to search for exoplanets: when the planet crosses the straight line connecting the star around which it revolves and the earth from which we look at this star, then the luminosity of the star decreases slightly. The resolving power of our telescopes is not enough to “see” the planet, but we can notice a decrease in the brightness of a particular star. If we run through the data analysis algorithm hundreds of hours of our observations of the starry sky, then by a periodic decrease in luminosity, the algorithm will find the stars around which the planets revolve.

From tasks of the “planetary” scale, one can go to the “galactic” scale. The fact is that the universe is expanding, and with acceleration. In order for such a behavior of the universe to converge with our existing ideas about how the world works, a large supply of mass must be hidden somewhere in the universe. It is this not found matter that is called dark. Moreover, according to calculations, by this time, mankind has studied information about only 5% of the mass of the Universe.

We see that the stars at the periphery rotate at about the same angular velocity as the stars at the center of galaxies, which contradicts what we know about physics. You can think of the visible stars as chocolate pieces in a cookie. There are huge cookies, there is flour, dough, sugar and so on, it all revolves, and we see only chocolate pieces, but we do not see the dough. Dark matter is some kind of strange substance that just gravitationally holds the stars, does not allow them to lag behind the rest of the rotation of the galaxy.

The question is how much dark matter is accumulated in which areas and how much the structure of the galaxy corresponds to the current concept of the structure of the Universe. The guys are now using machine learning algorithms to estimate the masses of a cluster of galaxies. If we correctly assess the distribution of mass in the universe, we can better understand what happened in the first moments after the Big Bang. Perhaps this will give us a hint of what dark matter is and where to look for it.

Let’s get some air! Is a podcast for techno-optimists in which professionals share their personal experiences. Quantum computing, genetics, IT in the regions, artificial intelligence. In general, join:

- Data Scientist Profession

- Data Analyst profession

- Machine Learning Course

- Course “Mathematics and Machine Learning for Data Science”

- Java developer profession

- JAVA QA engineer

- Frontend developer profession

- Profession Ethical hacker

- C ++ developer profession

- Profession Unity Game Developer

- Profession Web developer

- The profession of iOS developer from scratch

- Profession Android developer from scratch

COURSES

- Data Engineering Course

- Python for Web Development Course

- Course “Algorithms and Data Structures”

- Data Analytics Course

- DevOps course

Let’s get some air! Is a podcast for techno-optimists in which professionals share their personal experiences. Quantum computing, genetics, IT in the regions, artificial intelligence. In general, join:

Let’s get some air! Is a podcast for techno-optimists in which professionals share their personal experiences. Quantum computing, genetics, IT in the regions, artificial intelligence. In general, join: