Linux is the best OS

Disclaimer

I am new to information technology, and even newer to writing structured texts, so this is not a guide. Everything here is a compilation of material I have studied, combined with my own thoughts.

The text is aimed primarily at beginners like me, so if I have made factual errors anywhere, please point them out in the comments; I welcome constructive criticism.

This text is also available as a video. Links to all my other sites are in my Telegram channel.

Table of contents

Introduction

What is OS?

History of Windows

History of Unix

History of GNU/Linux

Free Software vs. Proprietary

Distributions and which one to choose

Graphical environment

Conclusion

Sources

Introduction

Hello! Here I want to talk about why GNU/Linux is the best operating system at the moment and why you urgently need to switch to it from Windows.

We'll define an operating system, go over the main OS families and briefly touch on their history, look at the concept of free software, and choose the ideal distribution.

What is OS?

Let's start from the very beginning. What is an operating system? We will not go into details, since this is a broad topic; we will only touch on the main points.

The OS is one of the most important and complex programs on a computer. It performs several key tasks:

Starting and terminating programs.

Managing all peripheral devices and giving other programs simplified access to them.

For example, a user program can open a file for reading by specifying only its name, read its contents into RAM, and then close the file. The OS provides all of these capabilities and hides their technical implementation.

Roughly speaking, the OS is a layer between application software and the computer hardware.
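The file example above can be reproduced from the shell. This is a minimal bash sketch, and the function name `read_first_line` is made up for illustration: bash asks the kernel to open the file, read from it and close it, while the script only ever deals with a file name and a descriptor number.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the first line of a file using explicit
# open/read/close steps, each of which bash delegates to the kernel.
read_first_line() {
  local path="$1" line=""
  [ -r "$path" ] || return 1
  # open(): bash asks the kernel to open the file and binds it to descriptor 3
  exec 3<"$path"
  # read(): the kernel copies the file's bytes into this process's memory (RAM)
  IFS= read -r line <&3
  # close(): release descriptor 3 so the kernel can free its resources
  exec 3<&-
  printf '%s\n' "$line"
}

demo="$(mktemp)"
printf 'hello\nworld\n' > "$demo"
read_first_line "$demo"   # prints: hello
rm -f "$demo"
```

Nowhere does the script touch the disk hardware directly; that is exactly the layer the OS provides.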

The first operating systems began to appear in the mid-1950s, but the most popular systems still in use today belong to two main families: Windows and Unix.

History of Windows

In 1975, two friends, Paul Allen and Bill Gates (then a Harvard student), developed a BASIC interpreter for the Altair 8800 computer. They then signed a license agreement with MITS, the manufacturer of this PC, to ship their BASIC interpreter as part of the Altair software. This is how Microsoft came into being.

In 1980, Microsoft agreed to supply IBM with an operating system for the new IBM PC. To this end, Microsoft hired Tim Paterson in 1981 to finish adapting his 86-DOS to the IBM PC prototype. The company later bought the rights to 86-DOS and renamed it MS-DOS. IBM, meanwhile, shipped a licensed version of MS-DOS called PC DOS.

Fun fact: in 1984, Microsoft was also developing software for the Apple Macintosh.

The main innovation of the Macintosh at that time was its graphical user interface (GUI), which greatly simplified working with a computer for ordinary users. Inspired by this idea, Bill Gates decided to create a graphical add-on for MS-DOS. The first version of Microsoft Windows was released at the end of 1985; it was not yet a full-fledged operating system, only a graphical shell for MS-DOS.

From there, Windows developed along two lines: Windows based on MS-DOS and Windows based on NT (New Technology).

Windows based on MS-DOS

This branch includes Windows 95, 98 and Me. Unlike Windows NT, these were not full-fledged multi-user, multitasking systems. For example, their user interface and graphics subsystem remained largely 16-bit, which hurt stability and performance: a problem in a single 16-bit application could freeze the entire system.

Windows based on NT

The second branch includes systems with the NT (New Technology) designation, such as Windows 10 (Windows NT 10.0) and Windows 11 (Windows NT 10.0, build 22000 and later), as well as server versions, for example Windows Server 2022 (Windows NT 10.0, build 20348). The first version in this subfamily, Windows NT 3.1, was released in 1993. These systems are fully 32-bit or 64-bit and do not depend on MS-DOS even to boot. They run on x86, x86-64 and ARM processors.

History of Unix

Another interesting chain of events began in the 1960s, when a consortium of General Electric, MIT and Bell Labs (a division of AT&T) developed an OS called MULTICS. Bell Labs, where Ken Thompson worked, later left the project, and Thompson went on to write his own OS for the PDP-7 computer. Together with Brian Kernighan, the system was named Unics, a play on MULTICS; the final name became Unix.

Later, together with Dennis Ritchie, Thompson ported the system to the more advanced PDP-11 minicomputer. Then the idea arose to rewrite the OS in a higher-level programming language. An attempt to use the “B” language was unsuccessful, so Ritchie proposed extending it, which produced a new language: C.

In 1973, Unix was rewritten in C. This was a breakthrough, since many believed an operating system could not be written in a high-level language. This step shaped the future of the industry: C and Unix remain relevant to this day.

In 1977, Dennis Ritchie and Stephen Johnson ported Unix to a new architecture, the Interdata 8/32. Johnson's Portable C Compiler (pcc) appeared around the same time.

Because of antitrust restrictions, AT&T could not enter the computer business, so it distributed Unix on a non-commercial basis, licensing the code to educational institutions. One of them was the University of California, Berkeley, where Bill Joy created one of the most popular Unix branches, BSD, in 1977.

AT&T began commercializing Unix in the early 1980s with Unix System III. In 1984, the antitrust restrictions on the company were lifted, which ended the free distribution of Unix source code. This led to legal disputes between AT&T and BSDi that lasted until 1993, when AT&T sold its Unix division to Novell, which then settled the dispute with Berkeley.

While the Unix developers were busy with infighting, the market was flooded with cheap Intel-based computers running Windows.

History of GNU/Linux

When the commercialization of Unix began in 1983, Richard Stallman started developing his own Unix-like OS from scratch. He founded the Free Software Foundation and published his ideological manifesto. The project was named GNU, a recursive acronym for “GNU's Not Unix”.

Initially it was a development environment with the gcc compiler and a set of utilities: gdb (debugger), glibc (the GNU C library), coreutils (basic utilities such as ls, rm and cat), the bash shell and a number of other programs that have become standard on such systems.

Work on the OS kernel, GNU Hurd, was left for last. Instead of a monolithic kernel, the developers wanted a set of small server programs running on top of a microkernel and communicating with each other asynchronously. This design made bugs much harder to track down, so development dragged on.

Stallman's supporters had already written a lot of free software, but without a free kernel, the goal of a completely free OS was still far off.

In 1991, Finnish student Linus Torvalds began developing a monolithic Unix-like kernel called Linux for the i386 platform. Inspired by SunOS, he wrote his own kernel and published its source code, which attracted many volunteers.

This kernel became the missing element for the GNU project, which made it possible to create a completely free OS – GNU/Linux.

As Linus himself has noted, the legal disputes between AT&T and Berkeley played an important role in the rise of Linux, because they held back the spread of BSD on the i386 platform.

GNU/Linux is the most popular Unix-like operating system and is available in many distributions, including commercial ones. Well-known commercial Unix-like systems include macOS, which is based on BSD, and Android, which is based on the Linux kernel.

Free Software vs. Proprietary

Linus Torvalds decided to license the Linux kernel under the GNU GPL, the license created by Richard Stallman. Let's figure out what this license is and what Free Software means.

The Free Software movement began in 1983 with Richard Stallman, who founded the Free Software Foundation (FSF) to promote its ideas to the public.

What is Free Software? In English, the word “free” means not only “free of charge” but also “free” as in freedom of use. Software that costs nothing is not necessarily Free: it may still be closed and proprietary, that is, the private property of a company.

Here are the four main principles of Free Software:

Freedom to run the program for any purpose.

Freedom to study and modify the source code to make the program fit your needs.

If you are not a programmer, you can team up with a developer or rely on collective oversight: other people can join the project and write documentation that makes it easier to understand.

Freedom to distribute copies of the program, whether for free or for money.

This means that Free Software can be used for commercial purposes, for example by providing technical support services.

Freedom to distribute modified versions of the program.

The GNU GPL license puts these principles into practice, and they apply to all software released under it.

There is also a more widespread term: Open Source. It covers the second principle of Free Software but is not limited to it. Eric Raymond and Bruce Perens popularized the term as an alternative to “Free Software”, because the word “free” can be misleading and suggest only “free of charge”.

However, according to Stallman, Open Source does not always mean freedom. One example is UnRAR, a program for unpacking RAR archives: its source code is open, but the license prohibits using it to create RAR-compatible archivers. Companies such as Microsoft often use the term Open Source while avoiding the concept of Free Software.

Why should you switch to Free Software?

The main reason is that proprietary programs are closed-source. In operating systems such as Windows or macOS, users cannot see the source code and cannot know what happens when a program runs. You have to take the developers' word that your data is protected. However, proprietary programs may collect your data and use it for business purposes. One form of such unauthorized access is a backdoor: a means of remote control or data access deliberately built in by the developers. An example is a mandatory update in Windows, which is installed regardless of the user's wishes.

For these reasons, proprietary software can be considered potentially malicious.

Although Free Software has its advantages, Stallman's idea may seem too utopian in today's world. Personally, I share the view of Bruce Perens, one of the leaders of the Debian project, who believes that free and proprietary software should coexist, with free software being not just an alternative but the better choice.

I have two more reasons of my own for this approach:

Freedom to choose any software, be it free or proprietary.

For example, many GNU/Linux distributions distribute proprietary software through official repositories, leaving the choice to the user.

Competition between different kinds of software drives technological progress and increases the number of alternative programs.

Distributions and which one to choose

The GNU/Linux operating system is free software and, in my opinion, remains the best choice among the operating systems available. With GNU/Linux as the base, the next step is to choose a distribution.

A distribution is an assembled set of components, including:

The Linux kernel, along with pre-installed programs, utilities and libraries from the GNU project.

A package manager: a tool for installing, updating and uninstalling software.

An optional graphical shell.

Additional configuration and settings that vary by distribution.
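To see these components on a running system, a short bash sketch like the one below can help. It assumes a distribution that ships `/etc/os-release` (most current ones do) and only looks for the three package managers discussed in this article; `describe_system` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report the kernel, the distribution and the package
# manager. The body runs in a subshell ( ... ) so that sourcing
# /etc/os-release does not leak variables into the calling shell.
describe_system() (
  # Kernel name and release, as reported by the running kernel.
  echo "kernel: $(uname -sr)"
  # Most distributions describe themselves in /etc/os-release.
  if [ -r /etc/os-release ]; then
    . /etc/os-release
    echo "distribution: ${PRETTY_NAME:-${ID:-unknown}}"
  fi
  # Look for the package manager front ends covered in this article.
  for pm in apt dnf pacman; do
    if command -v "$pm" >/dev/null 2>&1; then
      echo "package manager: $pm"
      break
    fi
  done
)

describe_system
```

On an Arch system, for example, the last line should name pacman.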

Among the many distributions, three main families are worth a beginner's attention:

Debian

One of the very first and most important distributions. This family includes Debian itself and popular distributions based on it, such as Ubuntu, Linux Mint and Kali Linux.

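The usual package manager is apt (Advanced Packaging Tool), a front end built on top of the lower-level dpkg: dpkg installs individual .deb packages, while apt downloads packages from the repositories and resolves their dependencies.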

Debian has a mixed update model: it offers both stable releases and continuously updated branches (testing and unstable). Stable releases are the more popular and preferred option in these distributions. As a result, such systems contain a lot of software that is stable but outdated, and users have to decide what matters more to them: stability or up-to-date software.

Red Hat

One of the first companies to successfully commercialize free software, selling its distribution, Red Hat Enterprise Linux, by subscription.

For us, the most interesting distribution descended from Red Hat Linux is Fedora. It is developed by the Fedora Project with commercial support from Red Hat and its parent company IBM, and it serves as a testing ground for features expected to appear in Red Hat Enterprise Linux later.

Fedora uses the RPM package format (RPM originally stood for Red Hat Package Manager) and DNF (Dandified YUM) as the tool for managing these packages.

Fedora also follows its own release cycle, with a new version every 6 to 8 months, so its software is not as outdated as Debian's, though not the very latest either.

Arch Linux

Unlike Ubuntu or Fedora, Arch Linux is developed entirely by a non-profit community.

It uses the pacman package manager and a rolling-release update model: it continuously receives the latest software versions, which is why it can be less stable.

A distinctive feature of Arch is that it is installed as a minimal base system, which users customize to their own needs by installing only the components they actually require.
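For comparison, here are the usual commands for everyday tasks in the three families. The bash function below only prints commands rather than running them; the function name and the family and action labels are made up for this sketch, while the commands themselves are the standard ones for apt, dpkg, dnf, rpm and pacman. The `local` action shows the tool that installs a single package file you already have.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print (not run) the typical command for a task.
# Usage: pkg_cmd <debian|fedora|arch> <update|install|remove|local> [package]
pkg_cmd() {
  local family="$1" action="$2" pkg="${3:-}"
  case "$family:$action" in
    # Debian family: apt downloads packages and resolves dependencies ...
    debian:update)  echo "sudo apt update && sudo apt upgrade" ;;
    debian:install) echo "sudo apt install $pkg" ;;
    debian:remove)  echo "sudo apt remove $pkg" ;;
    # ... while dpkg installs one .deb file and does not fetch dependencies.
    debian:local)   echo "sudo dpkg -i $pkg" ;;
    # Fedora: dnf works on top of the RPM format; rpm is the low-level tool.
    fedora:update)  echo "sudo dnf upgrade" ;;
    fedora:install) echo "sudo dnf install $pkg" ;;
    fedora:remove)  echo "sudo dnf remove $pkg" ;;
    fedora:local)   echo "sudo rpm -i $pkg" ;;
    # Arch: pacman does both jobs; -Rs also removes dependencies nothing
    # else needs, and -U installs a package file (still resolving deps).
    arch:update)    echo "sudo pacman -Syu" ;;
    arch:install)   echo "sudo pacman -S $pkg" ;;
    arch:remove)    echo "sudo pacman -Rs $pkg" ;;
    arch:local)     echo "sudo pacman -U $pkg" ;;
    *) echo "unsupported: $family $action" >&2; return 1 ;;
  esac
}

for family in debian fedora arch; do
  pkg_cmd "$family" install htop
done
```

The loop prints `sudo apt install htop`, `sudo dnf install htop` and `sudo pacman -S htop`: the same task, three tools.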

What to choose?

I recommend Arch Linux for the following reasons:

Pragmatism: complete freedom of choice between free and proprietary software.

Flexibility of customization: installing and configuring the system is entirely up to the user.

Documentation: ArchWiki contains comprehensive instructions and recommendations.

To install it, you can use archinstall, an installer script written in Python, if you don't want to go into the details at first. But if you want to learn how GNU/Linux works, I recommend installing it from scratch.

Compared with other distributions, Arch stands out in the following ways:

Arch comes with the fewest pre-installed programs; its message is: build your system yourself.

Thanks to the rolling-release model, you get the latest software versions, so if you want to use your PC as a gaming machine, for example, Arch will give you that experience without much hassle.

AUR (Arch User Repository). This is a community-maintained repository of programs for Arch users, separate from the official repositories; it contains build scripts (PKGBUILDs) rather than ready-made packages. It offers a large selection of third-party programs contributed by users, but it also places responsibility on you: these packages are not tested as thoroughly as software from official sources, so use them at your own risk. Users can vote for their favorite packages, and many good programs from the AUR are eventually moved to the official repositories.

And, as mentioned above, Arch is fully supported by the community and independent of corporate decisions.
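The manual AUR workflow looks roughly like the bash sketch below. Each AUR entry is a Git repository holding a build script (PKGBUILD) rather than a ready-made package; `makepkg` builds it and hands the result to pacman. The function names are hypothetical, `makepkg` must be run as a normal user rather than root, and reading the PKGBUILD before building is exactly the responsibility mentioned above.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for building an AUR package by hand.

# Print the Git URL of an AUR package.
aur_url() {
  printf 'https://aur.archlinux.org/%s.git\n' "$1"
}

# Clone, review and build. The body runs in a subshell ( ... ) so the
# cd does not change the caller's working directory.
aur_install() (
  pkg="$1"
  git clone "$(aur_url "$pkg")" || exit 1
  cd "$pkg" || exit 1
  # Review the build script first: AUR packages are not vetted like
  # packages from the official repositories.
  "${PAGER:-less}" PKGBUILD
  # -s installs missing dependencies with pacman, -i installs the built package.
  makepkg -si
)
```

For example, `aur_install yay` would fetch and build yay, a popular helper that automates these steps.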

Graphical environment

An important aspect of choosing a distribution is the graphical environment. There are two main display systems: the Xorg server and the Wayland protocol. Wayland is more modern and secure, but it has not yet completely replaced Xorg.

Wayland may not work correctly with Nvidia graphics cards.

I use Hyprland, a Wayland compositor, and everything works fine on my AMD graphics card with two monitors.
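To check whether your current session runs on Wayland or Xorg, you can look at the environment variables the session sets. This bash sketch relies on `WAYLAND_DISPLAY` (set by Wayland compositors such as Hyprland for the programs they start) and `DISPLAY` (set for X11 clients); the function name is hypothetical. `WAYLAND_DISPLAY` is checked first because Wayland sessions usually set `DISPLAY` as well, for XWayland, the compatibility layer for X11 applications.

```bash
#!/usr/bin/env bash
# Hypothetical helper: guess the kind of graphical session from the
# environment inherited by the shell.
session_kind() {
  if [ -n "${WAYLAND_DISPLAY:-}" ]; then
    echo wayland   # a Wayland compositor is running
  elif [ -n "${DISPLAY:-}" ]; then
    echo x11       # an X server (Xorg) is reachable
  else
    echo none      # plain console, e.g. right after installing Arch
  fi
}

session_kind
```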

After installing Arch, you will only get a text console. To work with a graphical interface, you can install a desktop environment (DE) or a window manager (WM).

A desktop environment (DE) is a set of programs with a common graphical interface (for example, KDE Plasma or GNOME).

A window manager (WM) is a program that controls how windows are displayed. It can be part of a DE or work on its own.

If you take the simplest path, for example through archinstall, you will be asked to choose a desktop environment, but I do not recommend installing one, for the following reasons:

Unnecessary programs running in the background load the system (hello, Windows).

These programs often work poorly with each other and with the interface as a whole (even though you would expect maximum compatibility).

Such environments are very hard to modify, for example to replace the window manager, because everything is designed to be used as shipped, and further changes can lead to errors. Windows also has its own desktop environment, but you can only change what Microsoft allows, which is practically nothing.

The most interesting point, to me, is that probably all desktop environments use stacking window managers. This is justified as easing the transition from Windows, but it means they do not stray far from the Windows concept.

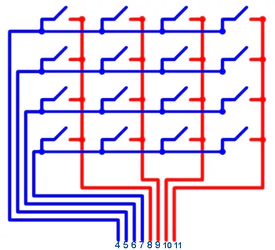

This brings us to the differences between window managers:

Stacking (floating) window managers follow the traditional desktop metaphor used in commercial operating systems like Windows and macOS. Windows behave like sheets of paper on a desk, stacked on top of and overlapping one another.

Tiling (frame) window managers arrange windows on the screen as tiles (frames) so that they do not overlap. They typically rely heavily on the keyboard for window management and have little or no mouse support. Tiling window managers may offer a set of standard layouts or let you define them manually.

There are also dynamic window managers that combine stacking and tiling modes.
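The stacking model can be pictured as an ordered list of windows from bottom to top: clicking a window moves it to the top of the pile. The bash sketch below models only that ordering; real window managers also track positions and sizes, and the window names and the `raise_window` function are made up.

```bash
#!/usr/bin/env bash
# Hypothetical model of a stacking window manager's z-order:
# index 0 is the bottom of the pile, the last element is on top.
stack=(terminal browser editor)

# Move the named window to the top, keeping the others in their order.
raise_window() {
  local target="$1" w
  local rest=()
  for w in "${stack[@]}"; do
    if [ "$w" != "$target" ]; then
      rest+=("$w")
    fi
  done
  stack=("${rest[@]}" "$target")
}

raise_window terminal
echo "${stack[@]}"   # bottom to top: browser editor terminal
```

Windows lower in the list stay partly hidden under those above them, which is the overlap that tiling managers avoid.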

I advise choosing a standalone window manager rather than a desktop environment for all the reasons mentioned above, and also because it lets you install a tiling window manager.

Here are the two main reasons tiling windows beat floating ones:

Work on the PC becomes much faster, since all interaction with the system can be done with keyboard shortcuts and by switching between virtual desktops.

Open windows occupy all the free space on the monitor, and when new ones are opened, the space is redistributed among them efficiently.
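To see how a tiling window manager fills the screen, here is a bash sketch of one common scheme, the master-stack layout used by dwm-style managers: the first window takes a share of the width, and the rest split the remaining column evenly. It is a generic illustration, not the algorithm of Hyprland or any other specific window manager; the function name and the percentage parameter are assumptions.

```bash
#!/usr/bin/env bash
# Hypothetical master-stack layout. Prints one "x y width height" line per
# window for a screen of the given size, using integer arithmetic only.
tile() {
  local sw="$1" sh="$2" n="$3" ratio="${4:-50}"   # ratio: master width in %
  local mw stack i y=0 h
  if (( n < 1 )); then return 0; fi
  if (( n == 1 )); then
    echo "0 0 $sw $sh"          # a single window takes the whole screen
    return 0
  fi
  mw=$(( sw * ratio / 100 ))
  echo "0 0 $mw $sh"            # master window: left part, full height
  stack=$(( n - 1 ))
  for (( i = 0; i < stack; i++ )); do
    # Each stack window gets an even share of the height still left,
    # so rounding never leaves a gap at the bottom.
    h=$(( (sh - y) / (stack - i) ))
    echo "$mw $y $(( sw - mw )) $h"
    y=$(( y + h ))
  done
}

tile 1920 1080 3
```

For three windows on a 1920x1080 screen this prints `0 0 960 1080`, `960 0 960 540` and `960 540 960 540`. Opening a fourth window simply reruns the layout with `n=4`, and the right-hand column is re-divided among three windows.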

The real strength of Unix-like systems, unlike Windows, lies in this keyboard-driven way of working. Since you will do a lot of things in a terminal emulator (which I recommend) rather than only in GUI programs, this style of system management is a big advantage.

Conclusion

A program that runs in a graphical environment and performs the functions of a terminal is called a terminal emulator.

For beginners this may seem somewhat complicated, but once you get a little used to it, you will see how much easier it is to work with the system through a terminal.

I use Alacritty myself, so I recommend it to you too. It is configured through a single, simple TOML file.
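As an example of that single file, the bash sketch below writes a small `alacritty.toml`. The keys shown (`size` under `[font]`, `padding` and `opacity` under `[window]`) exist in Alacritty's TOML format, used since version 0.13, but the values are only an example. The function name is hypothetical, and it takes the target directory as an argument and refuses to overwrite an existing file, so it cannot clobber your config by accident. Alacritty normally reads the file from `~/.config/alacritty/alacritty.toml`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write an example Alacritty config into directory "$1".
# Refuses to overwrite an existing file.
write_alacritty_config() {
  local dir="$1" file="$1/alacritty.toml"
  if [ -e "$file" ]; then
    echo "$file already exists, not overwriting" >&2
    return 1
  fi
  mkdir -p "$dir" || return 1
  cat > "$file" <<'EOF'
[font]
size = 12.0

[window]
padding = { x = 6, y = 6 }
opacity = 0.95
EOF
}

# For a real setup the target would be ~/.config/alacritty; a temporary
# directory keeps this example harmless.
demo_dir="$(mktemp -d)"
write_alacritty_config "$demo_dir" && cat "$demo_dir/alacritty.toml"
```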

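From a terminal emulator, you can update the whole system with one command. pacman needs root privileges for this, hence `sudo`; `-S` synchronizes packages, `-y` refreshes the package databases and `-u` upgrades installed packages:

```bash
sudo pacman -Syu
```

Installing an application also takes a single line:

```bash
sudo pacman -S firefox
```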

It is also worth learning the basic commands for working in the terminal and with pacman.

GNU/Linux offers freedom of choice and control over the system, making it an excellent option for users who value independence from large corporations and the security of personal data.

I hope I have sparked your interest and that you will at least try this OS and see its advantages for yourself. After that, all that remains is to keep experimenting and learning new things.

Thank you for your attention, gain new knowledge and pass it on to others!

Sources

History of Windows

History of Unix

Unix (Wikipedia) — The main stages of the creation and development of Unix, key figures and concepts.

MULTICS (Wikipedia) — Information about the predecessor of Unix, the MULTICS operating system.

BSD (Wikipedia) — History of Berkeley Software Distribution (BSD), its influence on Unix and the development of the industry.

History of GNU/Linux

GNU (Wikipedia) — History of the GNU project, its philosophy and main components.

Linux (Wikipedia) — Information about the development of the Linux kernel and its importance for open source software.

Richard Stallman (Wikipedia) — Biography of Richard Stallman, his contribution to the free software movement.

Linus Torvalds (Wikipedia) — Biography of Linus Torvalds and the history of the creation of the Linux kernel.

SunOS (Wikipedia) — Information about the SunOS operating system, which inspired Linus Torvalds.

Revolution OS (YouTube) — Documentary film about the development of GNU/Linux and open source software.