Large language models and vector databases

The emergence of generative models, and more importantly their release to the general public, immediately reshaped the familiar “landscape” of information technology. Databases were not left out. As it turns out, large language models get along with SQL almost better than with any other programming language, and that certainly gives relational databases a new impetus. But are relational databases the only ones to benefit?

Problem

New opportunities come with new challenges, and GPT technology is no exception. The first attempts to move from playing around to real use cases exposed one technical problem. It is not particularly sophisticated, but it has to be solved somehow.

The problem is the size of the input we feed to the neural network. While we were having fun asking it questions like “which is heavier, a kilogram of fluff or a kilogram of nails?”, a limit of four thousand tokens seemed quite acceptable, even for Russian (recall that Russian text uses up tokens several times faster than English). Four thousand characters is already a fair amount of text: it takes about 4 minutes to read. For jokes, that is more than enough.

The limit starts to pinch when we try to adapt the language model to a real use case. In many cases, this means implicitly attaching something else to the user’s question. For example, I use a language model to let an unskilled user work with a database. The user asks a question in plain language. I add a description of the database structure to the question and ask the neural network to build an SQL query.
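The prompt assembly described above can be sketched in a few lines of Python. This is a minimal illustration, assuming the schema description is kept as plain text; the function name `build_sql_prompt`, the schema text, and the instruction wording are hypothetical, and the actual call to a language model is left out.

```python
# Hypothetical description of the database structure. In practice it is
# prepared once and silently attached to every user question.
SCHEMA_DESCRIPTION = """\
Table sales: product TEXT, sale_date DATE, profit REAL, profitability REAL
"""


def build_sql_prompt(user_question: str, schema: str = SCHEMA_DESCRIPTION) -> str:
    """Combine the database description with the user's question.

    The user only types the question; the schema and the instruction are
    added behind the scenes before the prompt is sent to the model.
    """
    return (
        "You are given the following database structure:\n"
        f"{schema}\n"
        "Write one SQL query that answers the question below.\n"
        f"Question: {user_question}\n"
        "SQL:"
    )


if __name__ == "__main__":
    print(build_sql_prompt("What are the 5 most profitable products?"))
```

Note that the schema travels with every question, so its size counts against the token limit on each call.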

There is another sensible way to use the neural network, and the idea is obvious. Suppose we run a support service for some product and receive a user’s question. If at that moment we make the neural network RTFM (it has no choice!), it will, on average, answer the question better than a human would. The human did RTFM too, of course, but that was long ago and some of it has been forgotten. The idea works, but the catch is that these very FMs can be huge. Neither four, nor eight, nor even the 32 thousand tokens promised for GPT-4 may be enough.
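A rough way to check whether a manual fits at all is to estimate its size in tokens. The sketch below assumes the common rule of thumb of about 4 characters per token for English text (Russian needs a smaller ratio); `estimate_tokens`, `fits_in_context`, and the 500-token reserve for the answer are hypothetical choices, and an exact count requires the model’s own tokenizer.

```python
import math

# Context window sizes mentioned in the text, in tokens.
CONTEXT_WINDOWS = [4_000, 8_000, 32_000]


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate: character count divided by an assumed ratio."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(manual: str, question: str, window: int,
                    reserve_for_answer: int = 500,
                    chars_per_token: float = 4.0) -> bool:
    """Check whether manual + question + room for the answer fit the window."""
    used = (estimate_tokens(manual, chars_per_token)
            + estimate_tokens(question, chars_per_token))
    return used + reserve_for_answer <= window


if __name__ == "__main__":
    manual = "x" * 200_000  # stand-in for a 200,000-character manual
    question = "How do I reset my password?"
    for window in CONTEXT_WINDOWS:
        print(window, fits_in_context(manual, question, window))
```

Under these assumptions a 200,000-character manual comes to about 50,000 tokens, so it fits into none of the three windows.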

Do not forget that this problem is measured not only in tokens (or, if you prefer, in bytes) but also in money. While we are having fun and prospective vendors compete to show us “attractions of unheard-of generosity”, this is not very noticeable. But, as the Chinese say, “you can’t wrap fire in paper.” Every call to a large language model runs on a supercomputer, and that costs money. Even at the rather generous rates OpenAI currently offers, our hypothetical artificial helpdesk worker could end up costing a fortune.
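The money side is simple arithmetic: you pay for every token sent and received, on every call. The sketch below assumes per-1,000-token pricing with separate rates for the prompt and the answer; the prices are placeholders in arbitrary currency units, not any vendor’s actual rates, and the traffic figures are invented for illustration.

```python
def cost_per_request(prompt_tokens: int, answer_tokens: int,
                     price_per_1k_prompt: float,
                     price_per_1k_answer: float) -> float:
    """Cost of one call when prompt and answer tokens are billed separately."""
    return (prompt_tokens / 1000 * price_per_1k_prompt
            + answer_tokens / 1000 * price_per_1k_answer)


def monthly_cost(prompt_tokens: int, answer_tokens: int, requests_per_day: int,
                 price_per_1k_prompt: float, price_per_1k_answer: float,
                 days: int = 30) -> float:
    """Total cost of a month of traffic at a constant request rate."""
    per_request = cost_per_request(prompt_tokens, answer_tokens,
                                   price_per_1k_prompt, price_per_1k_answer)
    return per_request * requests_per_day * days


# Placeholder prices per 1,000 tokens (hypothetical, not a vendor quote).
PROMPT_PRICE = 0.01
ANSWER_PRICE = 0.02

if __name__ == "__main__":
    # Whole manual in every prompt vs. only one relevant part of it.
    whole_manual = monthly_cost(30_000, 500, 1_000, PROMPT_PRICE, ANSWER_PRICE)
    one_part = monthly_cost(2_000, 500, 1_000, PROMPT_PRICE, ANSWER_PRICE)
    print(f"whole manual: {whole_manual:,.0f}, one part: {one_part:,.0f}")
```

With these made-up numbers, sending a 30,000-token manual with each of 1,000 daily questions costs about 9,300 units a month, versus about 900 when only a 2,000-token part is sent. The prompt, not the answer, dominates the bill.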

And finally, there is one more point. Even if progress frees us from worrying about tokens or money, the question of quality will remain: as the prompt grows, the quality of the result can suddenly drop. Here is a concrete example. As I said, I let users work with a database, and I have a description of the database structure. It includes a sales table with the fields profit and profitability. I test it with questions like “the 5 most profitable products”, “last month’s profit”, and so on, and get correct SQL queries back. Everything is fine. At some point, I decide to add a table of product prices to the description: retail price such-and-such, wholesale price such-and-such, and so on. The effect is unexpected. Now, when asked about profit, the language model starts jumping on one leg, waving its arms and shouting: “I know, I know, I know how to calculate profit!” And it starts computing profit from the product prices, even though the schema clearly shows that a ready-made value is available. The language model simply has to show off how “smart” it is.
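To make the example concrete, here is a minimal sketch using Python’s built-in `sqlite3`. The column types, the `prices` layout, the helper `make_demo_db`, and the sample rows are assumptions for illustration only (the real schema is not shown here). The query expected for “the 5 most profitable products” just aggregates the ready-made `profit` column; nothing has to be derived from `prices`.

```python
import sqlite3

# Illustrative schema: sales already carries ready-made profit and
# profitability values; prices is the table that was added later.
SCHEMA = """
CREATE TABLE sales (
    product       TEXT,
    sale_date     TEXT,
    profit        REAL,  -- ready-made profit for the sale
    profitability REAL   -- ready-made profitability for the sale
);
CREATE TABLE prices (
    product   TEXT,
    retail    REAL,
    wholesale REAL
);
"""

# The query we expect for "the 5 most profitable products":
# it aggregates the existing profit column and ignores prices entirely.
EXPECTED_QUERY = """
SELECT product, SUM(profit) AS total_profit
FROM sales
GROUP BY product
ORDER BY total_profit DESC
LIMIT 5
"""


def make_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with a few hypothetical sales rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?, ?, ?)",
        [("A", "2023-05-01", 100.0, 0.20), ("A", "2023-05-02", 50.0, 0.20),
         ("B", "2023-05-01", 300.0, 0.30), ("C", "2023-05-03", 20.0, 0.05),
         ("D", "2023-05-04", 80.0, 0.10), ("E", "2023-05-05", 10.0, 0.02),
         ("F", "2023-05-06", 200.0, 0.25)],
    )
    return conn


def top_products(conn: sqlite3.Connection) -> list[tuple[str, float]]:
    return conn.execute(EXPECTED_QUERY).fetchall()


if __name__ == "__main__":
    print(top_products(make_demo_db()))
```

On the sample data this returns products B, F, A, D and C with their total profit. A query that instead recomputes profit from retail and wholesale prices is exactly the over-eager answer described above.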

Solution

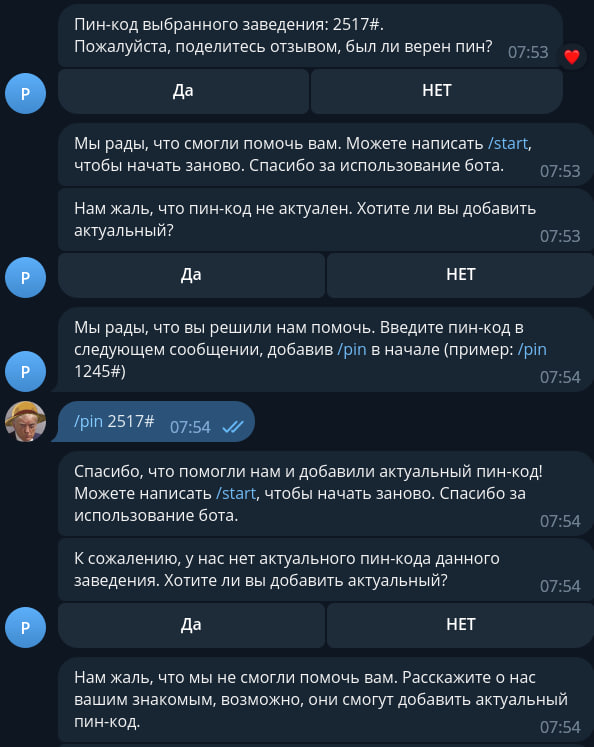

We have looked at two cases. For different reasons, both require us to split the prompt into parts somehow. Then, depending on the question we receive from the user, we add one part or another.
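A minimal sketch of the splitting step, assuming the document is plain text with blank lines between paragraphs and using a hypothetical character limit per part (`max_chars`). Splitting by headings, by table, or by token count are equally valid choices; `split_into_parts` is only one simple option.

```python
def split_into_parts(document: str, max_chars: int = 1500) -> list[str]:
    """Split a long prompt (a manual, a schema description) into parts.

    Paragraphs separated by blank lines are kept whole and packed greedily
    into parts of at most max_chars characters. A single paragraph longer
    than max_chars becomes a part on its own rather than being cut.
    """
    parts: list[str] = []
    current = ""
    for paragraph in (p.strip() for p in document.split("\n\n")):
        if not paragraph:
            continue
        candidate = f"{current}\n\n{paragraph}" if current else paragraph
        if not current or len(candidate) <= max_chars:
            current = candidate
        else:
            parts.append(current)
            current = paragraph
    if current:
        parts.append(current)
    return parts


if __name__ == "__main__":
    schema_description = (
        "Table sales: product, sale_date, profit, profitability.\n\n"
        "Table prices: product, retail price, wholesale price.\n\n"
        "Table customers: customer_id, name, city."
    )
    for part in split_into_parts(schema_description, max_chars=60):
        print("---\n" + part)
```

With `max_chars=60`, each table description in the sample ends up as a separate part, which is what we want: a question about profit should not drag the price table along with it.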

Vector databases are best suited for this task. They appeared relatively recently. They are not relational databases, and they do not belong to the NoSQL family either; they stand apart. Vector databases are designed to store and retrieve one particular type of data: vector embeddings. All they can do, and do well, is answer the question “what is the best fit for this text?” by comparing the embedding of the query with the stored embeddings. That is exactly what we need. Whether it is a manual or a description of the database structure, we split our original large prompt into parts. When a user’s question arrives, we determine which part it belongs to and use that part in the request.
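A minimal sketch of that retrieval step, assuming an in-memory store in place of a real vector database and a bag-of-words vector in place of a real embedding model. Both are hypothetical stand-ins: `ToyVectorStore`, `embed`, and `best_fit` are not any product’s API. The mechanics match in spirit: every part is stored with its vector, the question is turned into a vector, and the part with the highest cosine similarity is attached to the prompt.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a sparse bag-of-words vector.

    A real system would call a neural embedding model here; word counts
    are used only so that the example runs without external services.
    """
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(a[key] * b[key] for key in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """In-memory stand-in for a vector database: store parts, return best fits."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        # Store each part together with its precomputed vector.
        self._items.append((text, embed(text)))

    def best_fit(self, query: str, k: int = 1) -> list[str]:
        # Linear scan: rank all stored parts by similarity to the query.
        query_vec = embed(query)
        ranked = sorted(self._items,
                        key=lambda item: cosine(query_vec, item[1]),
                        reverse=True)
        return [text for text, _ in ranked[:k]]


if __name__ == "__main__":
    store = ToyVectorStore()
    store.add("Table sales: product, sale_date, profit, profitability. "
              "Use profit for any question about profit.")
    store.add("Table prices: product, retail price, wholesale price.")

    question = "What was last month's profit?"
    part = store.best_fit(question)[0]
    # Only the relevant part of the big prompt goes to the model.
    print(f"{part}\n\nQuestion: {question}")
```

A real vector database replaces the linear scan with an approximate nearest-neighbour index, and a neural embedding model would also match a question to a part that uses different words with the same meaning, which plain word counts cannot do.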

Conclusion

Vector databases were born in the world of artificial intelligence and machine learning. With the advent of large language models, they are becoming useful not only to the relatively narrow circle of people directly involved in training models, but also to those who reap the fruits of that labor by putting large language models to work. Anyone who considers themselves a database specialist should take a look at vector databases.
