Kubernetes: why is it so important to set up system resource management?

As a rule, any application needs a dedicated pool of resources to run correctly and stably. But what if several applications share the same capacity? How do you guarantee each of them the minimum resources it needs? How do you limit resource consumption? How do you distribute the load properly between nodes? And how do you make horizontal scaling work when the load on the application grows?

Let's start with the basic resource types in the system: CPU time and RAM. In k8s manifests, these resources are measured in the following units:

- CPU – in cores (fractions of a core are usually written in millicores, e.g. 200m = 0.2 CPU)

- RAM – in bytes (usually with a suffix such as Mi or Gi)
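To make this notation concrete, here is a minimal Python sketch that converts the quantities used in this article into plain numbers (millicores and bytes). It handles only plain numbers, the m suffix for CPU, and the Ki/Mi/Gi/Ti and k/M/G/T suffixes for memory; it is an illustration, not a full implementation of the Kubernetes quantity format, and the function names are hypothetical.

```python
# Simplified parser for the quantity notation used in this article.
# Not the full Kubernetes quantity grammar (no exponents like "1e3").
BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
DECIMAL_SUFFIXES = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}


def cpu_to_millicores(value: str) -> int:
    """'200m' -> 200, '1' -> 1000, '0.5' -> 500."""
    if value.endswith("m"):
        return int(value[:-1])
    return round(float(value) * 1000)


def memory_to_bytes(value: str) -> int:
    """'1Gi' -> 1073741824, '64M' -> 64000000, '1024' -> 1024."""
    # Binary suffixes are checked first so that "Mi" is not mistaken for "M".
    for suffix, factor in BINARY_SUFFIXES.items():
        if value.endswith(suffix):
            return int(float(value[: -len(suffix)]) * factor)
    for suffix, factor in DECIMAL_SUFFIXES.items():
        if value.endswith(suffix):
            return int(float(value[: -len(suffix)]) * factor)
    return int(value)


print(cpu_to_millicores("200m"))  # 200
print(memory_to_bytes("1Gi"))     # 1073741824
```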

In addition, two types of requirements can be set for each resource: requests and limits. Requests describe the minimum amount of free node resources required to run the container (and the pod as a whole), while limits set a hard cap on the resources available to the container.

It is important to understand that a manifest does not have to define both types explicitly, and the behavior is as follows:

- If only the limit of a resource is set explicitly, the request for that resource automatically takes the same value as the limit (you can verify this with kubectl describe). In other words, the container will be capped at exactly the amount of resources it requires to start.

- If only the request of a resource is set explicitly, no upper bound is placed on that resource, i.e. the container is limited only by the resources of the node itself.
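The two rules above can be sketched in a few lines of Python. This is a hypothetical helper working on plain dictionaries (CPU in millicores, memory in bytes), not a Kubernetes API; it ignores namespace defaults from LimitRange and uses None to mean "no upper bound".

```python
from typing import Dict, Optional, Tuple

Resources = Dict[str, int]  # resource name -> amount (millicores or bytes)


def effective_resources(
    requests: Resources, limits: Resources
) -> Tuple[Resources, Dict[str, Optional[int]]]:
    """Apply the defaulting rules for a single container.

    - only a limit set   -> the request takes the limit's value;
    - only a request set -> no limit (None): capped only by the node.
    """
    eff_requests = dict(requests)
    eff_limits: Dict[str, Optional[int]] = {}
    for name in set(requests) | set(limits):
        if name in limits:
            eff_limits[name] = limits[name]
            eff_requests.setdefault(name, limits[name])
        else:
            eff_limits[name] = None
    return eff_requests, eff_limits


# The app-nginx container from the next example: a memory request and a CPU limit.
print(effective_resources({"memory": 2**30}, {"cpu": 200}))
```

For the app-nginx container from the example that follows, this yields a CPU request of 200m (taken from the limit) and no memory limit.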

Resource management can also be configured not only for a specific container but at the namespace level, using the following objects:

- LimitRange – describes the restriction policy at the container/pod level within a namespace. It is used to set default requests and limits for containers/pods, to prevent the creation of obviously oversized (or undersized) containers/pods through min/max bounds, and to constrain the allowed ratio between limits and requests

- ResourceQuota – describes the restriction policy for all containers in a namespace as a whole and is typically used to split resources between environments (useful when environments are not strictly separated at the node level)

The following are examples of manifests where resource limits are set:

At the specific container level:

```yaml
containers:
- name: app-nginx
  image: nginx
  resources:
    requests:
      memory: 1Gi   # no memory limit: the container may use all free RAM on the node
    limits:
      cpu: 200m     # no CPU request: it defaults to this limit
```

That is, to start the nginx container the node must have at least 1Gi of free RAM and 0.2 CPU, while the container can use at most 0.2 CPU and all the RAM available on the node.

At the level of the whole namespace:

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: nxs-test
spec:
  hard:
    requests.cpu: 300m
    requests.memory: 1Gi
    limits.cpu: 700m
    limits.memory: 2Gi
```

That is, the sum of the requests of all containers in the default namespace cannot exceed 300m CPU and 1Gi RAM, and the sum of all limits cannot exceed 700m CPU and 2Gi RAM.
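The quota check boils down to summing the tracked values over all pods in the namespace, including the new one. The Python sketch below illustrates this with the nxs-test values; the function and data layout are hypothetical. Note one simplification: it treats a missing value as 0, whereas real ResourceQuota admission rejects a pod that omits a resource the quota tracks.

```python
from typing import Dict, List

MI, GI = 2**20, 2**30

# Values from the nxs-test manifest (CPU in millicores, memory in bytes).
HARD = {
    "requests.cpu": 300,
    "requests.memory": 1 * GI,
    "limits.cpu": 700,
    "limits.memory": 2 * GI,
}

PodResources = Dict[str, int]  # same keys as HARD, summed over the pod's containers


def admits(hard: Dict[str, int], existing: List[PodResources], new_pod: PodResources) -> bool:
    """True if every tracked sum stays within its hard limit after adding new_pod."""
    for key, limit in hard.items():
        used = sum(pod.get(key, 0) for pod in existing + [new_pod])
        if used > limit:
            return False
    return True
```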

Default restrictions for containers in a namespace:

```yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: nxs-limit-per-container
spec:
  limits:
  - type: Container
    defaultRequest:   # request applied when a container sets none
      cpu: 100m
      memory: 1Gi
    default:          # limit applied when a container sets none
      cpu: 1
      memory: 2Gi
    min:
      cpu: 50m
      memory: 500Mi
    max:
      cpu: 2
      memory: 4Gi
```

That is, in the default namespace every container gets, by default, a request of 100m CPU and 1Gi RAM and a limit of 1 CPU and 2Gi RAM. In addition, the allowed request/limit values are bounded: from 50m to 2 for CPU and from 500Mi to 4Gi for RAM.
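Here is a rough Python sketch of how the nxs-limit-per-container policy is applied to a container: missing values are filled in, then min/max bounds are checked. The function is hypothetical and simplified; it assumes, as described earlier, that a container with only a limit gets a request equal to that limit rather than the namespace defaultRequest.

```python
from typing import Dict, Tuple

MI, GI = 2**20, 2**30

# Values from nxs-limit-per-container (CPU in millicores, memory in bytes).
LIMIT_RANGE = {
    "defaultRequest": {"cpu": 100, "memory": 1 * GI},
    "default": {"cpu": 1000, "memory": 2 * GI},
    "min": {"cpu": 50, "memory": 500 * MI},
    "max": {"cpu": 2000, "memory": 4 * GI},
}


def apply_limit_range(requests: Dict[str, int], limits: Dict[str, int]) -> Tuple[Dict[str, int], Dict[str, int]]:
    """Fill in defaults, then validate; raise ValueError if a bound is violated."""
    requests, limits = dict(requests), dict(limits)
    for name in ("cpu", "memory"):
        if name in limits and name not in requests:
            requests[name] = limits[name]  # an explicit limit also becomes the request
        limits.setdefault(name, LIMIT_RANGE["default"][name])
        requests.setdefault(name, LIMIT_RANGE["defaultRequest"][name])
        low, high = LIMIT_RANGE["min"][name], LIMIT_RANGE["max"][name]
        # min <= request <= limit <= max must hold
        if not (low <= requests[name] <= limits[name] <= high):
            raise ValueError(f"{name}: request/limit outside [{low}, {high}]")
    return requests, limits
```

A container with no resources section at all ends up with exactly the defaults from the manifest, while a container asking for a 3-CPU limit is rejected.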

Limits at the pod level in a namespace:

```yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: nxs-limit-pod
spec:
  limits:
  - type: Pod
    max:
      cpu: 4
      memory: 1Gi
```

That is, each pod in the default namespace may use at most 4 vCPU and 1Gi of RAM in total across its containers.
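The pod-level check sums the limits of all containers in the pod and compares the totals with the Pod max. Below is a minimal Python sketch using the nxs-limit-pod values. The function name is hypothetical. It also makes an assumption: a container without a limit is treated as a violation, because an unbounded container cannot be checked against a maximum.

```python
from typing import Dict, List

MI, GI = 2**20, 2**30

POD_MAX = {"cpu": 4000, "memory": 1 * GI}  # nxs-limit-pod: cpu 4, memory 1Gi


def pod_within_max(container_limits: List[Dict[str, int]]) -> bool:
    """Compare the per-resource sum of container limits with the Pod max."""
    for name, maximum in POD_MAX.items():
        total = 0
        for limits in container_limits:
            if name not in limits:
                return False  # assumption: no limit means the max cannot be satisfied
            total += limits[name]
        if total > maximum:
            return False
    return True
```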

Now let's look at the benefits these restrictions give us.

The mechanism of load balancing between nodes

In k8s, pods are placed on nodes by the scheduler, which follows a specific algorithm. When choosing the optimal node for a pod, this algorithm goes through two stages:

- Filtering

- Ranking (scoring)

That is, according to the configured policy, the scheduler first selects the nodes on which the pod can be launched, based on a set of predicates (including a check that the node has enough resources to start the pod, PodFitsResources). Then each of these nodes is awarded points according to priorities (for example, LeastResourceAllocation / LeastRequestedPriority gives more points to a node with more free resources, and BalancedResourceAllocation favors nodes whose CPU and memory usage stay balanced), and the pod is started on the node with the highest score (if several nodes tie, one of them is chosen at random).
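The two stages can be sketched in Python as follows. The Node type and function names are hypothetical, and the ranking uses a simplified "share of unrequested CPU and RAM" score as a stand-in for the real priority functions. Note that the sketch, like the scheduler, looks only at requests and never at actual consumption.

```python
import random
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Node:
    name: str
    cpu_capacity: int       # allocatable CPU, millicores
    mem_capacity: int       # allocatable RAM, bytes
    cpu_requested: int = 0  # sum of CPU requests of pods already on the node
    mem_requested: int = 0  # sum of RAM requests of pods already on the node


def fits(node: Node, cpu: int, mem: int) -> bool:
    """Filtering: the pod's requests must fit into the node's unrequested capacity."""
    return (node.cpu_requested + cpu <= node.cpu_capacity
            and node.mem_requested + mem <= node.mem_capacity)


def free_share(node: Node, cpu: int, mem: int) -> float:
    """Ranking: average share of CPU and RAM left unrequested after placing the pod."""
    cpu_free = 1 - (node.cpu_requested + cpu) / node.cpu_capacity
    mem_free = 1 - (node.mem_requested + mem) / node.mem_capacity
    return (cpu_free + mem_free) / 2


def schedule(nodes: List[Node], cpu: int, mem: int,
             score: Callable[[Node, int, int], float] = free_share,
             rng: Optional[random.Random] = None) -> Optional[str]:
    rng = rng or random.Random()
    feasible = [n for n in nodes if fits(n, cpu, mem)]
    if not feasible:
        return None  # nothing fits: the pod stays Pending
    scores = {n.name: score(n, cpu, mem) for n in feasible}
    best = max(scores.values())
    winners = sorted(name for name, s in scores.items() if s == best)
    return rng.choice(winners)  # a tie is broken at random
```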

Keep in mind that when evaluating a node's available resources, the scheduler relies on the data stored in etcd, i.e. on the sum of the resource requests of the pods running on that node, not on their actual consumption. This information can be seen in the output of kubectl describe node $NODE, for example:

```
# kubectl describe nodes nxs-k8s-s1
..
Non-terminated Pods:         (9 in total)
  Namespace       Name                                       CPU Requests  CPU Limits  Memory Requests  Memory Limits  AGE
  ---------       ----                                       ------------  ----------  ---------------  -------------  ---
  ingress-nginx   nginx-ingress-controller-754b85bf44-qkt2t  0 (0%)        0 (0%)      0 (0%)           0 (0%)         233d
  kube-system     kube-flannel-26bl4                         150m (0%)     300m (1%)   64M (0%)         500M (1%)      233d
  kube-system     kube-proxy-exporter-cb629                  0 (0%)        0 (0%)      0 (0%)           0 (0%)         233d
  kube-system     kube-proxy-x9fsc                           0 (0%)        0 (0%)      0 (0%)           0 (0%)         233d
  kube-system     nginx-proxy-k8s-worker-s1                  25m (0%)      300m (1%)   32M (0%)         512M (1%)      233d
  nxs-monitoring  alertmanager-main-1                        100m (0%)     100m (0%)   425Mi (1%)       25Mi (0%)      233d
  nxs-logging     filebeat-lmsmp                             100m (0%)     0 (0%)      100Mi (0%)       200Mi (0%)     233d
  nxs-monitoring  node-exporter-v4gdq                        112m (0%)     122m (0%)   200Mi (0%)       220Mi (0%)     233d
Allocated resources:
  (Total limits may be over 100 percent, i.e., overcommitted.)
  Resource           Requests          Limits
  --------           --------          ------
  cpu                487m (3%)         822m (5%)
  memory             15856217600 (2%)  749976320 (3%)
  ephemeral-storage  0 (0%)            0 (0%)
```

Here we see all the pods running on a particular node, as well as the resources each of them requests. And here is what the scheduler log looks like when the cronjob-cron-events-1573793820-xt6q9 pod is started (this information appears in the scheduler log when the log verbosity is set to 10 with the -v=10 launch argument):

```
I1115 07:57:21.637791 1 scheduling_queue.go:908] About to try and schedule pod nxs-stage/cronjob-cron-events-1573793820-xt6q9
I1115 07:57:21.637804 1 scheduler.go:453] Attempting to schedule pod: nxs-stage/cronjob-cron-events-1573793820-xt6q9
I1115 07:57:21.638285 1 predicates.go:829] Schedule Pod nxs-stage/cronjob-cron-events-1573793820-xt6q9 on Node nxs-k8s-s5 is allowed, Node is running only 16 out of 110 Pods.
I1115 07:57:21.638300 1 predicates.go:829] Schedule Pod nxs-stage/cronjob-cron-events-1573793820-xt6q9 on Node nxs-k8s-s6 is allowed, Node is running only 20 out of 110 Pods.
I1115 07:57:21.638322 1 predicates.go:829] Schedule Pod nxs-stage/cronjob-cron-events-1573793820-xt6q9 on Node nxs-k8s-s3 is allowed, Node is running only 20 out of 110 Pods.
I1115 07:57:21.638322 1 predicates.go:829] Schedule Pod nxs-stage/cronjob-cron-events-1573793820-xt6q9 on Node nxs-k8s-s4 is allowed, Node is running only 17 out of 110 Pods.
I1115 07:57:21.638334 1 predicates.go:829] Schedule Pod nxs-stage/cronjob-cron-events-1573793820-xt6q9 on Node nxs-k8s-s10 is allowed, Node is running only 16 out of 110 Pods.
I1115 07:57:21.638365 1 predicates.go:829] Schedule Pod nxs-stage/cronjob-cron-events-1573793820-xt6q9 on Node nxs-k8s-s12 is allowed, Node is running only 9 out of 110 Pods.
I1115 07:57:21.638334 1 predicates.go:829] Schedule Pod nxs-stage/cronjob-cron-events-1573793820-xt6q9 on Node nxs-k8s-s11 is allowed, Node is running only 11 out of 110 Pods.
I1115 07:57:21.638385 1 predicates.go:829] Schedule Pod nxs-stage/cronjob-cron-events-1573793820-xt6q9 on Node nxs-k8s-s1 is allowed, Node is running only 19 out of 110 Pods.
I1115 07:57:21.638402 1 predicates.go:829] Schedule Pod nxs-stage/cronjob-cron-events-1573793820-xt6q9 on Node nxs-k8s-s2 is allowed, Node is running only 21 out of 110 Pods.
I1115 07:57:21.638383 1 predicates.go:829] Schedule Pod nxs-stage/cronjob-cron-events-1573793820-xt6q9 on Node nxs-k8s-s9 is allowed, Node is running only 16 out of 110 Pods.
I1115 07:57:21.638335 1 predicates.go:829] Schedule Pod nxs-stage/cronjob-cron-events-1573793820-xt6q9 on Node nxs-k8s-s8 is allowed, Node is running only 18 out of 110 Pods.
I1115 07:57:21.638408 1 predicates.go:829] Schedule Pod nxs-stage/cronjob-cron-events-1573793820-xt6q9 on Node nxs-k8s-s13 is allowed, Node is running only 8 out of 110 Pods.
I1115 07:57:21.638478 1 predicates.go:1369] Schedule Pod nxs-stage/cronjob-cron-events-1573793820-xt6q9 on Node nxs-k8s-s10 is allowed, existing pods anti-affinity terms satisfied.
I1115 07:57:21.638505 1 predicates.go:1369] Schedule Pod nxs-stage/cronjob-cron-events-1573793820-xt6q9 on Node nxs-k8s-s8 is allowed, existing pods anti-affinity terms satisfied.
I1115 07:57:21.638577 1 predicates.go:1369] Schedule Pod nxs-stage/cronjob-cron-events-1573793820-xt6q9 on Node nxs-k8s-s9 is allowed, existing pods anti-affinity terms satisfied.
I1115 07:57:21.638583 1 predicates.go:829] Schedule Pod nxs-stage/cronjob-cron-events-1573793820-xt6q9 on Node nxs-k8s-s7 is allowed, Node is running only 25 out of 110 Pods.
I1115 07:57:21.638932 1 resource_allocation.go:78] cronjob-cron-events-1573793820-xt6q9 -> nxs-k8s-s10: BalancedResourceAllocation, capacity 39900 millicores 66620178432 memory bytes, total request 2343 millicores 9640186880 memory bytes, score 9
I1115 07:57:21.638946 1 resource_allocation.go:78] cronjob-cron-events-1573793820-xt6q9 -> nxs-k8s-s10: LeastResourceAllocation, capacity 39900 millicores 66620178432 memory bytes, total request 2343 millicores 9640186880 memory bytes, score 8
I1115 07:57:21.638961 1 resource_allocation.go:78] cronjob-cron-events-1573793820-xt6q9 -> nxs-k8s-s9: BalancedResourceAllocation, capacity 39900 millicores 66620170240 memory bytes, total request 4107 millicores 11307422720 memory bytes, score 9
I1115 07:57:21.638971 1 resource_allocation.go:78] cronjob-cron-events-1573793820-xt6q9 -> nxs-k8s-s8: BalancedResourceAllocation, capacity 39900 millicores 66620178432 memory bytes, total request 5847 millicores 24333637120 memory bytes, score 7
I1115 07:57:21.638975 1 resource_allocation.go:78] cronjob-cron-events-1573793820-xt6q9 -> nxs-k8s-s9: LeastResourceAllocation, capacity 39900 millicores 66620170240 memory bytes, total request 4107 millicores 11307422720 memory bytes, score 8
I1115 07:57:21.638990 1 resource_allocation.go:78] cronjob-cron-events-1573793820-xt6q9 -> nxs-k8s-s8: LeastResourceAllocation, capacity 39900 millicores 66620178432 memory bytes, total request 5847 millicores 24333637120 memory bytes, score 7
I1115 07:57:21.639022 1 generic_scheduler.go:726] cronjob-cron-events-1573793820-xt6q9_nxs-stage -> nxs-k8s-s10: TaintTolerationPriority, Score: (10)
I1115 07:57:21.639030 1 generic_scheduler.go:726] cronjob-cron-events-1573793820-xt6q9_nxs-stage -> nxs-k8s-s8: TaintTolerationPriority, Score: (10)
I1115 07:57:21.639034 1 generic_scheduler.go:726] cronjob-cron-events-1573793820-xt6q9_nxs-stage -> nxs-k8s-s9: TaintTolerationPriority, Score: (10)
I1115 07:57:21.639041 1 generic_scheduler.go:726] cronjob-cron-events-1573793820-xt6q9_nxs-stage -> nxs-k8s-s10: NodeAffinityPriority, Score: (0)
I1115 07:57:21.639053 1 generic_scheduler.go:726] cronjob-cron-events-1573793820-xt6q9_nxs-stage -> nxs-k8s-s8: NodeAffinityPriority, Score: (0)
I1115 07:57:21.639059 1 generic_scheduler.go:726] cronjob-cron-events-1573793820-xt6q9_nxs-stage -> nxs-k8s-s9: NodeAffinityPriority, Score: (0)
I1115 07:57:21.639061 1 interpod_affinity.go:237] cronjob-cron-events-1573793820-xt6q9 -> nxs-k8s-s10: InterPodAffinityPriority, Score: (0)
I1115 07:57:21.639063 1 selector_spreading.go:146] cronjob-cron-events-1573793820-xt6q9 -> nxs-k8s-s10: SelectorSpreadPriority, Score: (10)
I1115 07:57:21.639073 1 interpod_affinity.go:237] cronjob-cron-events-1573793820-xt6q9 -> nxs-k8s-s8: InterPodAffinityPriority, Score: (0)
I1115 07:57:21.639077 1 selector_spreading.go:146] cronjob-cron-events-1573793820-xt6q9 -> nxs-k8s-s8: SelectorSpreadPriority, Score: (10)
I1115 07:57:21.639085 1 interpod_affinity.go:237] cronjob-cron-events-1573793820-xt6q9 -> nxs-k8s-s9: InterPodAffinityPriority, Score: (0)
I1115 07:57:21.639088 1 selector_spreading.go:146] cronjob-cron-events-1573793820-xt6q9 -> nxs-k8s-s9: SelectorSpreadPriority, Score: (10)
I1115 07:57:21.639103 1 generic_scheduler.go:726] cronjob-cron-events-1573793820-xt6q9_nxs-stage -> nxs-k8s-s10: SelectorSpreadPriority, Score: (10)
I1115 07:57:21.639109 1 generic_scheduler.go:726] cronjob-cron-events-1573793820-xt6q9_nxs-stage -> nxs-k8s-s8: SelectorSpreadPriority, Score: (10)
I1115 07:57:21.639114 1 generic_scheduler.go:726] cronjob-cron-events-1573793820-xt6q9_nxs-stage -> nxs-k8s-s9: SelectorSpreadPriority, Score: (10)
I1115 07:57:21.639127 1 generic_scheduler.go:781] Host nxs-k8s-s10 => Score 100037
I1115 07:57:21.639150 1 generic_scheduler.go:781] Host nxs-k8s-s8 => Score 100034
I1115 07:57:21.639154 1 generic_scheduler.go:781] Host nxs-k8s-s9 => Score 100037
I1115 07:57:21.639267 1 scheduler_binder.go:269] AssumePodVolumes for pod "nxs-stage/cronjob-cron-events-1573793820-xt6q9", node "nxs-k8s-s10"
I1115 07:57:21.639286 1 scheduler_binder.go:279] AssumePodVolumes for pod "nxs-stage/cronjob-cron-events-1573793820-xt6q9", node "nxs-k8s-s10": all PVCs bound and nothing to do
I1115 07:57:21.639333 1 factory.go:733] Attempting to bind cronjob-cron-events-1573793820-xt6q9 to nxs-k8s-s10
```

Here we see that the scheduler first filters the nodes and forms a list of 3 nodes on which the pod can be launched (nxs-k8s-s8, nxs-k8s-s9, nxs-k8s-s10). It then scores each of these nodes on several parameters (including BalancedResourceAllocation and LeastResourceAllocation) to determine the most suitable one. Finally, the pod is scheduled on the node with the highest score (here two nodes share the top score of 100037, so one of them, nxs-k8s-s10, is chosen at random).
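The LeastResourceAllocation and BalancedResourceAllocation values in this log can be reproduced by hand. The Python sketch below assumes the scoring formulas of the older scheduler versions this log comes from: a maximum score of 10, integer arithmetic, and a "total request" that already includes the pod being placed. It is a reconstruction for illustration, not code taken from the scheduler, and it does not explain the final 1000xx totals, which combine other weighted priorities.

```python
# Inputs copied from the log: (cpu capacity m, memory capacity B, total cpu request m, total memory request B).
NODES = {
    "nxs-k8s-s10": (39900, 66620178432, 2343, 9640186880),
    "nxs-k8s-s9": (39900, 66620170240, 4107, 11307422720),
    "nxs-k8s-s8": (39900, 66620178432, 5847, 24333637120),
}
MAX_PRIORITY = 10  # assumed maximum score per priority function


def least_requested(cpu_cap: int, mem_cap: int, cpu_req: int, mem_req: int) -> int:
    """More unrequested capacity -> higher score; CPU and memory averaged."""
    def part(req: int, cap: int) -> int:
        return (cap - req) * MAX_PRIORITY // cap
    return (part(cpu_req, cpu_cap) + part(mem_req, mem_cap)) // 2


def balanced(cpu_cap: int, mem_cap: int, cpu_req: int, mem_req: int) -> int:
    """Closer CPU and memory request fractions -> higher score."""
    cpu_frac, mem_frac = cpu_req / cpu_cap, mem_req / mem_cap
    if cpu_frac >= 1 or mem_frac >= 1:
        return 0
    return int((1 - abs(cpu_frac - mem_frac)) * MAX_PRIORITY)


for name, args in NODES.items():
    print(name, "LeastResourceAllocation", least_requested(*args),
          "BalancedResourceAllocation", balanced(*args))
```

Under these assumptions the output matches the log: 8/9 for nxs-k8s-s10, 8/9 for nxs-k8s-s9 and 7/7 for nxs-k8s-s8.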

Conclusion: if pods without requests run on a node, then from the point of view of k8s (in terms of resource consumption) it is as if those pods were not on the node at all. So if, say, you have a pod with a resource-hungry process (for example, wowza) and no requests are set for it, that pod may actually consume all the resources of the node, yet k8s will still consider the node unloaded. When ranking (specifically in the priorities that assess available resources), the node will receive the same number of points as a node with no running pods at all, which can ultimately lead to an uneven distribution of load between nodes.

Pod eviction

As you know, each pod is assigned one of three QoS classes:

- Guaranteed – assigned when every container in the pod has both a request and a limit set for memory and CPU, and these values are equal

- Burstable – the pod does not meet the Guaranteed criteria, but at least one of its containers has a request or limit set (for example, request < limit)

- BestEffort – when no container in the pod has any requests or limits
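The classification rules can be expressed as a short Python sketch. It looks only at CPU and memory, treats a missing request as equal to the limit (as described earlier for containers with only a limit), and uses a hypothetical dictionary layout for containers rather than any real client library type.

```python
from typing import Dict, List

Container = Dict[str, Dict[str, int]]  # {"requests": {...}, "limits": {...}}


def qos_class(containers: List[Container]) -> str:
    """Return "Guaranteed", "Burstable" or "BestEffort" for a pod."""
    guaranteed = True
    anything_set = False
    for container in containers:
        requests = container.get("requests", {})
        limits = container.get("limits", {})
        for name in ("cpu", "memory"):
            if name in requests or name in limits:
                anything_set = True
            limit = limits.get(name)
            request = requests.get(name, limit)  # a missing request defaults to the limit
            if limit is None or request != limit:
                guaranteed = False
    if guaranteed:
        return "Guaranteed"
    return "Burstable" if anything_set else "BestEffort"
```

For instance, the app-nginx container shown earlier (a memory request and a CPU limit only) makes its pod Burstable.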

When a node runs short of resources (disk, memory), kubelet starts ranking and evicting pods according to an algorithm that takes into account the pod's priority and its QoS class. For RAM, for example, points are also assigned based on the QoS class as follows (these are the oom_score_adj values kubelet sets for container processes; the higher the value, the earlier the kernel OOM killer terminates the process when memory runs out):

- Guaranteed: -998

- BestEffort: 1000

- Burstable: min(max(2, 1000 - (1000 * memoryRequestBytes) / machineMemoryCapacityBytes), 999)
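The list above can be turned into a small function. This Python sketch simply transcribes the values and the Burstable formula from the list, using integer division (an assumption); the function name is hypothetical.

```python
GUARANTEED_OOM_SCORE_ADJ = -998
BESTEFFORT_OOM_SCORE_ADJ = 1000


def oom_score_adj(qos: str, memory_request_bytes: int, machine_memory_bytes: int) -> int:
    """Score by QoS class; higher means killed earlier when memory runs out."""
    if qos == "Guaranteed":
        return GUARANTEED_OOM_SCORE_ADJ
    if qos == "BestEffort":
        return BESTEFFORT_OOM_SCORE_ADJ
    # Burstable: the larger the memory request, the lower (safer) the score,
    # clamped to [2, 999] so it stays between Guaranteed and BestEffort.
    score = 1000 - (1000 * memory_request_bytes) // machine_memory_bytes
    return min(max(2, score), 999)
```

A Burstable pod requesting half of the node's memory gets 500, whereas one requesting almost nothing gets 999, just below BestEffort.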

That is, all else (priority) being equal, pods of the BestEffort QoS class are the first to be evicted from the node.

Conclusion: to reduce the likelihood of an important pod being evicted from a node when the node runs short of resources, you should set requests/limits for it in addition to its priority.

Horizontal autoscaling of application pods (HPA)

When the task is to automatically increase or decrease the number of pods depending on resource usage (system metrics such as CPU/RAM, or custom ones such as RPS), the k8s HPA (Horizontal Pod Autoscaler) object can help. Its algorithm is as follows:

- The current value of the observed resource is determined (currentMetricValue)

- The desired value of the resource is determined (desiredMetricValue); for system resources it is set relative to the request

- The current number of replicas is determined (currentReplicas)

- The desired number of replicas (desiredReplicas) is calculated with the following formula:

desiredReplicas = ceil[currentReplicas * (currentMetricValue / desiredMetricValue)]

However, no scaling occurs when the ratio currentMetricValue / desiredMetricValue is close to 1 (the allowed tolerance is configurable with the kube-controller-manager flag --horizontal-pod-autoscaler-tolerance and defaults to 0.1).
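The formula and the tolerance rule can be sketched in Python as follows. The function is hypothetical; it also clamps the result to the minimum/maximum replica count, with defaults of 2 and 10 taken from the HPA manifest below.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     desired_metric: float, tolerance: float = 0.1,
                     min_replicas: int = 2, max_replicas: int = 10) -> int:
    ratio = current_metric / desired_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: do not scale
    else:
        desired = math.ceil(current_replicas * ratio)
    # Keep the result within the HPA's replica range.
    return max(min_replicas, min(desired, max_replicas))
```

For example, at 32% usage against a 30% target (ratio of about 1.07) four replicas stay at four, while at 90% they would grow to 12 and be capped at 10.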

Let's look at HPA using the app-test application (deployed as a Deployment), where the number of replicas must change depending on CPU usage:

Application manifest

```yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  name: app-test
spec:
  selector:
    matchLabels:
      app: app-test
  replicas: 2
  template:
    metadata:
      labels:
        app: app-test
    spec:
      containers:
      - name: nginx
        image: registry.nixys.ru/generic-images/nginx
        imagePullPolicy: Always
        resources:
          requests:
            cpu: 60m   # HPA utilization is measured against this request
        ports:
        - name: http
          containerPort: 80
      - name: nginx-exporter
        image: nginx/nginx-prometheus-exporter
        resources:
          requests:
            cpu: 30m
        ports:
        - name: nginx-exporter
          containerPort: 9113
        args:
        - -nginx.scrape-uri
        - http://127.0.0.1:80/nginx-status
```

That is, the application pod initially runs as two replicas, each containing two containers, nginx and nginx-exporter, and a CPU request is set for each of these containers.

HPA manifest

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: app-test-hpa
spec:
  maxReplicas: 10
  minReplicas: 2
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: app-test
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 30   # percent of the pod's CPU request
```

That is, we created an HPA that watches the app-test Deployment and adjusts the number of application pods based on CPU usage (we expect each pod to consume 30% of the CPU it requests), keeping the number of replicas between 2 and 10.

Now let's see how HPA works when load is applied to one of the pods:

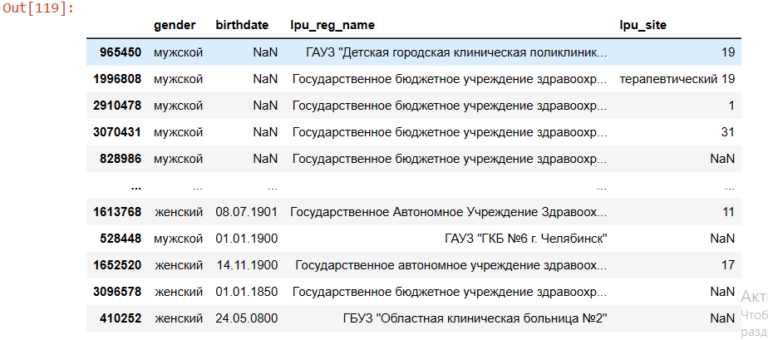

```
# kubectl top pod
NAME                        CPU(cores)   MEMORY(bytes)
app-test-78559f8f44-pgs58   101m         243Mi
app-test-78559f8f44-cj4jz   4m           240Mi
```

In total, we have the following:

- Desired value (desiredMetricValue): according to the HPA settings, 30%

- Current value (currentMetricValue): the controller-manager calculates the average resource consumption in %, i.e. roughly does the following:

  - Gets the absolute pod metric values from the metrics server, i.e. 101m and 4m

  - Calculates the average absolute value, i.e. (101m + 4m) / 2 ≈ 53m

  - Gets the absolute value of the desired resource consumption by summing the requests of all the pod's containers: 60m + 30m = 90m

  - Calculates the average CPU consumption as a percentage of the pod's request, i.e. 53m / 90m * 100% ≈ 59%

Now we have everything needed to decide whether the number of replicas should change. To do so, we calculate the ratio:

ratio = 59% / 30% ≈ 1.97

That is, the number of replicas should roughly double: ceil[2 * 1.97] = 4.
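The same calculation can be reproduced in Python. As a simplifying assumption, this sketch sums usage and requests across all pods and truncates the utilization to a whole percent instead of rounding intermediate values (53m, 59%) as the text does, so it arrives at 58%; the result is still 4 replicas. The function name is hypothetical.

```python
import math
from typing import List, Tuple


def hpa_cpu(usage_m: List[int], pod_request_m: int, target_pct: int,
            tolerance: float = 0.1) -> Tuple[int, int]:
    """Return (current utilization in whole percent, desired replica count)."""
    replicas = len(usage_m)
    utilization = sum(usage_m) * 100 // (pod_request_m * replicas)
    ratio = utilization / target_pct
    if abs(ratio - 1.0) <= tolerance:
        return utilization, replicas  # within tolerance: keep the current count
    return utilization, math.ceil(replicas * ratio)


# kubectl top values for the two app-test pods; pod request = 60m + 30m
print(hpa_cpu([101, 4], 60 + 30, 30))  # (58, 4)
```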

Conclusion: as you can see, for this mechanism to work, requests must be set for all containers in the observed pods.

The mechanism of horizontal node autoscaling (Cluster Autoscaler)

A tuned HPA alone is not enough to neutralize the impact of load spikes on the system. For example, based on the HPA settings the controller-manager decides that the number of replicas must be doubled, but the nodes do not have enough free resources to run that many pods (i.e. no node can provide the resources requested by the pods), so these pods enter the Pending state.

In this case, if the provider offers the appropriate IaaS/PaaS (for example, GKE/GCE, AKS, EKS, etc.), a tool such as Cluster Autoscaler can help. It lets you set the minimum and maximum number of nodes in the cluster and automatically adjusts the current number of nodes (by calling the cloud provider API to order or remove nodes) when the cluster runs short of resources and pods cannot be scheduled (they stay in the Pending state).
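To illustrate why scale-up depends entirely on requests, here is a deliberately simplified Python sketch that estimates how many empty nodes the Pending pods would need, using first-fit packing by request and capping the result at the node limit. This is an assumption-laden illustration: the real Cluster Autoscaler simulates the scheduler against node-group templates, and the function name and parameters here are hypothetical.

```python
from typing import List, Tuple

Pod = Tuple[int, int]  # (cpu request in millicores, memory request in bytes)


def nodes_to_add(pending: List[Pod], node_cpu: int, node_mem: int,
                 current_nodes: int, max_nodes: int) -> int:
    """First-fit estimate of extra nodes needed for the Pending pods."""
    new_nodes: List[List[int]] = []  # remaining [cpu, mem] on each new node
    for cpu, mem in sorted(pending, reverse=True):
        if cpu > node_cpu or mem > node_mem:
            continue  # no node of this size could ever host the pod
        for free in new_nodes:
            if free[0] >= cpu and free[1] >= mem:
                free[0] -= cpu
                free[1] -= mem
                break
        else:
            new_nodes.append([node_cpu - cpu, node_mem - mem])
    # Never exceed the configured maximum number of nodes.
    return min(len(new_nodes), max(0, max_nodes - current_nodes))
```

A pod without requests counts as (0, 0) here and never triggers a new node, which is exactly the problem described in the conclusion below.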

Conclusion: for node autoscaling to work, requests must be set in the pods' containers so that k8s can correctly assess node load and, accordingly, detect that the cluster has no resources left to start the next pod.

Conclusion

Note that setting resource limits for a container is not required for the application to start successfully, but it is still better to do so for the following reasons:

- More accurate scheduling in terms of load balancing between k8s nodes

- A lower likelihood of pod eviction

- Horizontal autoscaling of application pods (HPA)

- Horizontal autoscaling of nodes (Cluster Autoscaler) with cloud providers