I know that I know nothing, but others do not know even that.

When I was writing my article about interfaces in JS using the example of the movie “The Transporter” with Jason Statham, I decided to use ChatGPT to help me with factual information: for example, how much the bag with the Chinese girl weighed, and where Frank Martin (Statham's character) was supposed to pick her up and deliver her. Although I rewatched the movie before writing the article, searching for the necessary scenes seemed tedious, so I decided to take a shortcut and ask ChatGPT. After all, everyone knows that AI will soon consign good old Google search to the dustbin of history.

However, the result disappointed me. In short, ChatGPT (like any LLM) works with probabilities, and it is very difficult, bordering on impossible, for it to admit that it does not know something. It will produce all sorts of garbage with very low plausibility, but it will not say, “Sorry dude, I don't know.” If you want the details, read on.

What is intelligence?

Without digging too deep, I will rely on a popular source, Wikipedia:

Intelligence … is a quality of the psyche consisting of the ability to perceive new situations, the ability to learn and remember from experience, the ability to understand and apply abstract concepts, and the ability to use one's knowledge to control the environment around one.

From this definition it follows that intelligence is a dynamic concept. It must reflect changes in the environment, be aware of them, compare them with previously acquired knowledge, and develop control actions capable of changing the environment.

What is an LLM?

Again, a reference to Wikipedia:

A large language model (LLM) is a language model consisting of a neural network with many parameters (usually billions of weights or more) trained on a large amount of unlabeled text using unsupervised learning.

Weights, roughly speaking (not so roughly described here), encode how likely it is that A is related to B. An LLM reads the input, turns it into tokens, and calculates the most likely relationships between the input and what it has “previously learned”, forming the most probable “output”.
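To make “forming the most probable output” a little more concrete, here is a minimal Python sketch of the last step only: turning scores into probabilities and picking the winner. It is an illustration under stated assumptions, not how any particular model is implemented. The names `softmax`, `pick_next_token` and `toy_scores` are mine, and the scores are invented; in a real LLM they would be computed by the network from its weights.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def pick_next_token(scores):
    """Greedy decoding: return the single most probable token."""
    probs = softmax(scores)
    return max(probs, key=probs.get)

# Hypothetical scores for the token following "The capital of France is".
# These numbers are made up for the example.
toy_scores = {"Paris": 6.0, "Lyon": 2.5, "London": 1.0, "banana": -3.0}

next_token = pick_next_token(toy_scores)
print(next_token)  # prints "Paris"
```

Note that `pick_next_token` always returns one of the candidates. Nothing in this step can express “none of the above”.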

Why an LLM is not intelligence

I periodically come across the following thought in comments under articles on LLMs: “we don't know exactly how natural intelligence works; it's quite possible that it works like this.” But intelligence is, by definition, able “to understand and apply abstract concepts” and “to use one's knowledge”. And this is precisely where LLMs have problems.

An ordinary person with natural intelligence can watch a film and answer questions about it. It is not difficult for the intellect to separate the facts of the film from the rest of its experience. And if a person is asked, “What color pantsuit did the Chinese girl Frank Martin carried in his bag wear?”, they will answer that the Chinese girl Frank Martin was carrying in his bag was wearing a white shirt and a beige skirt, not a pantsuit.

Here's how the various ChatGPT models answer the question:

Hi. Answer briefly and only what you are sure of. What color pantsuit did the Chinese girl who was transported in the bag of Frank Martin, the hero of the 2002 film “The Transporter” wear?

ChatGPT 3.5-turbo: The Chinese girl's pantsuit was blue.

ChatGPT 4-turbo: The Chinese girl's pantsuit in the movie “The Transporter” was orange.

ChatGPT 4o: White.

ChatGPT 4: In the movie “The Transporter” the Chinese girl Lai wore a pink pantsuit.

As you can see from the answers, the LLM hallucinates and is unable to register the very fact that the necessary information is absent. By its nature the model cannot be aware of anything; it only connects one thing with another based on statistical dependencies obtained from processing a huge volume of text. If that data contains any connections between a girl, a Chinese woman, a pantsuit, a bag, a transporter, and so on, the model will choose the most probable ones and build an answer from them. This is not about awareness, this is about big data.
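The difference between “knows” and “guesses” can be sketched in a few lines of Python. This is a toy illustration, not a measurement of any real model: both probability tables below are invented, and `entropy_bits` and `greedy_answer` are hypothetical helper names. The first table stands for a question the training data answers clearly, the second for a question where the data offers almost no preference.

```python
import math

def entropy_bits(probs):
    """Shannon entropy in bits: higher means the distribution is flatter."""
    return -sum(p * math.log2(p) for p in probs.values() if p > 0)

def greedy_answer(probs):
    """Return the most probable option, however weak its lead is."""
    return max(probs, key=probs.get)

# Invented probabilities over four candidate answers.
clear_case = {"white": 0.94, "blue": 0.02, "orange": 0.02, "pink": 0.02}
flat_case = {"white": 0.26, "blue": 0.25, "orange": 0.25, "pink": 0.24}

for name, probs in [("clear", clear_case), ("flat", flat_case)]:
    answer = greedy_answer(probs)
    print(f"{name}: answer={answer}, entropy={entropy_bits(probs):.2f} bits")
```

In both cases the answer is a confident-looking color. The uncertainty is visible in the entropy of the distribution (close to the 2-bit maximum for four options in the flat case), but it never reaches the text of the answer.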

Conclusion

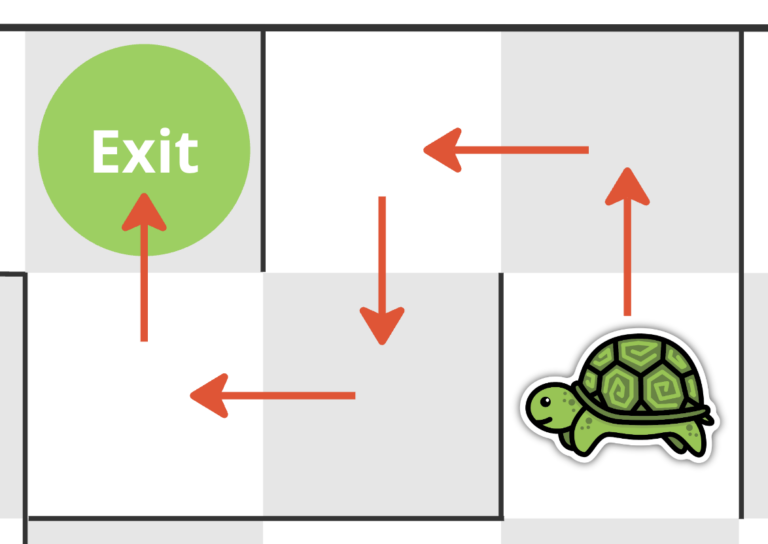

So far, AI in the form of LLMs is very far from natural intelligence. An LLM is currently a typical Chinese room: a set of instructions that John Searle follows when manipulating Chinese characters. These instructions record neither knowledge of any facts nor the ability to “understand and apply abstract concepts”, only statistically significant relationships between characters.

There are different ways to detect AI, but if I were conducting the Turing Test, I would ask questions that a human would have to answer with “I don't know.” AI still has a lot of trouble with this.