How we automated the entire server life cycle

Hello, Habr! My name is Alexei Nazarov. I work on automation in the infrastructure systems administration department at the National Payment Card System (NSPK JSC), and I would like to tell you a little about the internal products that help us grow.

If you have not yet read the post about our infrastructure, now is the time! Once you have read it, I would like to tell you about some of the internal products that we have developed and implemented.

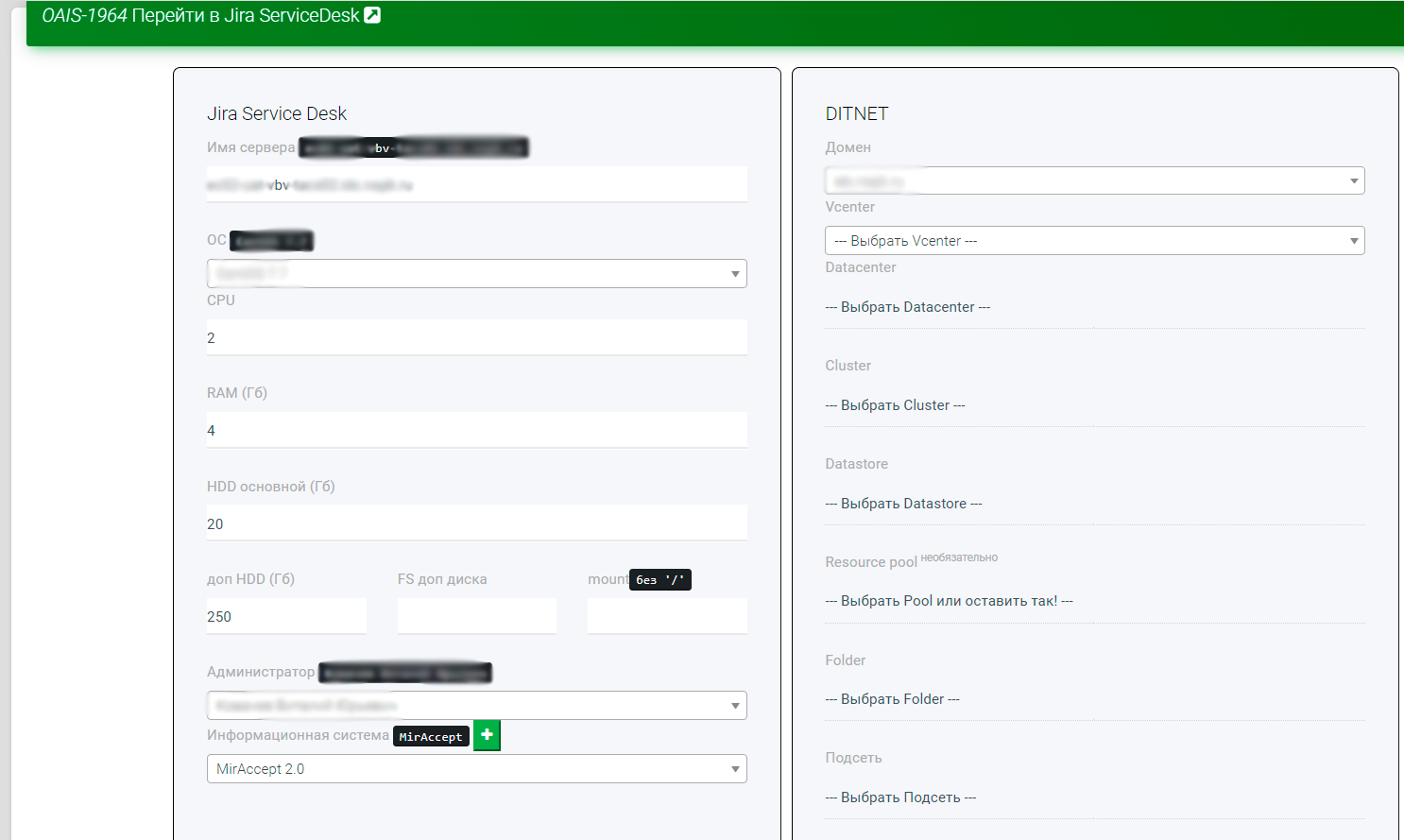

Our company, like any other, has its own regulations and business processes. One of them governs how we create a server or a server stand in response to a Jira ServiceDesk request. Every server has a functional administrator, i.e. an owner. Servers also have a status (Test, Productive, UAT, etc.). The status and other characteristics determine the segment, data center, datastore, network, etc. in which the server must be placed. So, to create a server you first need to create it in VMware, give it a name, IP address, DNS record and other important parameters, and then run an ansible-playbook against it.

The history of development

I joined NSPK in January 2015 and worked in the situation center as an on-duty Linux engineer. Our main responsibilities were creating and configuring servers and keeping them healthy. When the monitoring system showed any problems with the servers, people turned to us. To escalate, first-line support needed information about the server: its purpose, which system it belongs to, who owns it, etc. When critical triggers fired in the monitoring system, the first line had to describe the causes and the state of the system in detail. At that time we were the ones who held detailed information about the servers, since we were the ones who worked with them, so we also passed that information on to the first line.

To keep track of servers, we used an Excel file. To keep track of IP addresses, we used phpIPAM (https://phpipam.net/), an open source product for address space management. Some more information could be found in the monitoring system itself. We had no more than 700 servers.

In our department, employees are responsible for different areas: some handle virtualization and storage, others handle Windows, and we handle Linux. There is also a department for network engineers and database administrators.

Server creation was not streamlined. Productive servers were created on request, while test servers could be created without one. Servers were created manually, which meant:

- Find out which data center, datastore, network, etc. the server belongs in

- Create the server in the desired segment through vCenter

- Run bash scripts and some ansible-playbooks

- Add the correct server information to the Excel file

- Add the IP address to phpIPAM

- Close the request, if there was one

After some time, it became clear that we needed to create more and more servers, and we began to look for a system for storing server information and keeping track of servers.

There are many such systems on the Internet. Even phpIPAM can store server information. But in such systems it is inconvenient to view and analyze the state of servers in context. They lacked the fields and relations we needed, had no per-field filters like Excel, and had no clear separation of rights to view and edit particular servers.

I liked Python at the time and wanted to build something with Django, so I decided to write my own CMDB for the needs of the department and the company. Once it was in place, we decided to automate the process of creating and configuring servers. What came of it? More on this later …

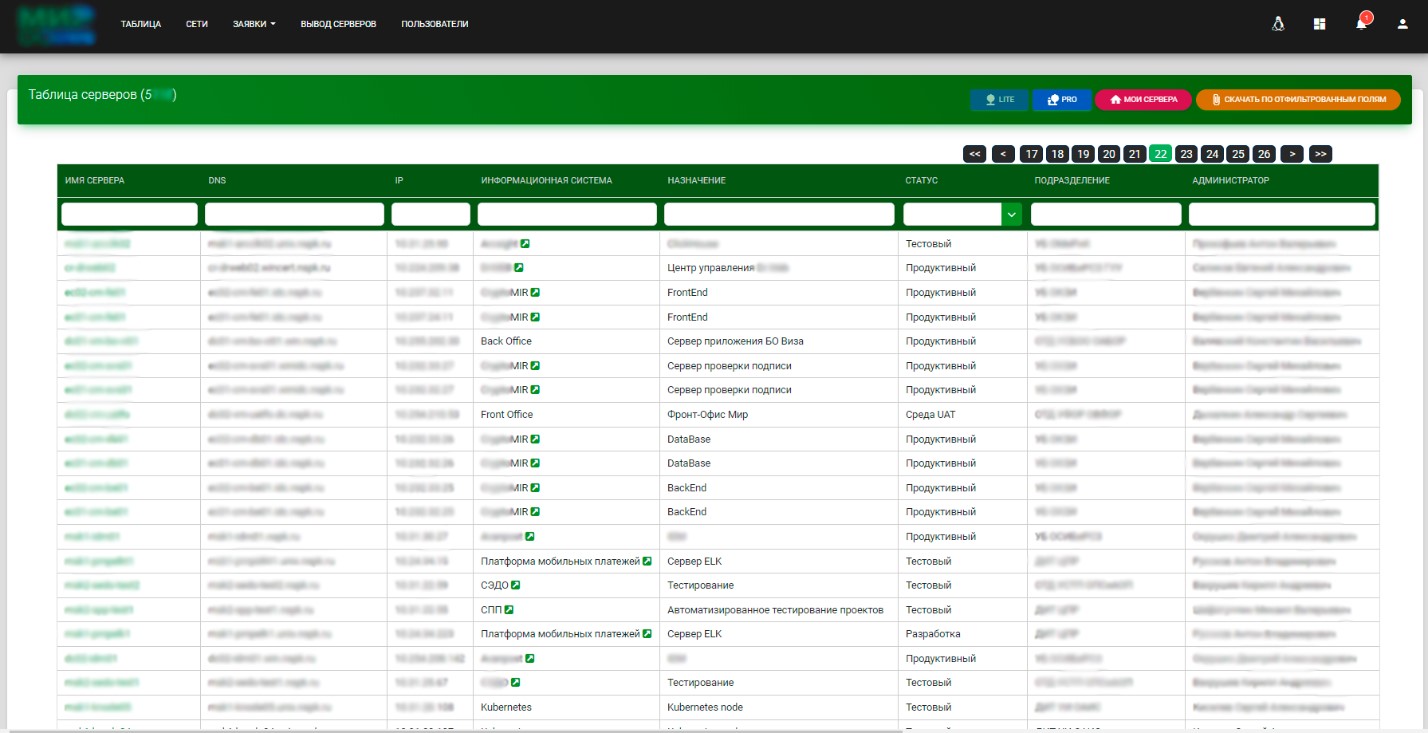

World of Servers

This is our primary system for storing server data. At the moment we have more than 5000 servers. The system is constantly being refined and integrated with business processes and other systems.

This is the main page:

On a server's page, you can see more detailed information, edit the fields, and view the history of changes.

In addition to storing server data, the World of Servers has the following functionality:

- Differentiation of access rights for viewing and editing data (by section, by division, by department)

- Convenient viewing of servers in tabular form, with filtering by any field and showing / hiding of fields

- A variety of email notifications

- Updating server information

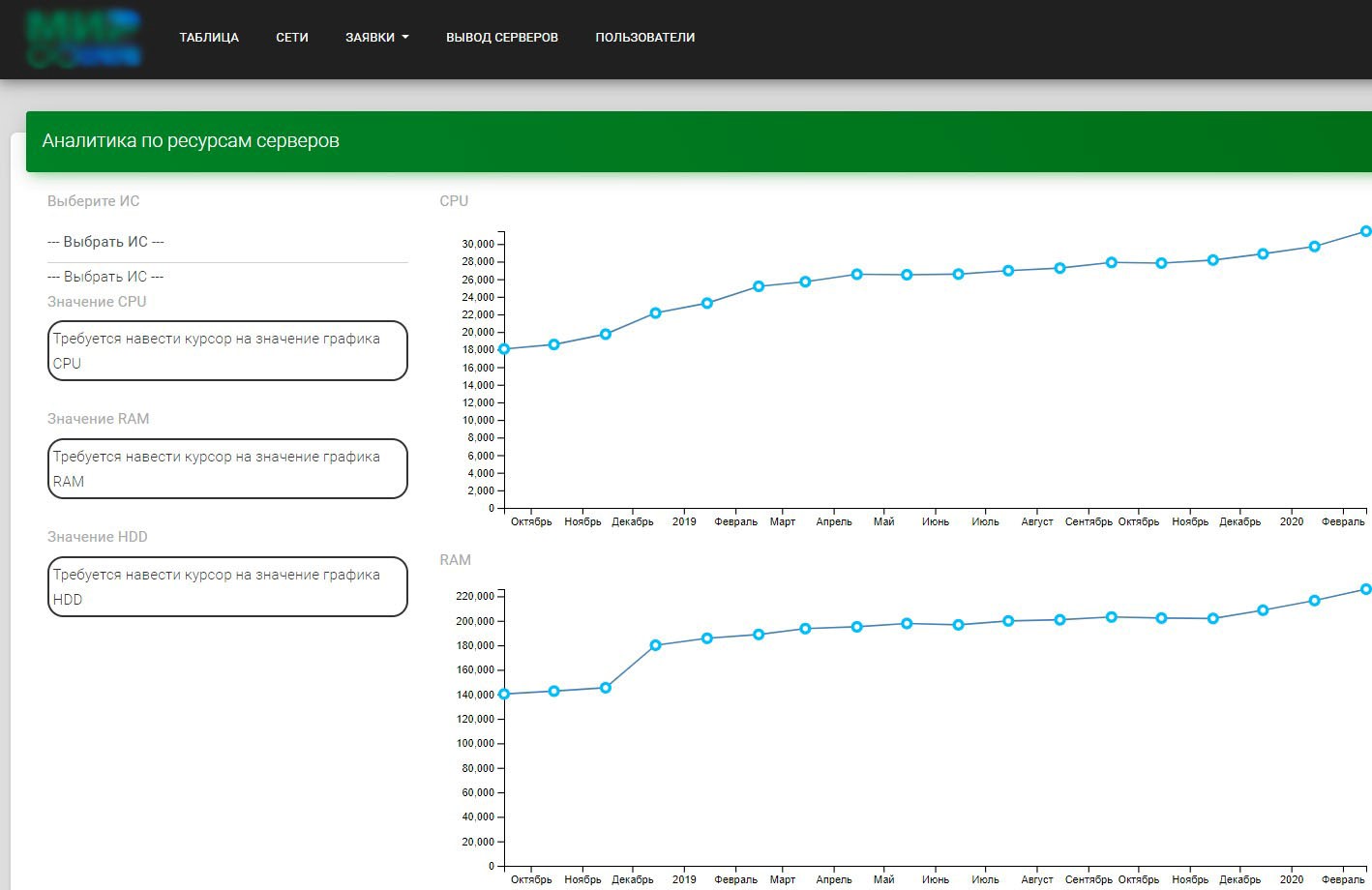

- Daily server data collection and storage for system resource analytics

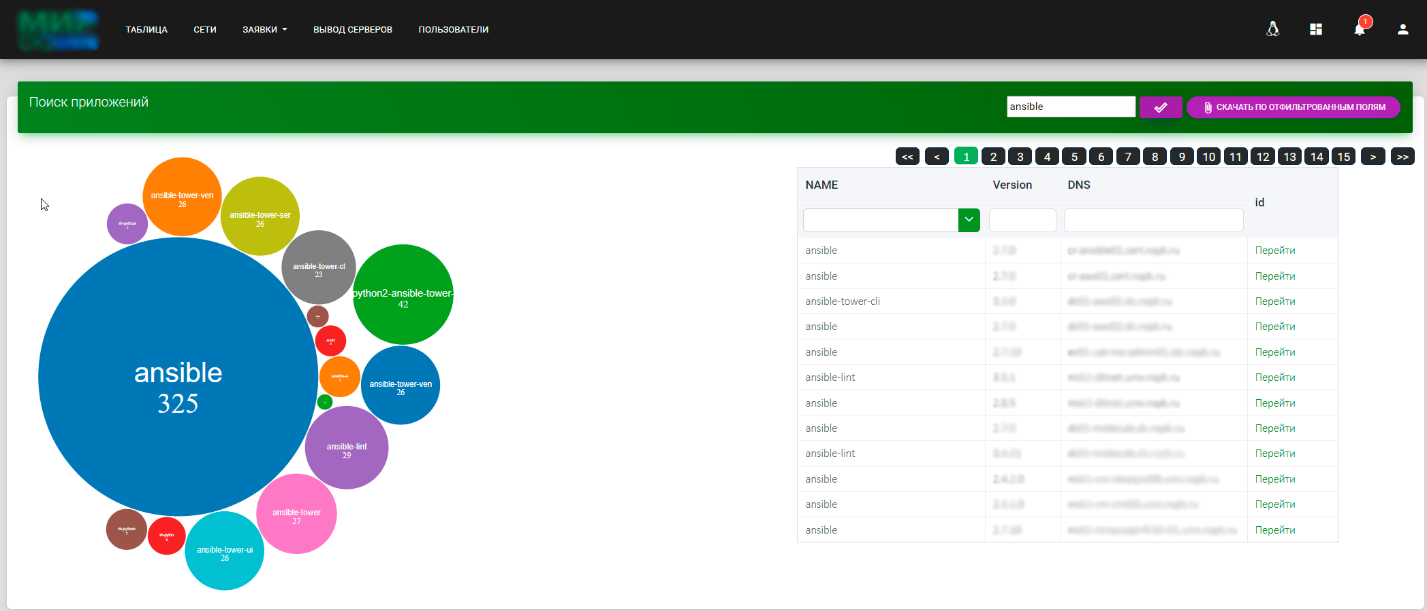

- Search and comparison of applications installed on servers

Integration of the World of Servers with other systems:

1) Automatic update of IP addresses in phpIPAM

2) Fulfillment of Jira ServiceDesk requests for a new server (server stand) through the World of Servers

3) Viewing the location of a physical server and checking that its information is filled in correctly in the dcTrack system (https://www.sunbirddcim.com/)

4) Transfer of server information through the REST API to Zabbix and other monitoring systems

5) Transfer of information about software installed on servers through the REST API for the needs of information security

6) Synchronization of server owners from 1C and Active Directory to obtain each employee's name, work email, affiliation and status. This data is needed to separate access rights and to automatically notify server owners of a number of events related to their servers.

DitNet

Our infrastructure currently spans more than 10 data centers. Given the PCI-DSS requirements, it is clear that the World of Servers cannot itself create and configure a server in every segment.

Therefore, when fulfilling a request for a server, we generate JSON with the data needed to create it in the VMware environment. The JSON is transferred over secure rsync or FTPS, depending on the segment.
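As a rough illustration of this hand-off, here is a minimal sketch of serializing one request to JSON and placing it in a per-segment outgoing folder. The field names, the `SEGMENT_FOLDERS` mapping, the domains and both function names are hypothetical and are not taken from our code; the transfer itself (rsync or FTPS) is left out.

```python
import json
import os
import tempfile
from pathlib import Path

# Hypothetical mapping from a server's domain to the outgoing folder of a segment.
SEGMENT_FOLDERS = {
    "test.example.local": "segment-test",
    "prod.example.local": "segment-prod",
}


def segment_folder(fqdn, base_dir):
    """Pick the outgoing folder by the domain part of the server's FQDN."""
    _, _, domain = fqdn.partition(".")
    if domain not in SEGMENT_FOLDERS:
        raise ValueError(f"no segment configured for domain {domain!r}")
    return Path(base_dir) / SEGMENT_FOLDERS[domain]


def write_vm_request(request, base_dir):
    """Serialize one VM request to <segment folder>/<fqdn>.json.

    The data is first written to a temporary file in the same folder and
    then renamed, so the final *.json name only ever refers to a complete file.
    """
    folder = segment_folder(request["fqdn"], base_dir)
    folder.mkdir(parents=True, exist_ok=True)
    target = folder / f"{request['fqdn']}.json"
    fd, tmp_name = tempfile.mkstemp(dir=folder, suffix=".tmp")
    with os.fdopen(fd, "w", encoding="utf-8") as handle:
        json.dump(request, handle, indent=2, sort_keys=True)
    os.replace(tmp_name, target)
    return target
```

A call such as `write_vm_request({"fqdn": "app01.test.example.local", "ip": "192.168.1.10", "network": "192.168.1.0|24_test2"}, "/tmp/outgoing")` would produce one file per server, which a transfer job can then pick up by the `.json` suffix.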

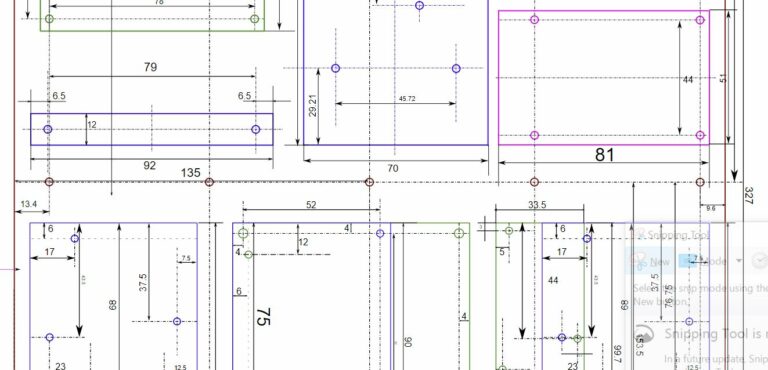

It should be noted that our department has done a lot of work here. We removed bashsible, reworked Ansible into idempotent roles for server configuration, set up molecule (https://molecule.readthedocs.io/), unified all VMware artifacts and much more. The standardization of VMware artifacts was needed mostly for the server subnets in all data centers (we already have more than 900 of them).

For example, a Distributed Switch that used to be called “test2” is now called 192.168.1.0 | 24_test2. The renaming was needed so that subnets from phpIPAM and VMware could be matched when the JSON is generated.

Fulfillment of requests for servers:
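To show why such a naming scheme makes matching straightforward, here is a minimal sketch that parses a name of this form into a network and a label, and then pairs phpIPAM subnets with VMware names. It assumes the pattern `<address>|<prefix length>_<label>` (with optional spaces around `|`) and that phpIPAM subnets are available as CIDR strings; the function names are hypothetical.

```python
import ipaddress
import re

# Matches names such as "192.168.1.0|24_test2", tolerating spaces around "|".
_NAME_RE = re.compile(r"^\s*(\d{1,3}(?:\.\d{1,3}){3})\s*\|\s*(\d{1,2})_(.+?)\s*$")


def parse_network_name(name):
    """Return (network, label) for a standardized name, or None if it does not fit."""
    match = _NAME_RE.match(name)
    if match is None:
        return None
    address, prefix, label = match.groups()
    try:
        return ipaddress.ip_network(f"{address}/{prefix}"), label
    except ValueError:
        # Not a valid network, e.g. an octet above 255 or host bits set.
        return None


def match_subnets(ipam_cidrs, vmware_names):
    """Map each phpIPAM subnet (CIDR string) to the VMware name for it, or None."""
    by_network = {}
    for name in vmware_names:
        parsed = parse_network_name(name)
        if parsed is not None:
            by_network[parsed[0]] = name
    return {cidr: by_network.get(ipaddress.ip_network(cidr)) for cidr in ipam_cidrs}
```

Names that do not follow the scheme, such as the old “test2”, are simply skipped, so any subnet that maps to `None` points at an artifact that still needs renaming.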

1) DitNet collects all artifacts from VMware (clusters, datastores, networks, templates, etc.) daily or on request, packs the information into JSON and sends it to the World of Servers

2) The World of Servers receives the data and populates its database with the VMware artifacts

3) The World of Servers has a page that queries Jira ServiceDesk and, using a JQL query, retrieves the list of server requests with the status “Queue”. On this page, the executor fills in a table with VMware artifacts and other resources (Figure below). Part of the table is filled in automatically from the data specified in the request.

4) After the table is filled in and the “Create” button is clicked, the request's status in Jira ServiceDesk changes to “In Work”

5) At this point, the World of Servers generates JSON with the data for creating the VM (artifacts, DNS, IP, etc.) and places it in the folder for the relevant segment (determined by the server's domain)

6) Each DitNet in its segment picks up the data from its folder and adds the servers to be installed to its database table. The table has additional fields for the installation status (default: “ready to install”)

7) On DitNet, Celery beat runs every 5 minutes and uses the installation status to determine which servers need to be installed and configured

8) A Celery worker starts several sequential tasks:

a. Create the server in VMware (using the pyvmomi library)

b. Download or update the server setup project from GitLab

c. Run the ansible-playbook (using this guide: https://docs.ansible.com/ansible/latest/dev_guide/developing_api.html)

d. Run Molecule

e. Send email to the executor and to the World of Servers about the execution status

9) After each task, the status is checked. If all tasks have completed, we notify the executor and include a generated link for closing the Jira ServiceDesk request. If any task has failed, we notify the executor and attach the VMware or Ansible log.
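The control flow of steps 7 to 9 can be sketched in plain Python, without Celery, as follows. This is only an illustration of "select by status, run tasks in sequence, stop at the first failure"; apart from “ready to install”, the status values, the dictionary fields and the function names are assumptions, and the real steps (pyvmomi, Ansible, Molecule, mail) are represented by arbitrary callables.

```python
READY = "ready to install"   # default status from the text
DONE = "installed"           # hypothetical status value
FAILED = "failed"            # hypothetical status value


def select_ready(servers):
    """What the periodic job does: pick the servers that still need installing."""
    return [server for server in servers if server["status"] == READY]


def run_install(server, steps):
    """Run (name, callable) steps in order and stop at the first failure.

    On failure the server record keeps the failed step name and the error
    text, which is what a notification to the executor would be built from.
    """
    for name, step in steps:
        try:
            step(server)
        except Exception as exc:  # any step error aborts the whole chain
            server["status"] = FAILED
            server["failed_step"] = name
            server["log"] = f"{name}: {exc}"
            return False
    server["status"] = DONE
    return True
```

In a Celery-based setup, `select_ready` would correspond to the periodic beat task and `run_install` to the chain of worker tasks; keeping the failed step name on the record makes it easy to decide which log to send.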

What else DitNet can do at the moment:

- Collects all data and resources from all servers. For this task we use Ansible with the setup module. On the hosts, in addition to local facts, we also use custom ones. Before each run, we build an inventory for Windows and Linux.

- Collects SNMP information about physical servers. We scan specific subnets and get the serial number, BIOS version, IPMI version, etc.

- Gathers information about server groups in FreeIPA (HBAC, SUDO rules) and about groups in Active Directory, in order to collect and monitor the role model of user access to information systems

- Reinstalls servers

- And there are cats in the background. Figure below:

All the information that DitNet collects is sent to the World of Servers, where all the analytics and server data updates take place.

How we update

I am no longer the only one working on the World of Servers and DitNet. There are now three of us.
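The inventory step mentioned in the list above could look roughly like this. The sketch groups hosts into `linux` and `windows` and returns a dictionary in the JSON layout that Ansible accepts from inventory scripts (groups with `hosts` and `vars`, plus `_meta.hostvars`). The input record fields (`fqdn`, `ip`, `os_family`) and the function name are hypothetical, and treating every non-Windows host as Linux is a simplification.

```python
import json


def build_inventory(servers):
    """Group hosts by OS family in Ansible's JSON inventory-script layout."""
    inventory = {
        "linux": {"hosts": []},
        # Windows hosts are typically reached over WinRM rather than SSH.
        "windows": {"hosts": [], "vars": {"ansible_connection": "winrm"}},
        "_meta": {"hostvars": {}},
    }
    for server in servers:
        group = "windows" if server["os_family"].lower() == "windows" else "linux"
        inventory[group]["hosts"].append(server["fqdn"])
        inventory["_meta"]["hostvars"][server["fqdn"]] = {"ansible_host": server["ip"]}
    for group in ("linux", "windows"):
        inventory[group]["hosts"].sort()
    return inventory


def dump_inventory(servers):
    """Serialize the inventory as JSON text."""
    return json.dumps(build_inventory(servers), indent=2, sort_keys=True)
```

Rebuilding the inventory from the server database before each run means that newly created or reinstalled servers are picked up without editing any static inventory file.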

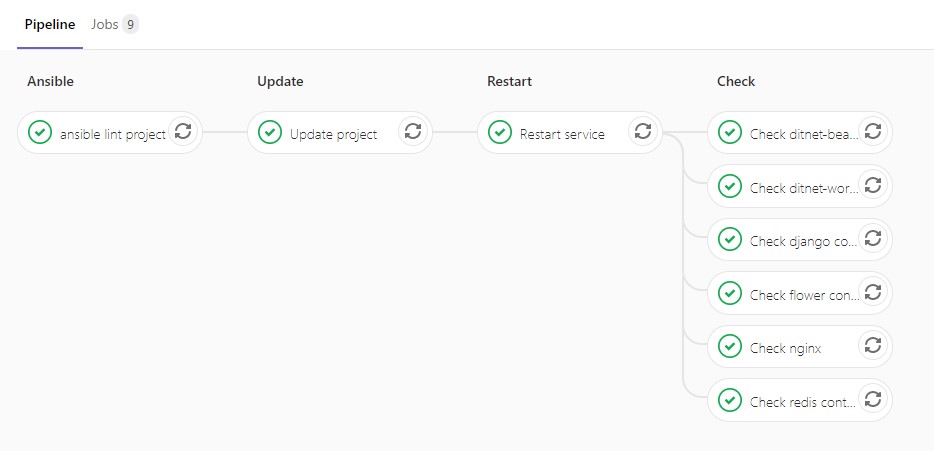

All source code is stored in our GitLab for convenient parallel development. Each project has its own Ansible playbook, which GitLab CI runs to update the application. Pipeline:

The pipeline shows that we are missing unit tests, but I think we will fix that in the near future.

The Ansible playbook can also be launched via Ansible Tower (AWX) on new servers if a fresh installation is required.

In the case of DitNet, we use Docker to deliver the necessary libraries to all segments. The setup is described with docker-compose, and the docker-compose services are wrapped in systemd units.

Planned in the future

- Automatic fulfillment of server installation requests without an executor

- Scheduled automatic server updates

- Adding storage entities to the World of Servers and automatic data collection

- Collection of information about all components of physical servers and sending it to the World of Servers, to keep track of spare parts for servers

- Automatic notification when a server owner leaves the company, so that their servers can be reassigned to a new owner

- Continued integration with other company systems

… and much more!

P.S. Thank you for your time! Criticism and comments are welcome!