How Uma.Tech developed its infrastructure

Five years is a natural point for taking stock of interim results. So we decided to describe how our infrastructure has developed over that time; it has been a remarkably interesting journey, and one we are proud of. The quantitative changes we made have turned into qualitative ones: the infrastructure can now operate in modes that seemed like fantasy in the middle of the last decade.

We support some of the most demanding projects, with the strictest requirements for reliability and load, including PREMIER and Match TV. Sports broadcasts and premieres of popular TV series require serving traffic at terabits per second. We handle this easily, and so often that working at such speeds has long been routine for us. Five years ago, the most demanding project running on our systems was Rutube, which has since grown in both volume and traffic, and we had to account for that when planning load.

We have already written about how we developed the hardware of our infrastructure (“Rutube 2009-2015: the history of our hardware”) and built the system responsible for serving video (“From zero to 700 gigabits per second – how one of the largest video hosting sites in Russia is uploading video”). A lot of time has passed since those articles were written, however, and many other solutions have been created and deployed since. Their results let us meet modern requirements and stay flexible enough to adapt to new tasks.

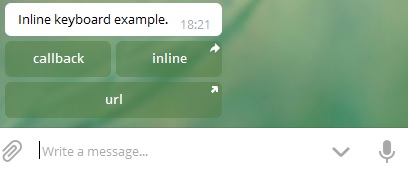

The network core is constantly evolving. We switched to Cisco equipment in 2015, as mentioned in the previous article. Back then it was still all 10/40G, but, for obvious reasons, a few years later we upgraded the existing chassis, and now we actively use 25/100G as well.

100G links have long been neither a luxury (in our segment they are rather a pressing requirement of the times) nor a rarity (more and more operators offer connections at such speeds). However, 10/40G remains relevant: we continue to use these links to connect operators with low traffic volumes, for whom a higher-capacity port is currently not worthwhile.

The network core we have built deserves separate consideration and will be the subject of its own article a little later. There we will go into the technical details and explain the reasoning behind our decisions. For now, we will keep sketching the infrastructure in broader strokes, since your attention, dear readers, is not unlimited.

Video-serving servers are evolving quickly, and we put a lot of effort into this. Where we previously used mostly 2U servers with 4-5 network cards of two 10G ports each, most traffic is now served from 1U servers with 2-3 cards of two 25G ports each. 10G and 25G cards cost almost the same, and the faster cards can serve at both 10G and 25G. The result is a clear saving: fewer server components and cables mean lower cost (and higher reliability), and components take up less rack space, so more servers fit per unit of floor space and rental costs go down.

But the gain in speed matters even more! We can now serve over 100G from 1U! And this is at a time when some large Russian projects call serving 40G from 2U an “achievement”. We wish we had their problems!
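The density comparison above comes down to simple port arithmetic. The sketch below is an illustration only: it sums nominal port speeds for the configurations mentioned in the text (the `ServerProfile` class and its field names are hypothetical), and nominal line rate is an upper bound, not a measured throughput.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerProfile:
    """Nominal network configuration of one video-serving server (illustrative)."""
    name: str
    rack_units: int      # chassis height in U
    cards: int           # number of network cards
    ports_per_card: int  # ports on each card
    port_gbps: int       # nominal speed of one port, Gbit/s

    def line_rate_gbps(self) -> int:
        """Sum of nominal port speeds; real throughput will be lower."""
        return self.cards * self.ports_per_card * self.port_gbps

    def gbps_per_unit(self) -> float:
        """Nominal line rate per rack unit occupied."""
        return self.line_rate_gbps() / self.rack_units


# Card counts are the ends of the ranges given in the text (4-5 and 2-3).
profiles = [
    ServerProfile("2U, 4 x dual 10G", 2, 4, 2, 10),
    ServerProfile("2U, 5 x dual 10G", 2, 5, 2, 10),
    ServerProfile("1U, 2 x dual 25G", 1, 2, 2, 25),
    ServerProfile("1U, 3 x dual 25G", 1, 3, 2, 25),
]

for p in profiles:
    print(f"{p.name}: {p.line_rate_gbps()} Gbit/s nominal, "
          f"{p.gbps_per_unit():.0f} Gbit/s per U")
```

Under these assumptions the older 2U servers top out at 80-100 Gbit/s of nominal port capacity (40-50 Gbit/s per rack unit), while the 1U servers reach 100-150 Gbit/s in half the space.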

Note that we still use the generation of network cards that can only work at 10G. This equipment works reliably and we know it well, so instead of throwing it away we found a new use for it. We installed these cards in the video storage servers, where one or two 1G interfaces are clearly not enough for efficient operation; here the 10G cards turned out to be just right.

Storage systems are growing too. Over the past five years they have gone from 12-disk chassis (12x HDD, 2U) to 36-disk chassis (36x HDD, 4U). Some people are wary of such high-capacity chassis, because the failure of one of them can threaten the performance, and even the availability, of the entire system. That will not happen to us: we provide redundancy at the level of geo-distributed copies of the data. We spread the chassis across different data centers (we use three in total), which rules out problems both when a chassis fails and when an entire site goes down.

Naturally, this approach made hardware RAID redundant, so we dropped it. By removing this extra layer of redundancy we actually increased the reliability of the system: the solution became simpler and lost one potential point of failure. Recall that our storage systems are built in-house. We made that choice quite deliberately, and we are fully satisfied with the result.
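To make the idea of geo-distributed copies concrete, here is a minimal sketch of placing each object's copies in distinct data centers and checking that a chassis or site failure leaves at least one copy reachable. Everything in it is an assumption made for illustration: the article does not describe the actual placement algorithm or the number of copies, and the data center and chassis names, the hash-based selection, and the default of two copies are all hypothetical.

```python
import hashlib


def _score(object_id: str, node: str) -> int:
    """Deterministic pseudo-random score for an (object, node) pair."""
    digest = hashlib.sha256(f"{object_id}:{node}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place_copies(object_id, chassis_by_dc, copies=2):
    """Pick one chassis in each of `copies` distinct data centers."""
    if copies > len(chassis_by_dc):
        raise ValueError("not enough data centers for the requested copies")
    # Rank data centers by score, then pick the best-scoring chassis in each.
    ranked = sorted(chassis_by_dc, key=lambda dc: _score(object_id, dc),
                    reverse=True)
    return {
        dc: max(chassis_by_dc[dc], key=lambda c: _score(object_id, c))
        for dc in ranked[:copies]
    }


def survives(placement, failed_dc=None, failed_chassis=None):
    """True if at least one copy remains after the given failure."""
    return any(dc != failed_dc and chassis != failed_chassis
               for dc, chassis in placement.items())


# Hypothetical layout: three sites with two storage chassis each.
layout = {
    "dc-a": ["a-chassis-1", "a-chassis-2"],
    "dc-b": ["b-chassis-1", "b-chassis-2"],
    "dc-c": ["c-chassis-1", "c-chassis-2"],
}

placement = place_copies("video-123", layout)
print(placement)
print(all(survives(placement, failed_dc=dc) for dc in layout))
```

Because no two copies share a data center, losing any single chassis or any single site still leaves a copy available, which is the property that lets the redundancy live above the chassis rather than in a RAID controller inside it.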

We have changed data centers several times over the past five years. Since the previous article was written, only one data center, DataLine, has stayed the same; the rest had to be replaced as our infrastructure grew. All moves between sites were planned.

Two years ago we migrated within MMTS-9, moving to premises with a good-quality fit-out, good cooling, a stable power supply and no dust, which used to lie in thick layers on every surface and heavily clog the insides of our equipment. Choosing quality service (and no dust!) was the reason for the move.

Almost always “one move equals two fires”, but the problems are different with every migration. This time, the main difficulty of moving within a single data center was “provided” by the optical cross-connects: there were a great many of them running between floors, and the telecom operators had not consolidated them into a single cross-connect. Updating and re-routing the cross-connects (with the help of MMTS-9 engineers) was perhaps the hardest stage of the migration.

The second migration took place a year ago: in 2019 we moved from M77 to O2xygen. The reasons were similar to those discussed above, compounded by the original data center being unattractive to telecom operators; many providers had to “reach” that site on their own.

Migrating 13 racks to a high-quality site in MMTS-9 let us develop this location not just as an operator site (a couple of racks and operator cross-connects) but as one of our main ones. This made the migration from M77 somewhat simpler: we moved most of the equipment from that data center to another site, while O2xygen was given the role of a developing site, and we sent 5 racks of equipment there.

Today O2xygen is a full-fledged site: the operators we need have “arrived” there, and new ones keep connecting. For operators, O2xygen also proved attractive from the standpoint of strategic development.

We always carry out the main phase of a move in a single night, and we kept to this rule both when migrating within MMTS-9 and when moving to O2xygen. We stress that we strictly follow the “move in one night” rule regardless of the number of racks! There was even a case when we moved 20 racks and still did it in one night. Migration is a fairly simple process that demands care and consistency, but there are some tricks involved in the preparation, in the move itself, and in deployment at the new location. We are ready to describe migration in detail if you are interested.

Results

We like five-year development plans. We have finished building a new resilient infrastructure spread across three data centers. We have dramatically increased the density of traffic delivery: not long ago we were pleased with 40-80G from 2U, and now serving 100G from 1U is normal for us. A terabit of traffic is now routine for us too. We are ready to keep developing our infrastructure, which has turned out to be flexible and scalable.

A question for you, dear readers: what should we cover in upcoming articles? Why we started building our own storage systems? The network core and its features? The tricks and subtleties of migrating between data centers? Optimizing delivery solutions through component selection and parameter tuning? Building resilient solutions through multiple redundancy and horizontal scalability within a data center, implemented across a structure of three data centers?

Author: Petr Vinogradov