How to become a DevOps engineer in six months or even faster. Part 2: Configuration

A quick refresher

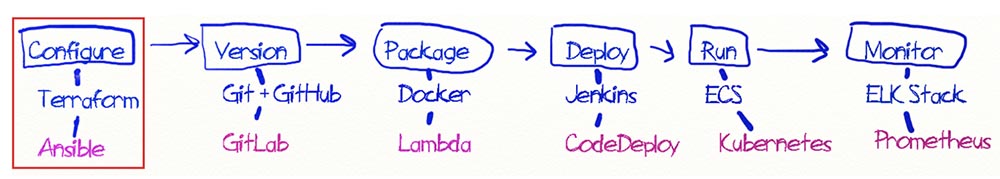

In the first part, I argued that a DevOps engineer’s job is to build fully automated digital pipelines that move code from the developer’s machine to production. Doing this job well requires a solid grasp of the fundamentals (an operating system, a programming language and a cloud platform). It also requires a good understanding of the tools and skills built on top of them.

As a reminder, your goal is to study the blue items first, from left to right, and then the purple items, also from left to right. Now let’s look at the first of the six months of training, which is devoted to configuration.

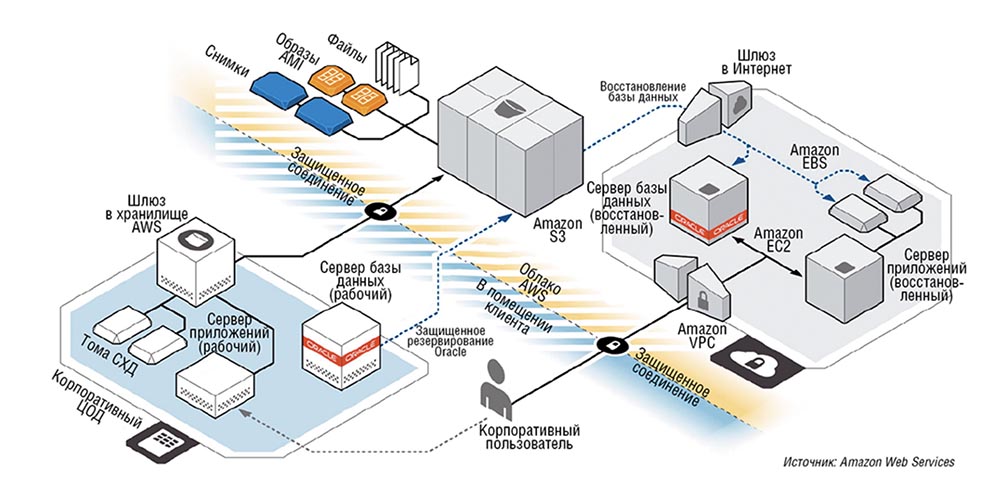

What happens at the configuration stage? The code we write needs machines to run on, so the configuration stage is where we create the infrastructure that runs it.

In the past, provisioning infrastructure was a long, labor-intensive and error-prone ordeal. Now that we have the cloud, everything can be provisioned with the click of a button, or at least a few clicks. However, clicking buttons to do this turns out to be a bad idea, because it means:

- it is error-prone (people make mistakes);

- it cannot be versioned (button clicks cannot be stored in a Git repository);

- it is not repeatable (more machines = more clicks);

- it cannot be tested (I have no idea whether my clicks will actually work or break everything).

For example, think about all the work needed to provision your dev environment … then an int environment … then QA … then staging … then prod in the US … then prod in the EU … It quickly becomes tedious and annoying. So instead of clicking through it by hand, we use an approach called “infrastructure as code.” That approach is the subject of this configuration stage.

“Infrastructure as code” means configuring systems through configuration files rather than by editing settings on servers manually or interactively. The description of the infrastructure can be declarative or scripted. Under DevOps best practices, infrastructure as code requires that all the work of provisioning computing resources be done in code, and only in code. By “computing resources” I mean everything the application needs to run correctly in prod: compute, storage, networking, databases and so on. Hence the name: “infrastructure as code.”
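Here is a rough illustration of “one definition, many environments.” This minimal Python sketch (plain Python, not Terraform) generates every environment from the list above with the same function. The `Resource` type, the sizes and the instance counts are hypothetical placeholders chosen only for the example.

```python
from dataclasses import dataclass

# Hypothetical resource description; a real tool such as Terraform
# expresses this in its own configuration language.
@dataclass(frozen=True)
class Resource:
    kind: str  # e.g. "vm" or "database"
    name: str
    size: str

ENVIRONMENTS = ["dev", "int", "qa", "staging", "prod-us", "prod-eu"]

def desired_state(env: str) -> frozenset[Resource]:
    """Return the declarative description of one environment.

    Every environment comes from the same code, so environments differ
    only in the parameters chosen here (all values are illustrative).
    """
    is_prod = env.startswith("prod")
    vm_count = 4 if is_prod else 1
    size = "large" if is_prod else "small"
    resources = {Resource("vm", f"{env}-app-{i}", size) for i in range(vm_count)}
    resources.add(Resource("database", f"{env}-db", size))
    return frozenset(resources)

if __name__ == "__main__":
    for env in ENVIRONMENTS:
        print(env, sorted(r.name for r in desired_state(env)))
```

Because the description is ordinary code, it can live in Git, be reviewed in a pull request and be regenerated identically any number of times. That addresses each problem with clicking listed earlier.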

In other words, instead of clicking our way through provisioning infrastructure by hand, we will:

- describe the desired state of the infrastructure in Terraform;

- store it in our version control system;

- put it through a formal pull request process to get feedback;

- test it;

- run it to provision all the resources the system needs.
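The “describe the desired state, then apply it” loop can be sketched as a toy planner. It compares what should exist with what does exist and works out what to create, update and destroy. This is loosely similar to what `terraform plan` reports. It only illustrates the declarative idea and is not how Terraform works internally. The resource names and the settings format are hypothetical.

```python
from typing import NamedTuple

class Plan(NamedTuple):
    create: list[str]
    update: list[str]
    destroy: list[str]

def plan(desired: dict[str, dict], actual: dict[str, dict]) -> Plan:
    """Work out the changes that turn `actual` into `desired`.

    Both arguments map a resource name to its settings (a hypothetical format).
    """
    create = sorted(desired.keys() - actual.keys())
    destroy = sorted(actual.keys() - desired.keys())
    update = sorted(
        name for name in desired.keys() & actual.keys()
        if desired[name] != actual[name]
    )
    return Plan(create, update, destroy)

def apply(desired: dict[str, dict], actual: dict[str, dict]) -> dict[str, dict]:
    """Simulate applying the plan; returns the new state without mutating `actual`."""
    changes = plan(desired, actual)
    new_state = dict(actual)
    for name in changes.destroy:
        del new_state[name]
    for name in changes.create + changes.update:
        new_state[name] = dict(desired[name])
    return new_state

if __name__ == "__main__":
    desired = {"web-1": {"size": "small"}, "db": {"engine": "postgres"}}
    actual = {"web-1": {"size": "micro"}, "old-worker": {"size": "small"}}
    print(plan(desired, actual))
    # Plan(create=['db'], update=['web-1'], destroy=['old-worker'])
    print(plan(desired, apply(desired, actual)))
    # Plan(create=[], update=[], destroy=[])
```

Planning again against the applied state yields an empty plan. This idempotence is what makes a declarative description safe to apply repeatedly.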

Why this and not that?

The obvious question is: “Why Terraform? Why not Chef, Puppet, Ansible, CFEngine, Salt or CloudFormation?” Good question! Barrels of virtual ink have been spilled debating it. I think you should learn Terraform because:

- it is very popular, so there are plenty of jobs that ask for it;

- it is relatively easy to learn;

- it is cross-platform.

Of course, you could pick any of the others and succeed as well. I do want to point out one thing, though. This field is evolving quickly and somewhat chaotically, but I see a clear pattern. Traditionally, tools like Terraform and CloudFormation were used to provision infrastructure, while Ansible and similar tools were used to configure it.

You can think of Terraform as the tool that lays the foundation and Ansible as the crane that builds the house on top of it. Deploying the application is then the roof (and that can also be done with Ansible).

In other words, you create your virtual machines with Terraform and then use Ansible to configure the servers and deploy your applications. That is the traditional division of labor.

However, Ansible can do much (if not all) of what Terraform can do, and the reverse is also true. Don’t let this bother you. Just know that Terraform is one of the industry’s leading infrastructure-as-code tools, so I highly recommend starting with it.

In fact, Terraform + AWS experience is one of the most sought-after DevOps engineering skills. But even if you skip Ansible in favor of Terraform, you still need to know how to configure large numbers of servers programmatically, right? Not necessarily!

Immutable Deployment

I predict that configuration management tools such as Ansible will become less important, while infrastructure provisioning tools such as Terraform or CloudFormation will become more important. The reason is a practice called “immutable deployment.” Simply put, it means never changing deployed infrastructure. Your unit of deployment is a virtual machine or a Docker container, not a piece of code.

In this model, you do not deploy code to a set of static virtual machines. You deploy whole virtual machines with the code baked into them. You do not change the configuration of existing virtual machines; you deploy new virtual machines with the desired configuration. You do not patch prod machines; you deploy new machines that already contain the fix.
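Below is a minimal Python sketch of the replace-don’t-patch idea. It uses a simulated fleet, so no real cloud API is called. Instances are frozen and cannot be modified in place, so both a fix and a rollback are simply new deployments from an image. The image names such as `app:v2` are hypothetical.

```python
from dataclasses import dataclass
from itertools import count

_next_id = count(1)  # simulated instance IDs

@dataclass(frozen=True)
class Instance:
    id: int
    image: str  # machine or container image with the code baked in

def launch(image: str, n: int) -> list[Instance]:
    """Start n brand-new instances from an image (simulated, no cloud calls)."""
    return [Instance(next(_next_id), image) for _ in range(n)]

def deploy(old_fleet: list[Instance], new_image: str) -> list[Instance]:
    """Immutable deployment: replace the fleet instead of patching it.

    The old instances are never modified. In a real system they would be
    terminated once the new ones pass health checks.
    """
    return launch(new_image, len(old_fleet))

if __name__ == "__main__":
    v1 = launch("app:v1", 3)
    v2 = deploy(v1, "app:v2")    # a fix ships as a new image, not a patch
    back = deploy(v2, "app:v1")  # a rollback is just another deployment
    print([i.image for i in v2], [i.image for i in back])
```

Assigning to `image` on an existing instance raises `dataclasses.FrozenInstanceError`. This mirrors the rule itself: to change a machine, replace it.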

You do not run one kind of virtual machine in dev and a different kind in prod; they are all the same. In fact, you can safely disable SSH access to every prod machine, because there is nothing to do there: no settings to change, no logs to view (more on logs later). Used correctly, this is a very powerful pattern, and I highly recommend it!

Immutable deployments require configuration to be kept separate from your code. Please read The Twelve-Factor App manifesto, which covers this (and other great ideas!) in detail. It is a must-read for anyone practicing DevOps.

Separating code from configuration is very important, because you do not want to redeploy the entire application stack every time you rotate your database passwords. Instead, have your applications fetch them from an external configuration store (SSM, Consul, etc.). You can also see how immutable deployments make tools like Ansible less prominent. You only need to configure one server image and then deploy it many times as part of your auto scaling group.
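One common way to keep configuration out of the code is to read it from environment variables at startup, which is what The Twelve-Factor App recommends. The sketch below assumes that mechanism. The variable names `DB_HOST`, `DB_PASSWORD` and `LOG_LEVEL` are hypothetical. How values get from a store such as SSM or Consul into the environment is outside its scope.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional

REQUIRED_KEYS = ("DB_HOST", "DB_PASSWORD")  # hypothetical variable names

@dataclass(frozen=True)
class AppConfig:
    db_host: str
    db_password: str
    log_level: str

def load_config(env: Optional[Mapping[str, str]] = None) -> AppConfig:
    """Build the app's configuration from environment variables.

    The same image runs in dev, QA and prod; only these values differ.
    Pass a dict as `env` to test without touching the real environment.
    """
    env = os.environ if env is None else env
    missing = [key for key in REQUIRED_KEYS if key not in env]
    if missing:
        # Fail fast at startup instead of at the first database call.
        raise RuntimeError(f"missing required config: {', '.join(missing)}")
    return AppConfig(
        db_host=env["DB_HOST"],
        db_password=env["DB_PASSWORD"],
        log_level=env.get("LOG_LEVEL", "INFO"),
    )

if __name__ == "__main__":
    sample = {"DB_HOST": "db.internal", "DB_PASSWORD": "example-only"}
    config = load_config(sample)
    print(config.db_host, config.log_level)  # never print the password
```

The image itself contains no secrets. Rotating the database password therefore means updating the value in the configuration store and restarting or replacing the instances. It does not mean rebuilding and redeploying the application.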

If you build containers, immutable deployments are a given. You do not want your dev container to differ from your QA container, which in turn differs from your prod container. You want exactly the same container in every environment: this prevents configuration drift and makes rollback simple if something goes wrong.

Containers aside, if you are just starting out, provisioning your AWS infrastructure with Terraform is a great way to learn DevOps. It is also a skill you really need.

But wait … what if you need to look at the logs to troubleshoot a problem? You do not log in to the machines to read logs. Instead, you look at your centralized logging infrastructure, which collects all of them. A detailed article on deploying the ELK stack on AWS ECS has already been published; read it to learn how to do this in practice. Again, you can turn off remote access entirely and be more secure than most people out there.
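Centralized logging works best when applications emit logs in a form a collector can parse. The Twelve-Factor App suggests treating logs as an event stream written to stdout. A shipper or the container runtime’s log driver can then forward that stream to a stack such as ELK. Here is a minimal Python sketch of that idea. It uses the standard `logging` module to write one JSON object per line. The field names are an assumption, not an ELK requirement.

```python
import json
import logging
import sys
from datetime import datetime, timezone

class JsonFormatter(logging.Formatter):
    """Render each log record as a single line of JSON."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "time": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)

def make_logger(name: str, stream=sys.stdout) -> logging.Logger:
    """Create a logger that writes JSON lines to `stream` (stdout by default)."""
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(name)
    logger.handlers[:] = [handler]  # replace, rather than stack, handlers
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger

if __name__ == "__main__":
    log = make_logger("checkout")
    log.info("order %s placed", 42)
```

The application knows nothing about where its logs end up. This is what lets you drop SSH access and still debug from the central log store.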

To summarize: our fully automated DevOps journey begins with the configuration stage, where we provision the computing resources our code needs to run. The best way to do this is with immutable deployments. If you are wondering where to start, take the Terraform + AWS combination as your starting point!

That’s all for the configuration stage. In the next article we will look at the second stage: versioning.

The next part is coming very soon…
