How to add emotion to a Russian-language personalized dialog agent

What's the problem?

There has been a lot of progress in natural language processing and dialogue research, most notably GPT, BERT, and their derivatives, which can generate text and parse and understand content at a high level. These models have been trained on huge amounts of data and can perform a variety of tasks, including translation, summarization, content creation and even dialogue. However, a number of serious challenges remain.

Among them are the lack of emotional empathy in dialog agents, inadequate and inappropriate responses in emotionally charged contexts, and the inability to take into account the user's emotional state over a long interaction. Also, modern dialog agents sometimes generate incoherent and contradictory responses in a dialog – this is caused by the lack of a consistent persona of the dialog agent.

We decided to add methods to the rupersonaagent library that help address these problems. Here's what we got:

New modules

In our latest rupersonaagent update from July 30, 2024, we added the following modules:

Module for recognizing emotions and tonality of user messages.

Module for detecting aggressive user speech.

Classification model interpretation module.

Module for augmentation and synthesis of emotional and personalized dialogue data for the Russian language.

Module for modifying the response of a personalized dialog agent based on information about its persona.

A model for increasing the emotionality of responses of a dialogue agent taking into account the context of the dialogue.

In this article we will consider some of them in detail.

Model for increasing the emotionality of dialogue agent responses taking into account the dialogue context

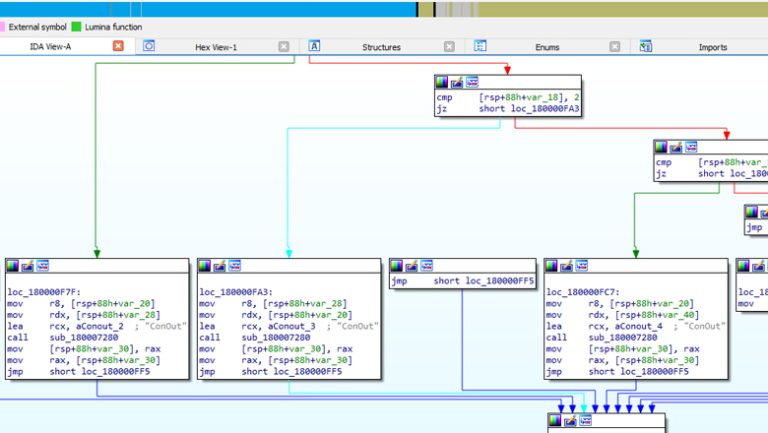

This module is based on the Fusion-in-Decoder model.

Fusion-in-Decoder models can be trained using train_reader.py and tested using test_reader.py.

All available parameters are described in options.py.

Training

train_reader.py provides the code for training.

Example:

    python train_reader.py \
        --train_data train_data.json \
        --eval_data eval_data.json \
        --base_model_path base/model/path \
        --per_gpu_batch_size 1 \
        --name my_experiment \
        --checkpoint_dir checkpoint

Tensors of different sizes lead to increased memory consumption. Tensors fed to the Encoder input have a fixed size by default, unlike the Decoder input. The size of the tensors on the Decoder side can be set using --answer_maxlength.
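
To make the memory point concrete, here is a minimal sketch of forcing target sequences to one fixed length by truncating and padding. It works on plain lists of token ids rather than real tensors; the function name pad_to_fixed_length, the pad_id value, and the sample ids are illustrative assumptions and are not taken from train_reader.py.

```python
from typing import List


def pad_to_fixed_length(batch: List[List[int]], max_length: int, pad_id: int = 0) -> List[List[int]]:
    """Truncate or right-pad every sequence so that all have exactly max_length items."""
    fixed = []
    for seq in batch:
        seq = seq[:max_length]                          # drop tokens beyond the limit
        seq = seq + [pad_id] * (max_length - len(seq))  # pad sequences that are too short
        fixed.append(seq)
    return fixed


# Toy batch of token ids with three different lengths (made-up values).
batch = [[5, 8, 2], [7], [3, 4, 9, 1, 6]]
fixed = pad_to_fixed_length(batch, max_length=4)
print(fixed)
```

With every sequence at the same length, each batch occupies a predictable amount of memory, which is the effect a fixed target length is meant to achieve.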

Testing

Model testing is done using test_reader.py

The test metrics are Exact Match and BLEU.

Example:

    python test_reader.py \
        --base_model_path base/model/path \
        --model_path checkpoint_dir/my_experiment/my_model_dir/checkpoint/best_dev \
        --eval_data eval_data.json \
        --per_gpu_batch_size 1 \
        --name my_test \
        --checkpoint_dir checkpoint
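
For readers unfamiliar with the Exact Match metric mentioned above, the following sketch shows one common way to compute it. The normalization step (lowercasing, removing ASCII punctuation, collapsing whitespace) is an assumption; test_reader.py may normalize text differently. All function names here are illustrative.

```python
import string
from typing import List


def normalize(text: str) -> str:
    """Lowercase, strip ASCII punctuation, and collapse whitespace (assumed normalization)."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    return " ".join(text.split())


def exact_match(prediction: str, answers: List[str]) -> bool:
    """True if the normalized prediction equals any normalized reference answer."""
    return any(normalize(prediction) == normalize(answer) for answer in answers)


def exact_match_score(predictions: List[str], references: List[List[str]]) -> float:
    """Fraction of predictions that exactly match one of their references."""
    hits = sum(exact_match(p, refs) for p, refs in zip(predictions, references))
    return hits / len(predictions)


# Toy predictions and references (made-up data).
preds = ["Привет, как дела?", "Мне 25 лет"]
refs = [["привет как дела"], ["Мне 30 лет"]]
score = exact_match_score(preds, refs)
print(score)
```

Exact Match is strict for open-ended dialogue, where many different replies are acceptable, which is presumably why it is reported together with BLEU.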

FiD Agent

FiD Agent is a base class that allows trained models to be used as conversational agents.

Parameters:

model_path: path to the checkpoint

context_length: number of most recent messages kept in the context (bot and user messages combined)

text_maxlength: maximum length of the text fed to the model (it includes the last utterance, one persona fact, and the dialogue history). If the text is too long, it is truncated on the right.

device: cpu or gpu

Methods:

    def set_persona(self, persona: List[str]):
        # set the list of persona facts

    def clear_context(self):
        # clear the dialogue history

    def set_context_length(self, length: int):
        # change the context_length parameter and trim the dialogue history accordingly

    def chat(self, message: str) -> str:
        # pass a single message to the model and get the reply as a string

Minimal working example:

    from rupersonaagent.fidagent import FiDAgent

    # Create the agent object, passing it the path to the trained model
    model_path = "path/to/your/model"
    agent = FiDAgent(model_path=model_path, context_length=7, text_maxlength=200, device="cuda:0")

    # Define a set of persona facts and pass it to the agent
    # ("I am a graduate student", "I am 25 years old", "Sadness")
    persona = ["Я аспирант", "Мне 25 лет", "Грусть"]
    agent.set_persona(persona)

    # Send a message ("Hi, how are you?") and receive the reply
    message = "Привет, как дела?"
    response = agent.chat(message)
    print(response)
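
The context_length behaviour described in the parameter list can be pictured as a sliding window over the dialogue. The sketch below is a hypothetical helper built on collections.deque; the class DialogueContext and its methods are not part of rupersonaagent, and FiDAgent may store its history differently.

```python
from collections import deque
from typing import Deque, List


class DialogueContext:
    """Hypothetical helper: keeps only the most recent `context_length` messages."""

    def __init__(self, context_length: int) -> None:
        self.history: Deque[str] = deque(maxlen=context_length)

    def add(self, message: str) -> None:
        # When the deque is full, appending drops the oldest message automatically.
        self.history.append(message)

    def set_context_length(self, length: int) -> None:
        # Rebuild the deque with the new limit; only the newest messages survive.
        self.history = deque(self.history, maxlen=length)

    def clear(self) -> None:
        self.history.clear()

    def as_list(self) -> List[str]:
        return list(self.history)


ctx = DialogueContext(context_length=3)
for msg in ["user: 1", "bot: 2", "user: 3", "bot: 4"]:
    ctx.add(msg)
print(ctx.as_list())
```

Bot and user messages share one window here, matching the description of context_length as counting both together.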

Data

Data format

The expected data format is a list of examples, where each example is a dictionary in the following format.

Example data:

    {
        'id': '0',
        'question': 'Last utterance',
        'target': 'Expected response',
        'answers': ['repeats target'],
        'ctxs':
            [
                {
                    "title": "Persona_fact_1",
                    "text": "Dialogue history"
                },
                {
                    "title": "Persona_fact_2",
                    "text": "Dialogue history"
                }
            ]
    }

Because a dialogue consists of successive utterances, a dialogue of N utterances has to be turned into N such examples.
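
The expansion of one dialogue into N examples might look like the sketch below. It is not the library's preprocessing code: the function build_examples is hypothetical, and the choice to keep the last utterance out of the history text is an assumption, since the format above does not say whether the history includes it.

```python
from typing import Dict, List


def build_examples(utterances: List[str], persona: List[str]) -> List[Dict]:
    """Create one example per utterance of a dialogue (illustrative sketch)."""
    examples = []
    for i, target in enumerate(utterances):
        question = utterances[i - 1] if i > 0 else ""     # utterance right before the target
        history = " ".join(utterances[:max(i - 1, 0)])    # everything before the question
        examples.append({
            "id": str(i),
            "question": question,
            "target": target,
            "answers": [target],                          # repeats the target
            # One passage per persona fact, each paired with the same history.
            "ctxs": [{"title": fact, "text": history} for fact in persona],
        })
    return examples


# Toy dialogue and persona (made-up data).
dialogue = ["Привет!", "Привет, как дела?", "Хорошо, а у тебя?"]
examples = build_examples(dialogue, persona=["Я аспирант", "Мне 25 лет"])
print(len(examples))
```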

Data processing

Data processing for the rupersonachat dataset can be recreated using persona_chat_preprocess.ipynb

Methods:

    def merge_utts(utts: List[str]) -> List[str]:
        # Merges consecutive utterances by the same user into one

    def clean_dialogue(dialogue: str) -> List[str]:
        # Cleans a dialogue and splits it into separate utterances

    def clean_persona(persona: str) -> List[str]:
        # Cleans a persona description and splits it into separate facts

    def preprocess(ds: DataFrame, bot_prefix: str, user_prefix: str) -> List[Dict]:
        # Takes a pandas DataFrame and returns a list of processed examples

Example:

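    import json
    import pandas as pd

    # Load the data as a pandas DataFrame
    df = pd.read_csv('./TlkPersonaChatRus/dialogues.tsv', sep='\t')

    # Preprocess (the signature above also lists bot_prefix and user_prefix;
    # pass them here if they have no default values)
    data = preprocess(df)

    # Save
    with open('data.json', 'w') as outfile:
        json.dump(data, outfile)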
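
The idea behind merge_utts, joining consecutive utterances by the same speaker, can be sketched as follows. The source does not say how merge_utts identifies the speaker inside its List[str] input, so this version assumes explicit (speaker, text) pairs; the function merge_consecutive is hypothetical and is not the notebook's implementation.

```python
from itertools import groupby
from typing import List, Tuple


def merge_consecutive(turns: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Merge adjacent turns by the same speaker into a single turn."""
    merged = []
    # groupby only groups neighbouring items, which is exactly what is needed here.
    for speaker, group in groupby(turns, key=lambda turn: turn[0]):
        merged.append((speaker, " ".join(text for _, text in group)))
    return merged


# Toy dialogue in which the user sends two messages in a row (made-up data).
turns = [
    ("user", "Привет!"),
    ("user", "Как дела?"),
    ("bot", "Привет, хорошо."),
    ("user", "Рад слышать."),
]
merged = merge_consecutive(turns)
print(merged)
```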

Module for modifying the response of a personalized dialog agent based on information about its persona

This module is based on the RAG (retrieval-augmented generation) method.

The retriever model for RAG can be trained using train_biencoder.py and tested with test_biencoder.py.

Training

train_biencoder.py provides the code for training.

Example:

    python src/train_biencoder.py \
        --data_path data/toloka_data \
        --model_name "cointegrated/rubert-tiny2" \
        --max_epochs 3 \
        --devices 1 \
        --save_path "bi_encoder/biencoder_checkpoint.ckpt"

Testing

Model testing is done using test_biencoder.py. Test metrics are Recall@k and MRR.

Example:

    python src/test_biencoder.py \
        --model_name \
        --checkpoint_dir bi_encoder/biencoder_checkpoint.ckpt \
        --data_path data/toloka_data
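
The two retrieval metrics named above, Recall@k and MRR, have simple definitions that the following sketch makes explicit. It assumes that, for each query, the 1-based rank of the correct candidate is already known; how test_biencoder.py obtains those ranks is not shown in the source, and the function names are illustrative.

```python
from typing import List


def recall_at_k(ranks: List[int], k: int) -> float:
    """Share of queries whose correct candidate is ranked within the top k (1-based ranks)."""
    return sum(rank <= k for rank in ranks) / len(ranks)


def mean_reciprocal_rank(ranks: List[int]) -> float:
    """Average of 1 / rank of the correct candidate over all queries."""
    return sum(1.0 / rank for rank in ranks) / len(ranks)


# Toy ranks of the correct candidate for four queries (made-up values).
ranks = [1, 3, 2, 10]
print(recall_at_k(ranks, k=1))
print(recall_at_k(ranks, k=5))
print(mean_reciprocal_rank(ranks))
```

Recall@k only checks whether the right turn made it into the top k, while MRR also rewards placing it closer to the top.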

Data

Data format

The expected data format is two columns, query and candidate, where query contains the dialogue history and candidate contains the next turn in the dialogue.

Example data:

    DatasetDict({
        train: Dataset({
            features: ['query', 'candidate'],
            num_rows: 209297
        })
        val: Dataset({
            features: ['query', 'candidate'],
            num_rows: 1000
        })
        test: Dataset({
            features: ['query', 'candidate'],
            num_rows: 1000
        })
    })

    {
        'query': 'dialog_history',
        'candidate': 'next_turn'
    }

    {
        'query': [
            '<p-2> Привет) расскажи о себе',
            '<p-2> Привет) расскажи о себе <p-1> Привет) под вкусный кофеек настроение поболтать появилось',
            '<p-2> Привет) расскажи о себе <p-1> Привет) под вкусный кофеек настроение поболтать появилось <p-2> Что читаешь? Мне нравится классика'
        ],
        'candidate': [
            '<p-1> Привет) под вкусный кофеек настроение поболтать появилось',
            '<p-2> Что читаешь? Мне нравится классика',
            '<p-2> Я тоже люблю пообщаться'
        ]
    }
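
The cumulative structure of the query column in the example above can be reproduced with a few lines of code. This is a sketch under the assumption that turns are already tagged with <p-1> and <p-2> and are joined with single spaces; the function build_pairs is hypothetical and is not taken from data_processing.ipynb.

```python
from typing import Dict, List


def build_pairs(turns: List[str]) -> Dict[str, List[str]]:
    """Turn a list of speaker-tagged turns into cumulative (query, candidate) pairs."""
    queries, candidates = [], []
    for i in range(1, len(turns)):
        queries.append(" ".join(turns[:i]))  # full history before turn i
        candidates.append(turns[i])          # the turn the retriever should rank first
    return {"query": queries, "candidate": candidates}


turns = [
    "<p-2> Привет) расскажи о себе",
    "<p-1> Привет) под вкусный кофеек настроение поболтать появилось",
    "<p-2> Что читаешь? Мне нравится классика",
]
pairs = build_pairs(turns)
print(pairs["query"][1])
```

A dialogue of N turns yields N - 1 pairs this way, because the first turn has no history to serve as a query.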

The experiments used the Toloka Persona Chat Rus dataset. The model used for training was rubert-tiny2, and the LLM was saiga_mistral_7b_gguf.

Preliminary data processing was carried out using data_processing.ipynb

Persona facts

The persona facts were loaded into the FAISS vector database using data_storage.py
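
Conceptually, a vector store answers one question: which stored facts are closest to a query embedding? The sketch below does this by brute force in pure Python, which is the operation a FAISS index performs far more efficiently. It does not use FAISS or data_storage.py; the three-dimensional vectors are made up for illustration, the third fact is a toy addition, and the use of cosine similarity is an assumption about the metric.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def top_k(query_vec: Sequence[float], fact_vecs: List[Sequence[float]],
          facts: List[str], k: int) -> List[Tuple[str, float]]:
    """Return the k facts most similar to the query, best first."""
    scored = [(fact, cosine(query_vec, vec)) for fact, vec in zip(facts, fact_vecs)]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Toy persona facts with made-up embeddings (a real system would use an encoder).
facts = ["Я аспирант", "Мне 25 лет", "Я люблю кофе"]
fact_vecs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.9, 0.1, 0.0]]
result = top_k([1.0, 0.0, 0.0], fact_vecs, facts, k=2)
print(result)
```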

Example of interaction with a dialog agent

You can chat with the model using demo.py

gradio demo.py

Running the command opens a link to an interface for chatting with the agent. To set the persona, change the persona variable in the ChatWrapper() class in demo.py.

Module for detecting aggressive user speech

Methods

train_ngram_attention.py: training and validation of the NgramAttention model;

train_ddp_ngram_attention.py: training the NgramAttention model on GPUs in a distributed setup. Recommended, since training is computationally intensive;

train_bert.py: BERT model training;

fusion.py: combines BERT with NgramAttention for better quality.

Data format

The data should be provided as a CSV file with two columns, comment and label, stored in the out_data directory. An example dataset can be found in the same directory.
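
A quick way to check that a file follows this two-column layout is to read it with the standard csv module, as in the sketch below. The in-memory sample, the integer labels, and the function load_labeled_comments are assumptions made for illustration; the actual label values used by the hate_speech scripts are not specified here.

```python
import csv
import io
from typing import List, Tuple


def load_labeled_comments(handle) -> List[Tuple[str, int]]:
    """Read (comment, label) rows and fail early if the header is not as expected."""
    reader = csv.DictReader(handle)
    if reader.fieldnames != ["comment", "label"]:
        raise ValueError(f"unexpected columns: {reader.fieldnames}")
    # Labels are assumed to be integers in this sketch.
    return [(row["comment"], int(row["label"])) for row in reader]


# In-memory stand-in for a CSV file (made-up rows).
sample = io.StringIO("comment,label\nHave a nice day,0\nYou are awful,1\n")
rows = load_labeled_comments(sample)
print(rows)
```

For a real file, pass an open file handle instead of the StringIO object, for example one created with open(path, newline="", encoding="utf-8").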

Usage

Training NgramAttention model on CPU:

python -m hate_speech.train_ngram_attention --mode train

Training NgramAttention model on GPU:

python -m hate_speech.train_ddp_ngram_attention

The learning rate, batch size and number of epochs can be specified using the options --learning_rate, --batch_size and --total_epochs. All script parameters can be found in train_ngram_attention.py, or in train_ddp_ngram_attention.py in the case of distributed training.

Evaluation of the NgramAttention model:

python -m hate_speech.train_ngram_attention --mode test

Training and evaluation of the BERT model:

python -m hate_speech.train_bert

Training and evaluation of the combined model:

python -m hate_speech.fusion

Using the new modules of the rupersonaagent library, we were able to develop a personalized emotional dialog agent by integrating a number of modules aimed at recognizing and interpreting the emotional coloring of written speech. The modules implemented in the library also allowed our dialog agent to classify emotions and adapt its responses to the user's emotional state and the specified emotion. This helped make the interaction deeper and more personalized.