How I wrote asynchronous web requests in Python, or why the provider thinks I’m a bandit

The other day at work I had to write a console utility that talks directly to the API of our cloud service and pulls some information from it. The details of what and why are beyond the scope of this story. The fundamental issue here is different: speed. Speed really matters (we are talking about tens to hundreds of requests), because waiting is not fun.

Here I want to share my research on the topic of requests: how to do it well and how not to. With code examples, of course. And also to tell you how stupid I was.

Let’s start with the classics

Sequential synchronous requests. We will use the well-known requests library, plus tqdm for nice console output. As a toy example, I picked the first public API I came across: https://catfact.ninja/. The quality metric will be RPS (requests per second). The higher, the better.

    import time

    import requests
    from tqdm import tqdm

    URL = 'https://catfact.ninja/'


    class Api:
        def __init__(self, url: str):
            self.url = url

        def http_get(self, path: str, times: int):
            content = []
            for _ in tqdm(range(times), desc="Fetching data...", colour="GREEN"):
                response = requests.get(self.url + path)
                content.append(response.json())
            return content


    if __name__ == '__main__':
        N = 10
        api = Api(URL)

        start_timestamp = time.time()
        print(api.http_get(path="fact/", times=N))
        task_time = round(time.time() - start_timestamp, 2)
        rps = round(N / task_time, 1)
        print(
            f"| Requests: {N}; Total time: {task_time} s; RPS: {rps}. |\n"
        )

We get the following output in the terminal:

Fetching data...: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 10/10 [00:06<00:00, 1.52it/s]

[{'fact': 'Despite imagery of cats happily drinking milk from saucers, studies indicate that cats are actually lactose intolerant and should avoid it entirely.', 'length': 148}, {'fact': 'The smallest pedigreed cat is a Singapura, which can weigh just 4 lbs (1.8 kg), or about five large cans of cat food. The largest pedigreed cats are Maine Coon cats, which can weigh 25 lbs (11.3 kg), or nearly twice as much as an average cat weighs.', 'length': 249}, {'fact': 'A cat has 230 bones in its body. A human has 206. A cat has no collarbone, so it can fit through any opening the size of its head.', 'length': 130}, {'fact': "Cats' hearing is much more sensitive than humans and dogs.", 'length': 58}, {'fact': 'The first formal cat show was held in England in 1871; in America, in 1895.', 'length': 75}, {'fact': 'In contrast to dogs, cats have not undergone major changes during their domestication process.', 'length': 94}, {'fact': 'Ginger tabby cats can have freckles around their mouths and on their eyelids!', 'length': 77}, {'fact': 'Cats bury their feces to cover their trails from predators.', 'length': 59}, {'fact': 'While it is commonly thought that the ancient Egyptians were the first to domesticate cats, the oldest known pet cat was recently found in a 9,500-year-old grave on the Mediterranean island of Cyprus. This grave predates early Egyptian art depicting cats by 4,000 years or more.', 'length': 278}, {'fact': 'Relative to its body size, the clouded leopard has the biggest canines of all animals’ canines. Its dagger-like teeth can be as long as 1.8 inches (4.5 cm).', 'length': 156}]

| Requests: 10; Total time: 6.61 s; RPS: 1.5. |

An RPS of 1.5. Very sad. Admittedly, my home internet is not the fastest right now, but there is nothing more to add here.

What can be optimized now? Answer: use requests.Session

If multiple requests are made to the same host, the underlying TCP connection will be reused, resulting in a significant performance increase. (paraphrased from the requests documentation)

Using the session

    def http_get_with_session(self, path: str, times: int):
        content = []
        # One Session for all requests, so the TCP connection is reused.
        with requests.Session() as session:
            for _ in tqdm(range(times), desc="Fetching data...", colour="GREEN"):
                response = session.get(self.url + path)
                content.append(response.json())
        return content

After slightly changing the method and calling it, we see the following:

Fetching data...: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 10/10 [00:02<00:00, 3.88it/s]

[{'fact': 'Cats purr at the same frequency as an idling diesel engine, about 26 cycles per second.', 'length': 87}, {'fact': 'Cats eat grass to aid their digestion and to help them get rid of any fur in their stomachs.', 'length': 92}, {'fact': 'A cat’s heart beats nearly twice as fast as a human heart, at 110 to 140 beats a minute.', 'length': 88}, {'fact': 'The world’s rarest coffee, Kopi Luwak, comes from Indonesia where a wildcat known as the luwak lives. The cat eats coffee berries and the coffee beans inside pass through the stomach. The beans are harvested from the cat’s dung heaps and then cleaned and roasted. Kopi Luwak sells for about $500 for a 450 g (1 lb) bag.', 'length': 319}, {'fact': 'There are more than 500 million domestic cats in the world, with approximately 40 recognized breeds.', 'length': 100}, {'fact': 'Cats sleep 16 to 18 hours per day. When cats are asleep, they are still alert to incoming stimuli. If you poke the tail of a sleeping cat, it will respond accordingly.', 'length': 167}, {'fact': 'Since cats are so good at hiding illness, even a single instance of a symptom should be taken very seriously.', 'length': 109}, {'fact': 'At 4 weeks, it is important to play with kittens so that they do not develope a fear of people.', 'length': 95}, {'fact': 'The technical term for a cat’s hairball is a “bezoar.”', 'length': 54}, {'fact': 'Baking chocolate is the most dangerous chocolate to your cat.', 'length': 61}]

| Requests: 10; Total time: 2.58 s; RPS: 3.9. |

Almost 4 RPS. Compared to 1.5, that is already a breakthrough.

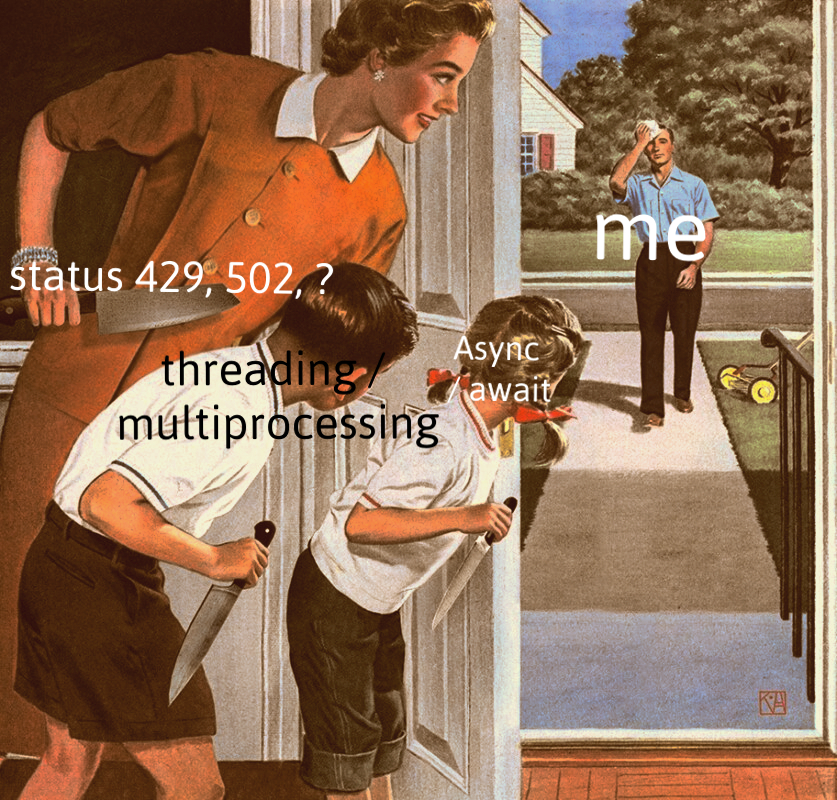

But it’s no secret that a common way to cut I/O wait time is to write asynchronous or multi-threaded programs. This is exactly such a case: while waiting for a response from the server, our program does nothing, although it could send another request, and then another, and so on. Let’s try an asynchronous approach.

async / await

For the convenience of calls, we will make a wrapper function:

    # Additional imports needed from this point on.
    import asyncio
    import time

    import aiohttp


    def run_case(func, path, times):
        start_timestamp = time.time()
        asyncio.run(func(path, times))
        task_time = round(time.time() - start_timestamp, 2)
        rps = round(times / task_time, 1)
        print(
            f"| Requests: {times}; Total time: {task_time} s; RPS: {rps}. |\n"
        )

And the actual implementation of the method itself (do not forget to install aiohttp: the regular requests library is blocking and does not fit the asynchronous paradigm):

    async def async_http_get(self, path: str, times: int):
        async with aiohttp.ClientSession() as session:
            content = []
            for _ in tqdm(range(times), desc="Async fetching data...", colour="GREEN"):
                response = await session.get(url=self.url + path)
                content.append(await response.text(encoding='UTF-8'))
            return content


    if __name__ == '__main__':
        N = 50
        api = Api(URL)
        run_case(api.async_http_get, path="fact/", times=N)

We see:

Async fetching data...: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 50/50 [00:12<00:00, 3.99it/s]

| Requests: 50; Total time: 12.55 s; RPS: 4.0. |

Surprisingly, there is not much difference from the previous case. At first I blamed the cost of passing control back and forth between coroutines (I have heard Python gets scolded for that a lot), but the real reason is simpler: every request is awaited inside the loop, so the next one is not sent until the previous one has been answered.

In words, this approach can be explained as follows.

– In the loop, a coroutine is created that sends a request.

– At the await, control is handed back to the event loop, but the event loop has no other task to run, so it simply waits for the response.

– Only when the response arrives does the “for loop” move on to the next iteration and create the next coroutine.

– And so on.

As a result, the code is formally asynchronous, but the requests still go out one after another, not “at once”. A fundamentally different approach will help here: asyncio.gather.

Using asyncio.gather

Gather, trite as it sounds, simply means “to collect” in English. The gather function takes a collection of coroutines and runs them all at once (conditionally, of course). That is, unlike the previous case, we first create all the coroutines in a loop and only then run them.

It was:

[created a coroutine] -> [ran the coroutine] -> [created a coroutine] -> [ran the coroutine] ->

[created a coroutine] -> [ran the coroutine] -> [created a coroutine] -> [ran the coroutine]

And it became:

[created a coroutine] -> [created a coroutine] -> [created a coroutine] -> [created a coroutine] ->

[ran the coroutine] -> [ran the coroutine] -> [ran the coroutine] -> [ran the coroutine]
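The difference between the two schedules can be reproduced without touching the network. Below is a minimal sketch, not the utility's real code: fake_request, one_by_one, all_at_once, timed and the 0.1 s DELAY are all made-up names and values, and asyncio.sleep stands in for the HTTP call.

```python
import asyncio
import time

DELAY = 0.1  # assumed latency of one simulated request, in seconds


async def fake_request(i: int) -> int:
    # Hypothetical stand-in for session.get(): sleeps instead of using the network.
    await asyncio.sleep(DELAY)
    return i


async def one_by_one(times: int) -> list[int]:
    # Each await must finish before the next coroutine is even created.
    return [await fake_request(i) for i in range(times)]


async def all_at_once(times: int) -> list[int]:
    # Create every coroutine first, then let gather run them concurrently.
    return await asyncio.gather(*(fake_request(i) for i in range(times)))


def timed(coro) -> tuple[list[int], float]:
    # Run a coroutine to completion and report how long it took.
    start = time.perf_counter()
    result = asyncio.run(coro)
    return result, time.perf_counter() - start


if __name__ == "__main__":
    for func in (one_by_one, all_at_once):
        result, elapsed = timed(func(10))
        print(f"{func.__name__}: {len(result)} results in {elapsed:.2f} s")
```

The first variant should take roughly times × DELAY, the second roughly one DELAY, regardless of how many simulated requests there are.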

    async def async_gather_http_get(self, path: str, times: int):
        async with aiohttp.ClientSession() as session:
            tasks = []
            for _ in tqdm(range(times), desc="Async gather fetching data...", colour="GREEN"):
                tasks.append(asyncio.create_task(session.get(self.url + path)))
            responses = await asyncio.gather(*tasks)
            return [await r.json() for r in responses]


    if __name__ == '__main__':
        N = 50
        api = Api(URL)
        run_case(api.async_gather_http_get, path="fact/", times=N)

And we get… we get… we get nothing. The cursor continues to blink meaningfully in the terminal window. “It’s not working,” thought Stirlitz.

After some painful debugging and trying to figure out why my code wasn’t working, I found the culprit: my VPN. Its nodes sit somewhere behind Cloudflare, and Cloudflare does not encourage such behavior, deciding that I am a bot. A normal person does not make that many requests per second, so my requests … get lost somewhere in the depths of the Internet. They will not be answered. Never. The coroutines just never finish.
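One way to avoid staring at a blinking cursor is to put an explicit timeout on every request. The sketch below is an assumption-laden illustration rather than what I ran back then: hanging_request and fetch_with_timeout are made-up names, and asyncio.sleep imitates a request that never gets a response. With aiohttp, a similar effect can be had by passing an aiohttp.ClientTimeout to ClientSession; the standard-library asyncio.wait_for is used here so the example runs without a network.

```python
import asyncio


async def hanging_request() -> str:
    # Hypothetical stand-in for a request whose response never arrives.
    await asyncio.sleep(3600)
    return "response"


async def fetch_with_timeout(timeout: float):
    # Give up after `timeout` seconds instead of waiting forever.
    try:
        return await asyncio.wait_for(hanging_request(), timeout=timeout)
    except asyncio.TimeoutError:
        return None  # the caller can log the failure or retry


if __name__ == "__main__":
    print(asyncio.run(fetch_with_timeout(0.5)))
```

A None result tells the caller that the request was abandoned, which is far easier to debug than a program that never returns.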

Okay, with the root cause understood, we run the code again:

Async gather fetching data...: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 50/50 [00:00<00:00, 106834.03it/s]

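| Requests: 50; Total time: 2.38 s; RPS: 21.0. |

The numbers have grown, cool: 21 is not 1.5, that’s for sure. (The progress bar fills instantly because the loop it wraps only creates the tasks; the responses are awaited afterwards.)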

However, there must be a limit somewhere, so let’s increase N (the number of requests) to 200.

| Requests: 200; Total time: 2.97 s; RPS: 67.3. |

What is going on, can that be right? Not really. If we look carefully at what the server actually answers, we will notice that most of the responses are {'message': 'Rate Limit Exceeded', 'code': 429}
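To keep rejected requests from inflating the RPS figure, the decoded responses can be sorted into real answers and rate-limit errors. This is a small sketch that relies only on the payload shape shown above; split_rate_limited is a made-up helper name, and checking the HTTP status code of each response would be an equally valid approach.

```python
def split_rate_limited(payloads: list[dict]) -> tuple[list[dict], list[dict]]:
    # Separate useful payloads from "Rate Limit Exceeded" replies (code 429).
    ok, limited = [], []
    for payload in payloads:
        if payload.get("code") == 429:
            limited.append(payload)
        else:
            ok.append(payload)
    return ok, limited


if __name__ == "__main__":
    sample = [
        {"fact": "Cats sleep 16 to 18 hours per day.", "length": 34},
        {"message": "Rate Limit Exceeded", "code": 429},
    ]
    ok, limited = split_rate_limited(sample)
    print(f"useful: {len(ok)}, rejected: {len(limited)}")
```

An honest RPS would then be the number of useful responses divided by the total time.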

The limit I found experimentally for this service is about 60 responses at a time; it does not digest the rest. So if you are hitting a service that you don’t want to overload, let alone take down, approach this thoughtfully and stay within its limits.
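One common way to stay within such limits is to cap how many requests are in flight at the same time with asyncio.Semaphore. The sketch below is illustrative only: limited_request, fetch_all and the LIMIT of 5 are assumptions, asyncio.sleep replaces the real HTTP call, and a semaphore caps concurrency rather than requests per second, so it is only a rough fit for a server-side rate limit.

```python
import asyncio

LIMIT = 5  # assumed cap on simultaneous requests; tune it to the service


async def limited_request(i: int, semaphore: asyncio.Semaphore, stats: dict) -> int:
    # Only LIMIT coroutines may be inside this block at any moment.
    async with semaphore:
        stats["active"] += 1
        stats["peak"] = max(stats["peak"], stats["active"])
        await asyncio.sleep(0.01)  # hypothetical stand-in for session.get()
        stats["active"] -= 1
        return i


async def fetch_all(times: int, limit: int = LIMIT) -> tuple[list[int], int]:
    semaphore = asyncio.Semaphore(limit)
    stats = {"active": 0, "peak": 0}  # tracks the highest observed concurrency
    results = await asyncio.gather(
        *(limited_request(i, semaphore, stats) for i in range(times))
    )
    return results, stats["peak"]


if __name__ == "__main__":
    results, peak = asyncio.run(fetch_all(20))
    print(f"{len(results)} results, peak concurrency {peak}")
```

All coroutines are still handed to gather at once; the semaphore just makes the extra ones wait their turn.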

What’s up with threading?

There is not much to gain here: a practical experiment showed that threading gives roughly the same results (plus or minus) as the asynchronous code from point 3.
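For reference, a thread-based variant could look roughly like the sketch below. It is not the code from my experiment: fake_request, threaded_fetch, the 0.1 s DELAY and the pool size of 10 are assumptions, and time.sleep stands in for a blocking requests.get call.

```python
import time
from concurrent.futures import ThreadPoolExecutor

DELAY = 0.1  # assumed latency of one simulated request, in seconds


def fake_request(i: int) -> int:
    # Hypothetical blocking stand-in for requests.get().
    time.sleep(DELAY)
    return i


def threaded_fetch(times: int, workers: int = 10) -> list[int]:
    # Each worker thread blocks on its own request; map keeps the input order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(fake_request, range(times)))


if __name__ == "__main__":
    start = time.perf_counter()
    data = threaded_fetch(10)
    print(f"{len(data)} results in {time.perf_counter() - start:.2f} s")
```

With as many workers as requests, the total time should be close to a single DELAY, because the threads spend almost all of their time waiting.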

multiprocessing

I won’t lie, I just watched someone on YouTube do something similar. The results are much worse than with the previous methods. Besides, writing such code is violence against your psyche, and I am protecting mine.

Let me summarize:

Cloudflare went down the other day. Sorry, that was because of me, I won’t do it again.

If you want to be a request champion, use asyncio.gather, but with carefully chosen limits. And if you are hitting many different hosts rather than a single one, don’t hesitate at all.