ETL process for migrating procedures from mssql to postgreSQL. Part 1. Introduction

I would like to share the experience of transforming one code representation into another using the ETL process and a graph database using an example that is relevant today.

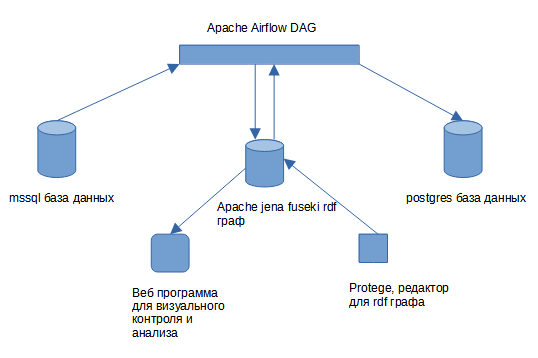

Briefly there is a basis on mssql the server is stored procedures. There is a base on postgres. There is an ETL process on Apache Air Flow. We start the process, at the end, procedures and data appear in the postgres database.

I will say right away this approach is not a complete machine that will transfer any mssql database to postgres. This is an attempt to systematize such a transition, break it down into manageable small parts that are typed and over which transformations are performed with the ability to control the result. The result is procedures or functions on postgres.

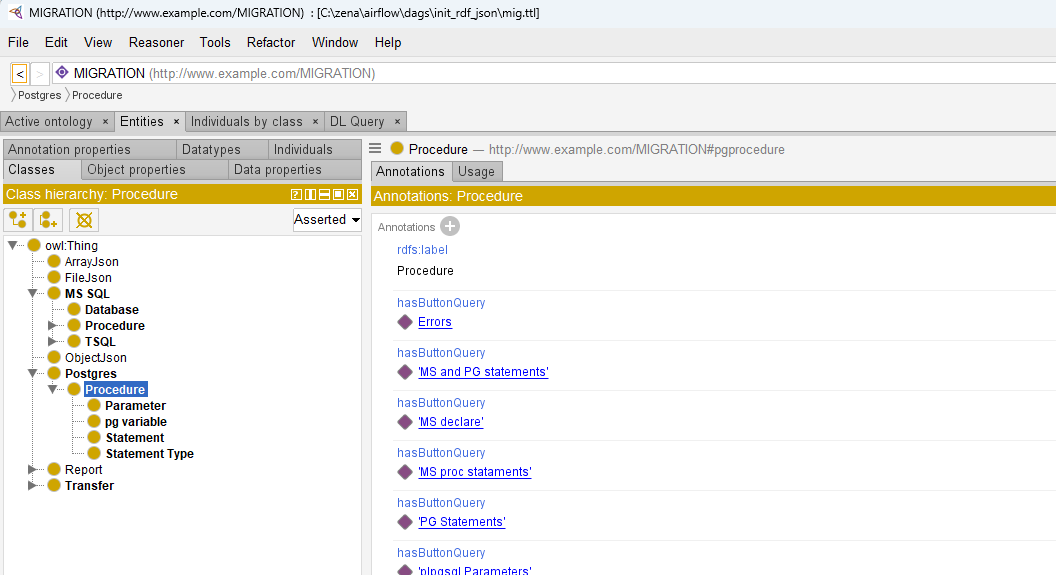

As part of the ETL process for the migration of procedures, an RDF graph based on Apache Jena Fuseki is used. The general approach – all information about the procedures is placed in a graph, classified, links are added between the objects of interest to us. Then graph growth begins by executing python modules, with the ultimate goal of building “create procedure” command that is sent to postgres for execution at the last stage of the ETL process.

For analysis and visual control, a web program is used, where you can quickly add reports and link them to the classification tree of the graph using buttons. Without this program, it is extremely difficult to navigate the information in the graph.

The reports themselves areselect” queries executed in the context of the parent object displayed as a button. To edit the classification tree and add reports, a standard tool for working with RDF graphs Protege is used.

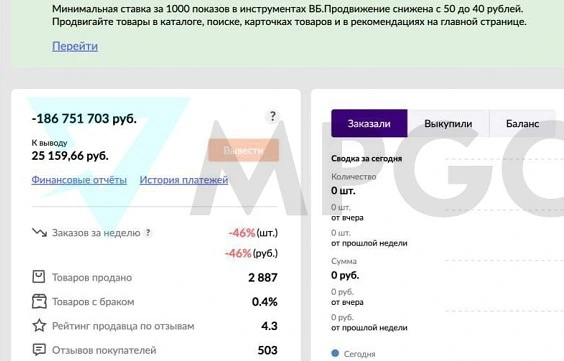

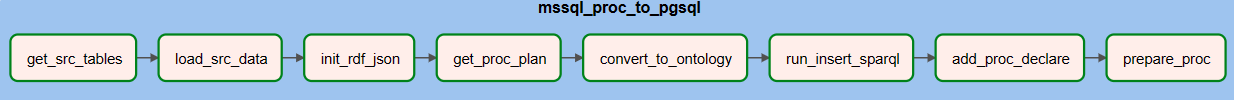

The first two steps of the ETL process (“get_src_tables”, “load_src_data”) transfer data is a standard pandas python module, transfer is not perfect but it creates a test dataset. The rest of the steps are related to procedure migration.

The basis of the transfer is the data from the “information_schema” mssql server and the plans for the execution of stored procedures (“execution plan”). The “get_proc_plan” step, using the procedure signature, calls them for execution and saves the xml file with the plan. Then all this is exported to a graph for analysis and migration.

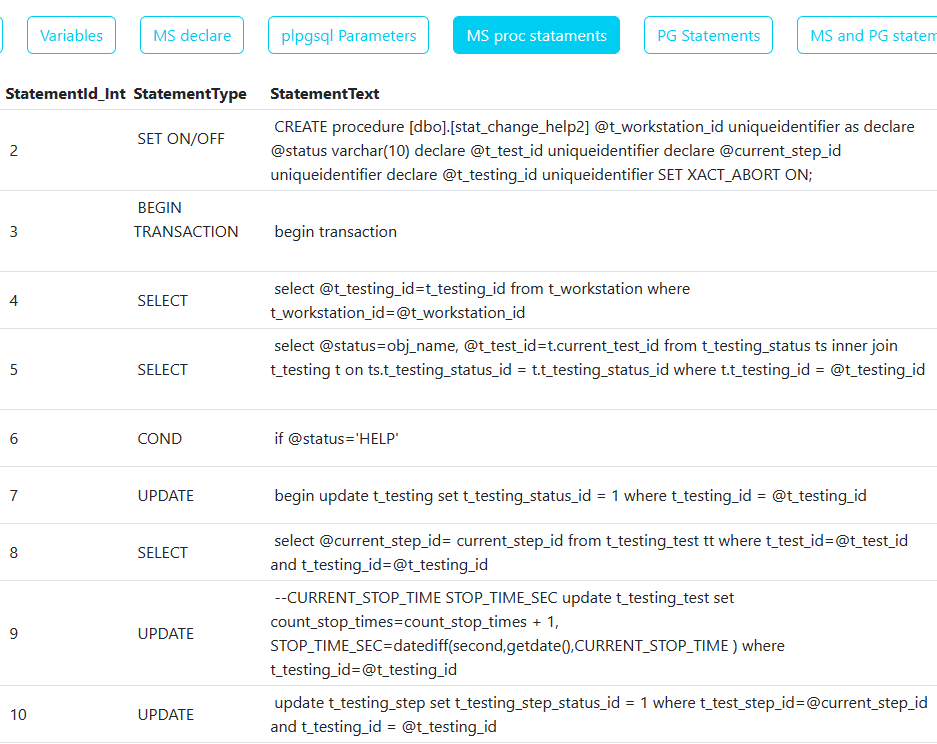

It is the breakdown of the procedure into parts used in the execution plan that is the basis of migration. The parts of the plan are typified, for example in the image above there is “Select”, “COND” or “Update”. Those. now we have not one large text of the procedure, but small pieces, which we will analyze and transform.

At the database level, you can make an inventory by execution plan types.

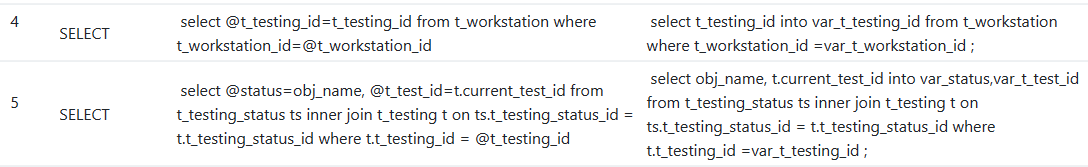

To create postgres procedures, the same approach is taken, each part of the mssql execution plan will correspond to a postgres part.

The principle of migration is as follows: A specific type is taken, for example “Select”, all use cases are analyzed and typical cases are highlighted. For example, in the database used for the example, I found for 4 types of conversion for the “select” type.

For each type of conversion, a python handler is written using the sqlparse module, which, inside each procedure for parts of the “Select” type, will try to perform the corresponding conversion if this case is found.

As mentioned at the beginning, there is control over the execution of each launch of the ETL process with error recording in the same graph, where you can generally track which procedures were not created and what errors were at the same time.

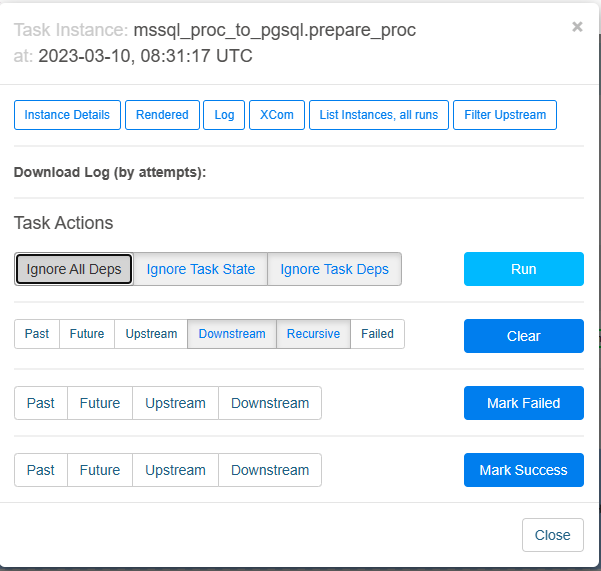

Usually during development, I change the python module related to the conversion of a particular type of execution plan and run the last step of the ETL process called “prepare_proc” and after it is completed, I check the error reports, compare with previous runs.

In this publication, I described the framework process for migrating procedures from mssql to postgres. In general, it can be taken as the basis for your transfer and the main thing to do is to analyze your procedures using a web program, identify typical conversion cases and then write parsers in python sqlparse.

Video on youtube (put HD quality if the picture is not clear)

Code in git.

dag

Web program (Angular)

In further parts I plan to describe how to install it all, add reports to the web program and how to write your own parsers.

Thank you for attention.