Developing our first project on Microsoft Dynamics 365 for Finance and Operations

I will talk about the approaches we used and the mistakes we made, and share the knowledge and experience we gained. This article may be of interest to those who are starting a project in D365 or are only thinking about it.

This is a loose transcript of my talk at the mitc Microsoft Dynamics 365 & Power Platform Meetup.

Project Goal and Technical Basics

Our German subsidiary purchases goods and sells them to a Russian legal entity. Previously we used the Tsenit system, which let us keep records only at the level of financial data and could not handle inventory and logistics tasks. We covered those with additional tools, and the data was stored in several databases at once. All of this hurt the speed and reliability of the system as a whole.

We wanted the accounting system to help the German branch submit reports, pay taxes and pass audits. The previous ERP barely handled these tasks, so we decided to develop and launch our own accounting system. Our ERP was supposed to combine the branch's finance, accounting and logistics in a single system. As the core software we chose Microsoft Dynamics 365, formerly Dynamics AX and also known as Axapta.

The business component is described in the article “Technology, Outsourcing and Mentality”. Here we will talk about technical implementation. So, we needed to automate several business processes:

- Purchase of goods from suppliers;

- Sale to a Russian legal entity;

- Integration between D365 and Ax2012, the accounting system of a Russian legal entity;

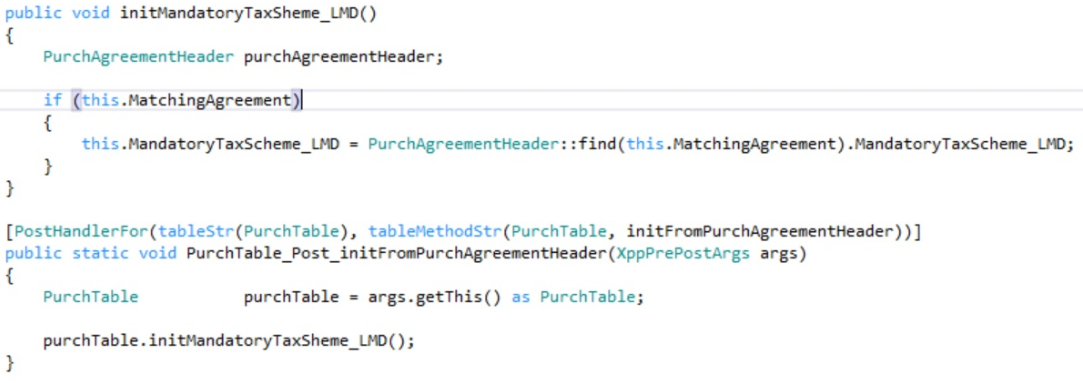

- Automation of the selection of tax schemes;

- Reporting in accordance with German law.

For this project we chose the Microsoft Dynamics 365 cloud solution, since the German office had neither the IT infrastructure to deploy the application nor the people to be responsible for it. For small remote branches the SaaS model is optimal: it gives you all the necessary software and development environments to start implementation immediately after signing the contract with the provider.

We had a tight schedule: all development had to be completed in 3 months. Because inventory accounting under the old system was kept in spreadsheets, migrating the entire set of historical data in the middle of a fiscal year would have been an impossible task. At the beginning of a reporting period, however, it is enough to migrate only balances. So we either had to go live on January 1, 2019, or postpone the launch for another year.

Our team had no D365 development experience. Despite all this, we planned to start the project as quickly as possible. Below I describe each stage of development separately and go through each iteration in detail: what experience we gained and what mistakes we made.

First iteration, modifications on application version 7.3

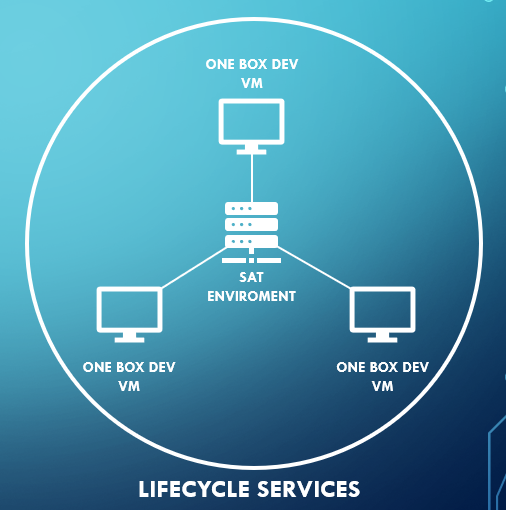

To get down to business quickly, we started with a simple application architecture. It consisted of development environments, so-called DevBox 1-tier environments. In a 1-tier environment all components are installed on the same server or virtual machine: Application Object Server (AOS), database, Dynamics 365 Retail and Management Reporter.

For testing, we decided to use the SAT environment – Standard Acceptance Test 2-tier environment.

A 2-tier environment is a multi-box environment whose components are installed across several cloud services and which usually includes more than one Application Object Server (AOS). It is as close as possible to a production environment, so we decided to test on it.

We deployed the first development environments on our existing on-premises infrastructure, but its capacity was not enough as the project grew. So when two more developers joined the project, we quickly deployed DevBoxes for them in the cloud.

Our cloud environments were managed through the Lifecycle Services (LCS) portal.

With the environments in place, the team started developing. We set up the development environments in Visual Studio and connected them to Azure DevOps version control, having first created a branch for the code. Next, we worked out an approach to developing changes and transferring them to the SAT environment.

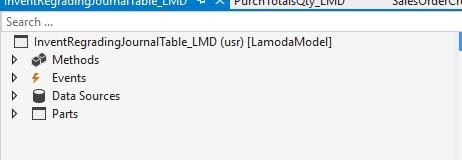

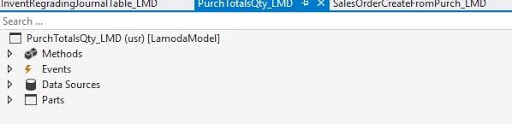

There are no layers in the D365 architecture; all standard code is organized into models. Modifications were transferred to the SAT environment through the LCS portal as a package containing a compiled model.

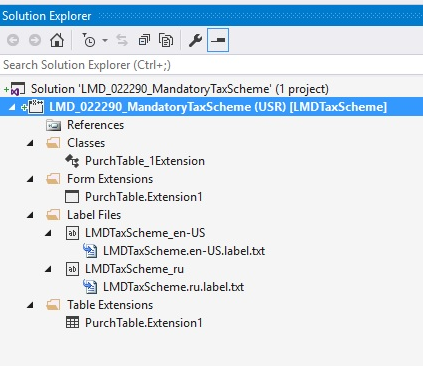

A model is the smallest unit for transferring changes to production, so we decided on one model per modification, in order to move our modifications to testing and then to production independently of each other.

To begin with, we implemented the simplest and most common modification: adding a new field to a standard table, initializing it when a record is created, and displaying it on the standard form.

Even such a simple project involves new types of objects. We made a table extension to add the new field to the standard table. To show the field on the standard form, we created another new type of object, a form extension. And to initialize the field, we created a class that extends the table's methods, which let us initialize the field when a record is created.

This simple modification showed us one of the basic principles of D365: standard objects are extended, not changed.

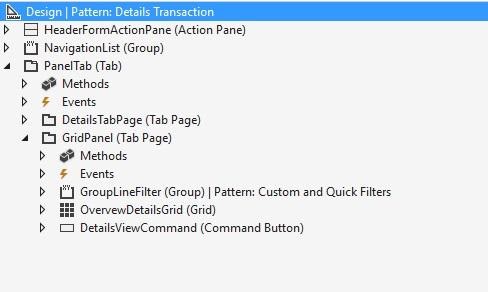

The next modification was a new form. Now, when creating a form, we had to specify its pattern. A pattern is a template that fully defines the layout structure of a form. Until the form completely reproduces the structure laid down in the template, it will not compile. The template of a finished form cannot be changed, so before starting development we thought through in advance how the form would look.

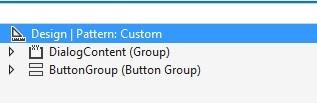

We still had the option of managing the form design ourselves. If we set the pattern to Custom, we were fully responsible for the design of the form: which objects were on it and how they were nested.

Conclusions after the first iteration

1. Do not change standard objects; only extend them.

2. Add a reference to a model if you use its objects in another model. This is one of the differences between models in D365 and in previous versions: an object exists in only one model.

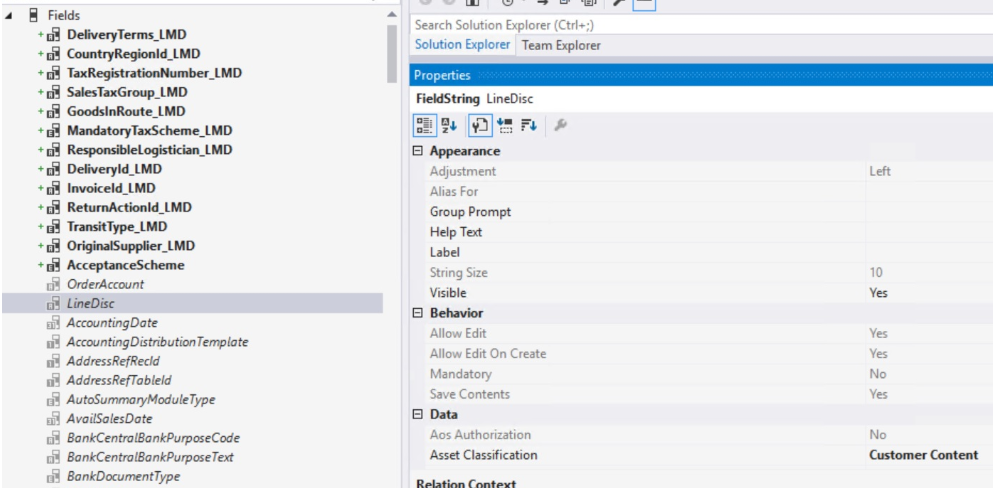

3. There are limits on changing the properties of standard objects. Not all properties of standard fields can be changed in extensions. For example, for the LineDisc field in an extension of the PurchTable table we can control the field's visibility and label, but properties such as mandatory and editable cannot be changed.

4. There are no jobs in D365, everything is done through classes.

5. We split the models too finely, and it turned out that our principle of “one modification = one model” did not work.

Second iteration and transition to one model

At the beginning of the second iteration, we had two models that referenced each other, so we could no longer transfer these modifications independently. We therefore decided to work in one new model and move all existing modifications into it.
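The situation with mutually referencing models can be illustrated with a small dependency check. This is only a sketch in Python, not part of our tooling or of D365: the model names and the `references` mapping are hypothetical, and in a real project the references would come from the model descriptors.

```python
def find_cycle(references):
    """Return one cycle of model names as a list, or None if there is none.

    `references` maps a model name to the list of models it references.
    Models that appear only as targets (for example standard models) are
    treated as having no outgoing references.
    """
    WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on the current path, finished
    state = {}
    path = []

    def visit(model):
        state[model] = GRAY
        path.append(model)
        for dep in references.get(model, ()):
            if state.get(dep, WHITE) == GRAY:
                # dep is already on the current path: we closed a loop.
                return path[path.index(dep):] + [dep]
            if state.get(dep, WHITE) == WHITE:
                cycle = visit(dep)
                if cycle:
                    return cycle
        path.pop()
        state[model] = BLACK
        return None

    for model in references:
        if state.get(model, WHITE) == WHITE:
            cycle = visit(model)
            if cycle:
                return cycle
    return None


# Hypothetical model names, for illustration only.
models = {
    "InvoiceNumbering": ["PurchTotals"],
    "PurchTotals": ["InvoiceNumbering"],
    "Reports": [],
}
```

Models that end up in the same cycle cannot be shipped separately, which is exactly what pushed us towards a single model.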

A model in D365 is a collection of source files located in a separate directory. When compiled, they are built into a separate library that references other libraries.

Therefore, merging into one model on DevBox came down to transferring files from one directory to another and deleting old directories.

So, we built a new model, got its latest version on each DevBox, after which we continued to work within the framework of one model on development environments.

By that time we had already transferred a couple of models to the SAT environment for testing, so we had to remove them and release the single new one.

All updates to the SAT environment, including the removal of models, were made using packages. We created a package with empty versions of the models to be removed and added a script with the names of these models to it. Then we built a package with the new model and deployed it to the SAT environment. That is how SAT got the new model.

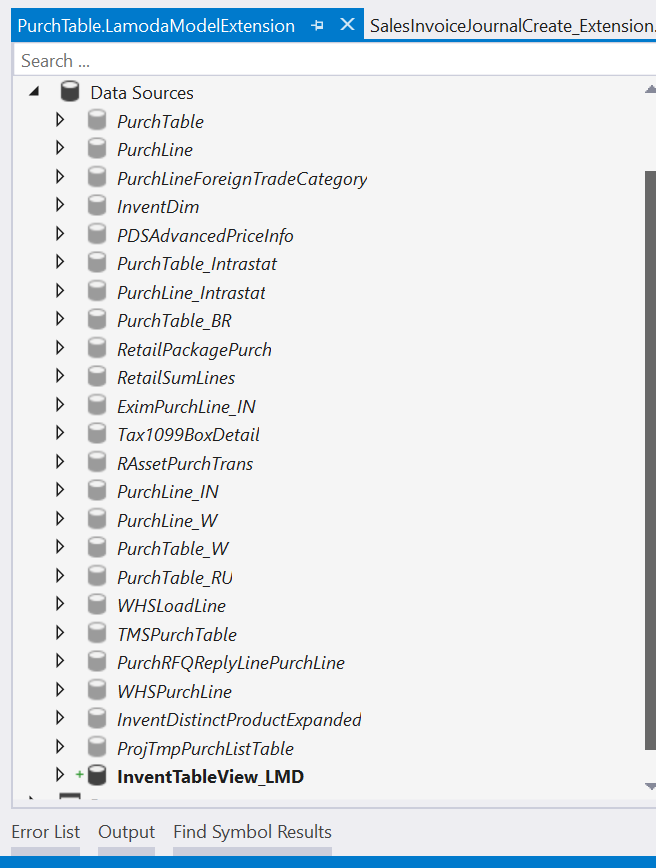

When the models were merged, we noticed that each developer named object extensions in their own way. We agreed on naming rules based on a template, for example: PurchTable.LamodaModelFormExtension, PurchTableTypeLamodaModelClass_Extension.

We also agreed within the team that for each standard object we create only one extension and make all changes in it.
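A naming rule like this is easy to check automatically. Below is a minimal sketch in Python; it is not a tool we described in the talk. It assumes that `LamodaModel` in the two examples is the model name and that the template has exactly these two shapes, `<Object>.<Model>FormExtension` and `<Object><Model>Class_Extension`. Both assumptions are my reading of the examples, and the function name is hypothetical.

```python
import re

# Assumption: "LamodaModel" in the examples above is the model name.
MODEL = "LamodaModel"

# Assumed shapes of the template; only these two appear in the text.
FORM_EXTENSION = re.compile(rf"^[A-Z]\w*\.{re.escape(MODEL)}FormExtension$")
CLASS_EXTENSION = re.compile(rf"^[A-Z]\w*{re.escape(MODEL)}Class_Extension$")


def follows_convention(name):
    """Return True if `name` matches one of the two agreed shapes."""
    return bool(FORM_EXTENSION.match(name) or CLASS_EXTENSION.match(name))


def violations(names):
    """Return the names that do not follow the convention, in input order."""
    return [name for name in names if not follows_convention(name)]
```

A check like this could run over the object names in a changeset before review, so that naming issues are caught before they show up during an update.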

I selected some interesting modifications that we made at this stage. They show possible development approaches in D365.

Task 1

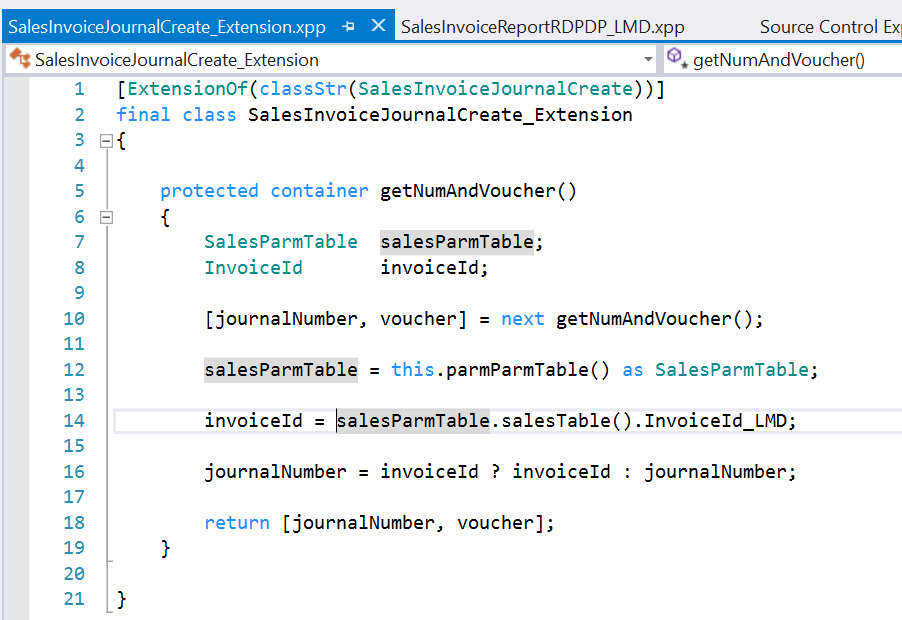

When posting an invoice for a sales order, the invoice number had to be replaced with the number from the order. To do this, we identified a standard class that could be extended, which made the modification possible.

We made an extension of the standard SalesInvoiceJourCreate class. Its getNumAndVoucher() method contains a call to next, which is our new super: it calls the standard method code. After the standard code had run, we replaced the invoice number with the desired value.

This is one of our development approaches: using extensions and adding our own code before or after the standard code (or both before and after).
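The order of calls in this approach can be shown with a plain Python analogy. This is not X++ and not the real D365 class: in X++ the extension augments the standard class in place, whereas here ordinary inheritance and `super()` stand in for the extension and for `next`. The return shape (a pair of invoice number and voucher) and the sample values are assumptions made for the illustration.

```python
class SalesInvoiceJourCreate:
    """Stand-in for the standard class; not the real D365 implementation."""

    def __init__(self, order_number):
        self.order_number = order_number

    def get_num_and_voucher(self):
        # Pretend the standard code takes both values from number sequences.
        return "INV-000123", "VCH-000456"


class SalesInvoiceJourCreateExtension(SalesInvoiceJourCreate):
    """Python stand-in for the extension class."""

    def get_num_and_voucher(self):
        # Code placed here would run before the standard logic.
        _standard_number, voucher = super().get_num_and_voucher()  # plays the role of `next`
        # Code placed here runs after the standard logic:
        # keep the voucher, replace the invoice number with the order number.
        return self.order_number, voucher
```

Calling `SalesInvoiceJourCreateExtension("SO-42").get_num_and_voucher()` runs the standard logic first and then swaps only the invoice number, leaving the voucher produced by the standard code untouched.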

Task 2

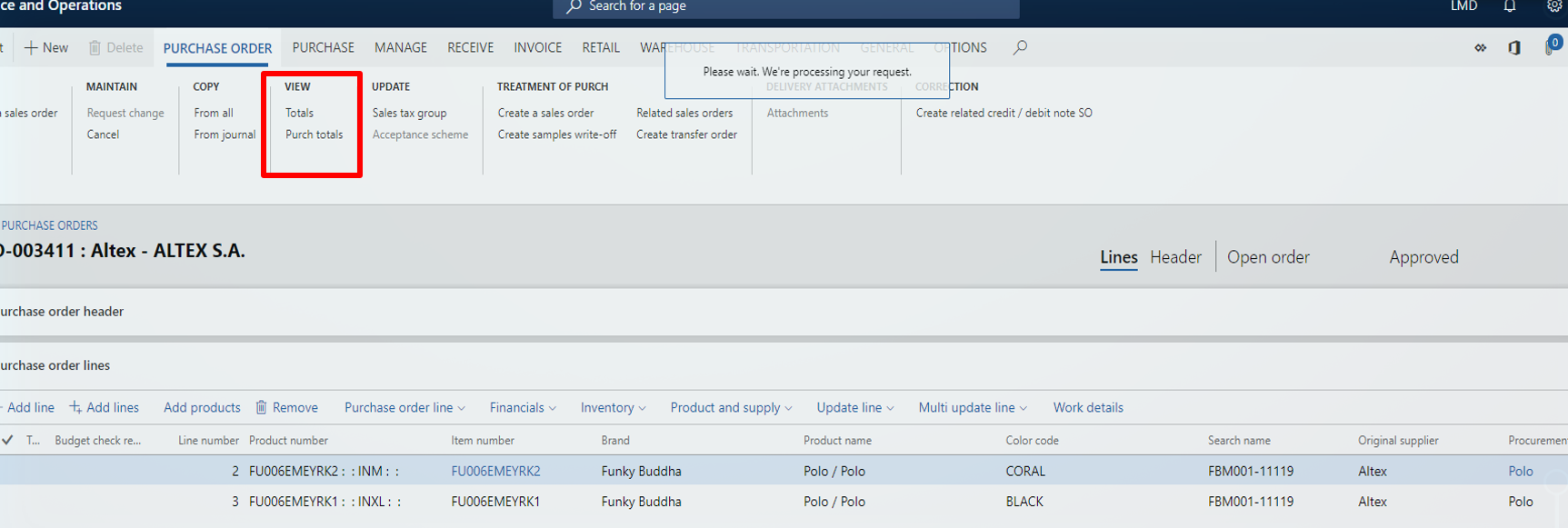

We had to change how purchase order totals are displayed: the totals needed to be grouped by the supplier’s invoice number from the purchase order lines. Here we did not find an extension point that would not seriously hurt performance, so we built our own version of the totals without touching the standard ones.

Task 3

Another interesting modification: fields from the item master had to be added to the lines of the purchase order form, with the ability to filter by them. In previous versions this modification would have been trivial: add the table to the form as a data source and override two methods.

In version 7.3 we could not extend methods on a standard form data source. To implement filtering without creating a new form, we added a view to the form as a data source.

The ability to extend data source methods appeared in version 8.1 of D365.

Conclusions after the second iteration

At this stage, we have developed the basic modifications necessary to launch the project.

- We introduced naming rules for extensions. They not only made the names consistent and understandable but also simplified later updates, since our names no longer coincided with the names of standard objects from the service pack.

- I was pleased with how quickly cross-references are updated when building a project or model; it is very conveniently organized in this version.

- Models are updated differently in different types of environments. We saw this for ourselves when merging the models.

- Before starting the development of a new modification, you need to get the latest version of the model, since development is carried out within the framework of one model.

- Data entities turned out to be a very convenient mechanism for importing and exporting data through Excel when updating data in prod. Our Data & Analytics department now uses them to retrieve data from our cloud-based D365.

We finished the main development on time. We went live, and the model was in prod. Then we ran into the problem of releasing only tested modifications within a single model. We also wanted to make debugging easier while modifications were being tested and to speed up updates of the test environment.

How it works now

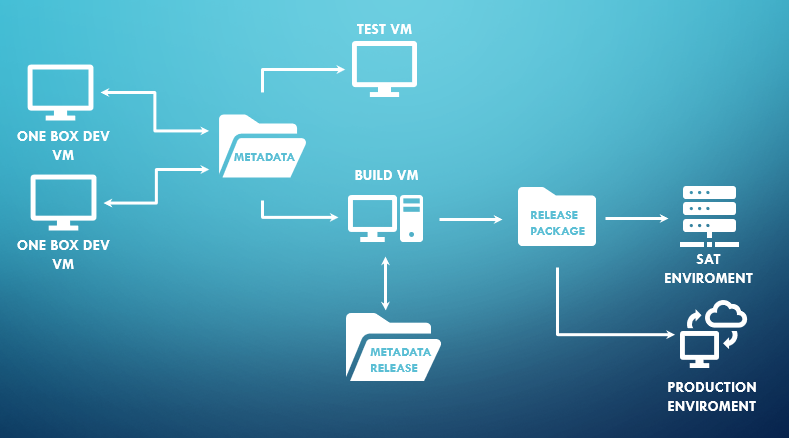

In the last iteration, we added two environments: build and test. After all environments were configured and verified, we simplified testing and learned to release only tested modifications within the model.

For testing, we deployed a 1-tier environment and connected it to the development branch in version control. An update now consisted of getting the latest version of the model and building it. In this environment we could debug, just as on a regular DevBox.

Release packages were now built on the new build environment. Tested modifications were transferred to a new branch in version control as changesets (sets of changes checked in to version control), from the earliest to the latest.

Then we deployed the package to the SAT environment, where user testing took place, after which we scheduled the package on the LCS portal for release to prod. This is how we set up the release process using the build environment.
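The rule for filling the release branch (only tested changes, from the earliest to the latest) can be written down in a few lines. This is a sketch in Python under my own assumptions, not our actual tooling: the `Changeset` fields and the task identifiers are hypothetical, and the actual merge is still done in version control.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Changeset:
    number: int        # changeset number in version control
    modification: str  # hypothetical task identifier
    tested: bool       # True once the modification has passed testing


def changesets_to_release(changesets):
    """Return tested changesets ordered from the earliest to the latest."""
    return sorted((c for c in changesets if c.tested), key=lambda c: c.number)


# Hypothetical history of the development branch.
history = [
    Changeset(105, "TASK-3", True),
    Changeset(101, "TASK-1", True),
    Changeset(103, "TASK-2", False),
]
```

With this history, changesets 101 and 105 go to the release branch in that order, while 103 stays in the development branch until it passes testing. The sketch does not detect the case where a tested changeset depends on an untested earlier one; that still has to be caught during the merge or the build.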

We also decided to review not projects but the changesets checked in to version control for each modification.

The first cloud version update

We worked on the cloud version, so we had to update regularly. The first update was the transition from version 7.3 to version 8.0. It took about two weeks.

Admittedly, we created most of the problems ourselves, but we also overcame them:

- We had not agreed on naming rules for extensions of standard objects early enough. During the first update, our object names coincided with the names of objects in the service pack.

- When updating cloud environments, we made sure to log out of the AOS machines, because the update process could not complete while a user was logged in.

- The update package for prod and SAT environments needed to be combined with the model package.

Today, updating all our environments takes about 3-4 days and happens without involving developers. We can even ship a release at the same time as the update; the main thing is that build, SAT and prod are on the same version.

The update process starts with downloading the update package on the LCS portal. DevBox and test are updated first, then build, and finally SAT and prod.
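The update order and the "same version" condition for a release can be summarized in a short sketch. It is written in Python purely for illustration; the environment keys, the version strings and both function names are hypothetical, and the updates themselves are applied through LCS, not by a script like this.

```python
# Order in which our environments are updated, as described above.
UPDATE_WAVES = [
    ["devbox", "test"],  # updated first
    ["build"],           # then the build environment
    ["sat", "prod"],     # SAT and prod last
]


def next_wave(versions, target):
    """Return the environments of the first wave not yet on `target`."""
    for wave in UPDATE_WAVES:
        pending = [env for env in wave if versions[env] != target]
        if pending:
            return pending
    return []


def can_release(versions):
    """A release is possible when build, SAT and prod run the same version."""
    return versions["build"] == versions["sat"] == versions["prod"]


# Hypothetical state in the middle of an update from 7.3 to 8.0.
versions = {"devbox": "8.0", "test": "8.0", "build": "7.3", "sat": "7.3", "prod": "7.3"}
```

In this state the next environment to update is build, and a release is still possible because build, SAT and prod are all on 7.3. Once build alone moves to 8.0, releases have to wait until SAT and prod catch up.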

Results of the entire first project

- We have gained experience in building the D365 application architecture.

- We developed a new approach to code review.

- We established rules for transferring databases to DevBox (in D365 it is important to do initial testing on DevBox, and now we even test developers on DevBox).

- We wrote development guidelines for D365.

- We learned to develop in the cloud.

All this experience has helped us develop the project more thoughtfully. Now that we know the capabilities of the system, we can design the architecture better, or rather define tasks better. The processes built around the project make it fairly easy to bring in developers who are writing for D365 for the first time.