Building aggregators of scientific articles

Hi all! In this post I want to talk about some ways to automate the routine tasks that a research programmer faces.

In my work, I often need to study scientific articles. This helps me stay up to date and explore new techniques and areas.

It is useful to record what you read so that you don't have to keep returning to the same material to find something. I usually take notes, but copying article links into them, downloading the articles, and building tables can be tedious. The creators of GitHub repositories that aggregate articles on a particular topic have done a great job; examples include collections of articles about code clones and about fuzzing. These repositories got me thinking about automating the creation of such lists. As a result, I wrote down the sequence of actions that I perform when researching a new topic:

Step 1. Searching for articles in well-known sources (for example, Semantic Scholar or arXiv).

Step 2. Reading the abstracts, which is often enough to determine whether an article is relevant to the topic.

Step 3. Extracting important objects from the articles: links to GitHub, graphs, and pictures (so that you can, for example, show an article's results in a presentation without degrading the image quality), as well as the year of publication and the journal, which help judge the article's level and how current it is.

Step 4. Detailed study of the selected articles.

The collection of information for the first three steps can be automated. Let's take a closer look at them.
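As a rough illustration of how Step 2 might be partly automated, the sketch below ranks abstracts by how many topic keywords they mention. The function name `rank_by_keywords`, the keyword list, and the sample records are all hypothetical. Simple keyword matching is an assumption made here for illustration, not something taken from the repository, and it only narrows down what still has to be read.

```python
import re


def rank_by_keywords(papers, keywords):
    """Return (score, paper) pairs, best match first.

    The score is the number of distinct keywords found in the abstract.
    Papers that match no keyword are dropped.
    """
    scored = []
    for paper in papers:
        # The abstract may be missing or None, so fall back to an empty string
        abstract = (paper.get("abstract") or "").lower()
        # Count each keyword at most once, matching on word boundaries
        score = sum(
            1
            for kw in keywords
            if re.search(r"\b" + re.escape(kw.lower()) + r"\b", abstract)
        )
        if score > 0:
            scored.append((score, paper))
    # Highest score first; the sort is stable, so ties keep their input order
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored


# Hypothetical sample data
sample_papers = [
    {"title": "A", "abstract": "We study coverage-guided fuzzing of parsers."},
    {"title": "B", "abstract": "A survey of code clone detection."},
    {"title": "C", "abstract": None},
]

for score, paper in rank_by_keywords(sample_papers, ["fuzzing", "coverage"]):
    print(score, paper["title"])
```

A ranking like this can decide which abstracts to read first, while the final relevance decision stays with the reader.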

Searching for articles

Semantic Scholar and arXiv each provide an API for searching articles by query. With it, you can retrieve basic information about articles and download them if they are open access.

For example, following the documentation for the arxiv package, searching for articles by query looks like this:

    import arxiv

    # Construct the default API client.
    client = arxiv.Client()

    # Search for the 10 most recent articles matching the keyword "quantum".
    search = arxiv.Search(
        query="quantum",
        max_results=10,
        sort_by=arxiv.SortCriterion.SubmittedDate,
    )

    results = client.results(search)

For the articles found, you can get the abstract, the authors, the year of publication, a download link (for open access articles), and so on:

    papers = [
        {
            "title": result.title,
            "authors": ", ".join([author.name for author in result.authors]),
            "journal": result.journal_ref,
            "year": result.published.year,
            "abstract": result.summary,
            "openAccessPdf": {"url": result.pdf_url},
            "paperId": result.entry_id.split("/")[-1],  # last part of the URL after the slash
        }
        for result in results
    ]

Articles can be downloaded using the following code:

    # client.results() returns a generator, so request the results again
    results = client.results(search)
    paper_to_download = next(results)
    paper_to_download.download_pdf(dirpath=".", filename="some-article.pdf")

For Semantic Scholar, there are examples of using the API in repositories.

Equivalent code for the Semantic Scholar API might look like this:

import requests

import json

from datetime import datetime

x_api_key = "your-api-key" # you can get it from official site

class SemanticScholarClient:

def __init__(self):

self.base_url = "https://api.semanticscholar.org/graph/v1"

def search(self, query, max_results=10, sort_by="relevance"):

endpoint = f"{self.base_url}/paper/search"

params = {

"query": query,

"limit": max_results,

"fields": "paperId,title,isOpenAccess,openAccessPdf,abstract,year,authors,citationCount,url,venue",

"sort": sort_by

}

headers = {

"X-API-KEY": x_api_key

}

response = requests.get(endpoint, params=params, headers=headers)

response.raise_for_status()

return response.json()['data']

class SemanticScholarSearch:

def __init__(self, query, max_results=10, sort_by="relevance"):

self.query = query

self.max_results = max_results

self.sort_by = sort_by

# Usage

client = SemanticScholarClient()

# Search for the 10 most recent articles matching the keyword "quantum."

search = SemanticScholarSearch(

query="quantum",

max_results=10,

sort_by="publicationDate:desc" # Sort by publication date, descending

)

results = client.search(search.query, search.max_results, search.sort_by)

papers = [

{

"title": result['title'],

"authors": ", ".join([author['name'] for author in result['authors']]),

"journal": result.get('venue', ''),

"year": result['year'],

"abstract": result.get('abstract', ''),

"openAccessPdf": {"url": result.get('openAccessPdf', {}).get('url', '')} if result.get('openAccessPdf', {}) else None,

"paperId": result['paperId'],

} for result in results if result

]

# Download papers

for paper in papers:

if paper['openAccessPdf']:

print(f"Title: {paper['title']}")

response = requests.get(paper['openAccessPdf']['url'])

response.raise_for_status()

filepath = f"{paper['title']}.pdf"

with open(filepath, 'wb') as f:

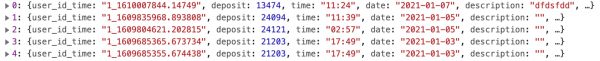

f.write(response.content)Selecting objects

To automate the extraction of objects from an article, you can use the pdfplumber library. This article was very helpful when writing code with the library. By parsing the text of the PDF and applying regular expressions, you can extract the GitHub links that appear in the article:

    import re

    import pdfplumber


    def extract_text_from_pdf(pdf):
        text = ""
        for page in pdf.pages:
            # Guard against pages with no extractable text
            # (some pdfplumber versions return None for them)
            text += (page.extract_text() or "") + "\n"
        return text.strip()


    # The context manager closes the file when extraction is done
    with pdfplumber.open("some-article.pdf") as pdf:
        text_from_pdf = extract_text_from_pdf(pdf)

    github_regex = r"https?://(?:www\.)?github\.com/[\w-]+/[\w.-]+[\w-]"
    github_links = re.findall(github_regex, text_from_pdf)

The PyMuPDF library is suitable for extracting images. Here is a detailed guide to using the library. To save the pictures from an article, you can write the following code:

    import fitz  # PyMuPDF
    from tqdm import tqdm

    doc = fitz.Document("some-article.pdf")

    for i in tqdm(range(len(doc)), desc="pages"):
        cnt = 0  # counter to distinguish images on the same page
        for img in tqdm(doc.get_page_images(i), desc="page_images"):
            xref = img[0]
            pix = fitz.Pixmap(doc, xref)
            # PNG cannot store CMYK data, so convert such images to RGB first
            if pix.n - pix.alpha > 3:
                pix = fitz.Pixmap(fitz.csRGB, pix)
            pix.save("img_%s_%s.png" % (i, cnt))
            cnt += 1

This method does not manage to extract every image from every article, so there is plenty of room here for ideas on how to improve it.
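One small improvement is to skip images that are unlikely to be figures, such as icons and logos, and images that are reused on several pages. The sketch below works on tuples shaped like those returned by `doc.get_page_images(i)`, whose first four items are the xref, the soft-mask xref, the width, and the height. The helper name `select_figure_xrefs` and the 100-pixel thresholds are assumptions chosen for illustration, and the sample tuples are made up.

```python
def select_figure_xrefs(page_images, seen, min_width=100, min_height=100):
    """Pick xrefs of images large enough to be figures and not seen before.

    page_images: tuples shaped like PyMuPDF's get_page_images() output,
                 i.e. (xref, smask, width, height, ...).
    seen: set of xrefs already handled; it is updated in place.
    """
    selected = []
    for img in page_images:
        xref, width, height = img[0], img[2], img[3]
        if xref in seen:
            # The same image object is referenced again (e.g. a logo on every page)
            continue
        seen.add(xref)
        if width >= min_width and height >= min_height:
            selected.append(xref)
    return selected


# Hypothetical tuples standing in for two pages of get_page_images() output
page0 = [
    (12, 0, 640, 480, 8, "DeviceRGB", "", "Im1", "DCTDecode"),
    (13, 0, 16, 16, 8, "DeviceRGB", "", "Im2", "FlateDecode"),
]
page1 = [
    (12, 0, 640, 480, 8, "DeviceRGB", "", "Im1", "DCTDecode"),
    (20, 0, 300, 200, 8, "DeviceRGB", "", "Im3", "FlateDecode"),
]

seen_xrefs = set()
print(select_figure_xrefs(page0, seen_xrefs))
print(select_figure_xrefs(page1, seen_xrefs))
```

In the extraction loop, only the returned xrefs would then be passed to `fitz.Pixmap`, with one shared `seen` set for the whole document.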

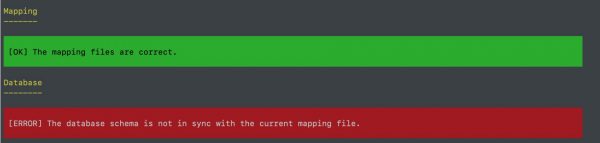

The stages described above are implemented in the Researcher-Helper repository. It currently supports search in Semantic Scholar and arXiv and, given a query, generates an HTML table of the articles found. I will be glad to receive feedback, ideas for improvement, and advice on implementing the described stages!
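For readers who want to experiment with the table-generation step, here is a minimal sketch that turns a list of paper dictionaries into an HTML table using only the standard library. It is not the repository's implementation. The function name `papers_to_html` and the chosen columns are assumptions, and the keys follow the `papers` dictionaries built in the search examples.

```python
from html import escape


def papers_to_html(papers, columns=("title", "authors", "journal", "year")):
    """Render paper dictionaries as a simple HTML table string."""
    header = "".join(f"<th>{escape(col)}</th>" for col in columns)
    rows = []
    for paper in papers:
        cells = []
        for col in columns:
            value = paper.get(col)
            # Escape every value so titles with <, > or & cannot break the markup
            text = escape("" if value is None else str(value))
            pdf_url = (paper.get("openAccessPdf") or {}).get("url")
            if col == "title" and pdf_url:
                # Link the title to the PDF when an open access URL is known
                text = f'<a href="{escape(pdf_url, quote=True)}">{text}</a>'
            cells.append(f"<td>{text}</td>")
        rows.append("<tr>" + "".join(cells) + "</tr>")
    return "<table>\n<tr>" + header + "</tr>\n" + "\n".join(rows) + "\n</table>"


# Hypothetical sample record
sample = [
    {
        "title": "Fuzzing <fast>",
        "authors": "A. Author",
        "journal": None,
        "year": 2024,
        "openAccessPdf": {"url": "https://example.org/p.pdf"},
    }
]
html_table = papers_to_html(sample)
print(html_table)
```

The resulting string can be written to a file and opened in a browser.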