Building Kubernetes clusters using Kubernetes itself

Do you think I’m out of my mind? That is the reaction I got when I first suggested deploying Kubernetes clusters using Kubernetes.

But I am convinced that there is no more effective tool for automating cloud infrastructure than Kubernetes itself. With one central K8s cluster, we can create hundreds of other controlled K8s clusters. In this article, I will show you how to do this.

Note. SAP Concur uses AWS EKS, but the concepts discussed here also apply to Google GKE, Azure AKS, and any other cloud provider’s managed Kubernetes service.

Production-ready clusters

Creating a Kubernetes cluster with any of the common cloud providers has never been easier. For example, in AWS EKS, a cluster can be created with a single command:

$ eksctl create cluster

It is quite a different matter if you need a Kubernetes cluster that is production-ready. The term “production-ready” can be interpreted in different ways, but SAP Concur uses the following four stages to build and deliver Kubernetes clusters that are ready to run production workloads.

Four stages of assembly

Preliminary testing. A checklist of simple tests against the target AWS environment that validate all prerequisites before the cluster build starts. For example, the tests check available IP addresses in subnets, AWS exports, SSM parameters, or other variables.

EKS control plane and node group. The actual build of the AWS EKS cluster, with worker nodes attached.

Installing add-ons. Let’s add our favorite seasoning to the cluster. 🙂 Optionally, you can install add-ons such as Istio, Logging Integration, Autoscaler, etc.

Cluster validation. At this stage, we validate the cluster (EKS core components and add-ons) from a functional point of view before putting it into production. The more tests you write, the more soundly you sleep. (Especially if you are on duty in technical support!)

Glue it all together

The four build steps involve different tools and techniques (we’ll come back to them later). We needed a universal tool for all steps that would glue everything together, support sequential and parallel execution, be event-driven, and preferably visualize the build.

We found what we needed in the Argo family of tools, in particular Argo Events and Argo Workflows. They both run on Kubernetes as CRDs and rely on declarative YAML, like many other Kubernetes deployments.

We’ve got the perfect combination: imperative orchestration and declarative automation.

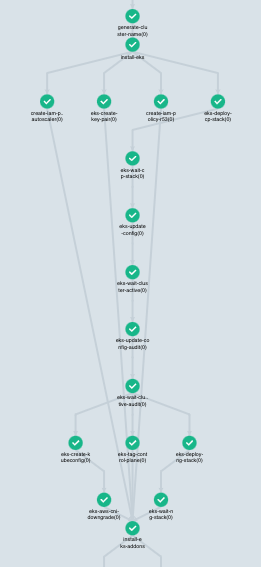

Phased implementation of a process in Argo Workflows

Argo Workflows is an open-source, container-native workflow engine for orchestrating parallel jobs on Kubernetes. It is implemented as a Kubernetes CRD.

Note. If you are familiar with K8s YAML, I promise you will figure it out.
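To give a feel for the format before the real build steps, here is a minimal sketch of an Argo Workflows DAG in which one task runs first and two tasks then run in parallel. The names (`dag-demo-`, `echo`, the task names) and the `alpine` image are illustrative choices, not taken from the SAP Concur setup.

```yaml
# Minimal DAG sketch: "first" runs alone, then "second-a" and "second-b"
# run in parallel because both depend only on "first".
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: dag-demo-          # illustrative name
spec:
  entrypoint: main
  templates:
    - name: main
      dag:
        tasks:
          - name: first
            template: echo
            arguments:
              parameters:
                - name: message
                  value: "runs first"
          - name: second-a
            dependencies: [first]
            template: echo
            arguments:
              parameters:
                - name: message
                  value: "runs after first"
          - name: second-b
            dependencies: [first]
            template: echo
            arguments:
              parameters:
                - name: message
                  value: "runs in parallel with second-a"
    # Reusable container template that prints its input parameter.
    - name: echo
      inputs:
        parameters:
          - name: message
      container:
        image: alpine:3.19         # illustrative image
        command: [echo, "{{inputs.parameters.message}}"]
```

The `dependencies` field is what expresses ordering; tasks without a dependency between them are free to run concurrently.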

Let’s see what each of these four build steps might look like in Argo Workflows.

1. Preliminary testing

We write the tests with the BATS framework. Writing a preliminary test in BATS is very simple:

    #!/usr/bin/env bats

    @test "More than 100 available IP addresses in subnet MySubnet" {
      # Ask AWS how many free IP addresses the subnet still has
      AvailableIpAddressCount=$(aws ec2 describe-subnets --subnet-ids MySubnet | jq -r '.Subnets[0].AvailableIpAddressCount')

      # The test passes only if more than 100 addresses are free
      [ "${AvailableIpAddressCount}" -gt 100 ]
    }

Running the above BATS test file (avail-ip-addresses.bats) along with three other fictional BATS tests via Argo Workflows looks like this:

    - name: preflight-tests
      templateRef:
        name: argo-templates
        template: generic-template
      arguments:
        parameters:
          - name: command
            value: "{{item}}"
      # One task instance is started per item
      withItems:
        - "bats /tests/preflight/accnt-name-export.bats"
        - "bats /tests/preflight/avail-ip-addresses.bats"
        - "bats /tests/preflight/dhcp.bats"
        - "bats /tests/preflight/subnet-export.bats"

2. EKS control plane and node group

Any convenient tool can be used to build an EKS cluster, for example eksctl, CloudFormation, or Terraform. Building a basic EKS cluster in Argo Workflows as two dependent steps, using CloudFormation templates (eks-controlplane.yaml and eks-nodegroup.yaml), looks like this:

    - name: eks-controlplane
      dependencies: ["preflight-tests"]
      templateRef:
        name: argo-templates
        template: generic-template
      arguments:
        parameters:
          - name: command
            # Trailing backslashes keep this a single shell command
            value: |
              aws cloudformation deploy \
              --stack-name {{workflow.parameters.CLUSTER_NAME}} \
              --template-file /eks-core/eks-controlplane.yaml \
              --capabilities CAPABILITY_IAM
    - name: eks-nodegroup
      dependencies: ["eks-controlplane"]
      templateRef:
        name: argo-templates
        template: generic-template
      arguments:
        parameters:
          - name: command
            value: |
              aws cloudformation deploy \
              --stack-name {{workflow.parameters.CLUSTER_NAME}}-nodegroup \
              --template-file /eks-core/eks-nodegroup.yaml \
              --capabilities CAPABILITY_IAM

3. Installing add-ons

To install add-ons, you can use kubectl, helm, kustomize, or a combination of them. For example, installing the metrics-server add-on with helm template and kubectl, provided that its installation was requested, might look like this in Argo Workflows:

    - name: metrics-server
      dependencies: ["eks-nodegroup"]
      templateRef:
        name: argo-templates
        template: generic-template
      # Skip the task unless a metrics-server version was requested
      when: "'{{workflow.parameters.METRICS-SERVER}}' != none"
      arguments:
        parameters:
          - name: command
            # Render the chart locally, then pipe the manifests to kubectl
            value: |
              helm template /addons/{{workflow.parameters.METRICS-SERVER}}/ \
              --name "metrics-server" \
              --namespace "kube-system" \
              --set global.registry={{workflow.parameters.CONTAINER_HUB}} |
              kubectl apply -f -

4. Cluster validation

To validate add-ons, we use DETIK, a BATS library that makes writing tests for K8s much easier.

    #!/usr/bin/env bats

    load "lib/utils"
    load "lib/detik"

    DETIK_CLIENT_NAME="kubectl"
    DETIK_CLIENT_NAMESPACE="kube-system"

    @test "verify the deployment metrics-server" {
      # Check that the expected resources exist
      run verify "there are 2 pods named 'metrics-server'"
      [ "$status" -eq 0 ]

      run verify "there is 1 service named 'metrics-server'"
      [ "$status" -eq 0 ]

      # Poll until the pods report the running status
      run try "at most 5 times every 30s to find 2 pods named 'metrics-server' with 'status' being 'running'"
      [ "$status" -eq 0 ]

      run try "at most 5 times every 30s to get pods named 'metrics-server' and verify that 'status' is 'running'"
      [ "$status" -eq 0 ]
    }

Running the above BATS DETIK test file (metrics-server.bats), provided that the metrics-server add-on is installed, can be implemented in Argo Workflows like this:

    - name: test-metrics-server
      dependencies: ["metrics-server"]
      templateRef:
        name: worker-containers
        template: addons-tests-template
      when: "'{{workflow.parameters.METRICS-SERVER}}' != none"
      arguments:
        parameters:
          - name: command
            value: |
              bats /addons/test/metrics-server.bats

Just imagine how many more tests you can plug in here. Need Sonobuoy, Popeye, or Fairwinds Polaris tests? Just plug them in through Argo Workflows!

At this point, you should have a fully functional, production-ready AWS EKS cluster with the metrics-server add-on installed. All tests have passed, and the cluster can be put into operation. Done!
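The task snippets in the four steps above are fragments of a single DAG. As a rough sketch of how they might fit together in one Workflow, assuming the `argo-templates` and `worker-containers` WorkflowTemplates already exist in the cluster: the workflow name, the parameter values, and the registry host below are placeholders, and the long commands are shortened to keep the skeleton readable.

```yaml
# Skeleton only: parameter values are placeholders, commands are abbreviated.
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: eks-build-              # placeholder name
spec:
  entrypoint: build-cluster
  arguments:
    parameters:
      - name: CLUSTER_NAME
        value: demo-cluster             # placeholder
      - name: METRICS-SERVER
        value: none                     # "none" skips the add-on and its test
      - name: CONTAINER_HUB
        value: registry.example.com     # placeholder
  templates:
    - name: build-cluster
      dag:
        tasks:
          # Stage 1: preliminary tests
          - name: preflight-tests
            templateRef: {name: argo-templates, template: generic-template}
            arguments:
              parameters:
                - name: command
                  value: "bats /tests/preflight/avail-ip-addresses.bats"
          # Stage 2: control plane, then node group
          - name: eks-controlplane
            dependencies: [preflight-tests]
            templateRef: {name: argo-templates, template: generic-template}
            arguments:
              parameters:
                - name: command
                  value: "aws cloudformation deploy --stack-name {{workflow.parameters.CLUSTER_NAME}} --template-file /eks-core/eks-controlplane.yaml --capabilities CAPABILITY_IAM"
          - name: eks-nodegroup
            dependencies: [eks-controlplane]
            templateRef: {name: argo-templates, template: generic-template}
            arguments:
              parameters:
                - name: command
                  value: "aws cloudformation deploy --stack-name {{workflow.parameters.CLUSTER_NAME}}-nodegroup --template-file /eks-core/eks-nodegroup.yaml --capabilities CAPABILITY_IAM"
          # Stage 3: optional add-on
          - name: metrics-server
            dependencies: [eks-nodegroup]
            when: "'{{workflow.parameters.METRICS-SERVER}}' != none"
            templateRef: {name: argo-templates, template: generic-template}
            arguments:
              parameters:
                - name: command
                  value: "helm template /addons/{{workflow.parameters.METRICS-SERVER}}/ --namespace kube-system | kubectl apply -f -"
          # Stage 4: validation of the add-on
          - name: test-metrics-server
            dependencies: [metrics-server]
            when: "'{{workflow.parameters.METRICS-SERVER}}' != none"
            templateRef: {name: worker-containers, template: addons-tests-template}
            arguments:
              parameters:
                - name: command
                  value: "bats /addons/test/metrics-server.bats"
```

Because every task declares its predecessor in `dependencies`, the four stages run strictly in order, while items inside a stage (for example several preflight tests) remain free to run in parallel.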

But we are not saying goodbye yet – I left the most interesting thing for last.

Workflow templates

Argo Workflows supports reusable WorkflowTemplates. Each of the four build stages is such a template. In effect, we end up with building blocks that can be combined arbitrarily. All stages of the build can be run in order through the main workflow (as in the example above), or they can be run independently of each other. This flexibility is made possible by Argo Events.
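The task examples earlier reference a template named `generic-template` inside a WorkflowTemplate named `argo-templates`, but the article does not show its definition. The following is only a guess at what such a template could look like: it accepts a `command` parameter and runs it in a shell. The container image is hypothetical and would need to bundle the tools used in the commands (aws, bats, jq, helm, kubectl).

```yaml
# Hypothetical definition; the real SAP Concur template is not shown in the article.
apiVersion: argoproj.io/v1alpha1
kind: WorkflowTemplate
metadata:
  name: argo-templates
spec:
  templates:
    - name: generic-template
      inputs:
        parameters:
          - name: command            # shell command passed in by the calling task
      container:
        image: example.com/cluster-builder:latest   # hypothetical tooling image
        command: [sh, -c]
        args: ["{{inputs.parameters.command}}"]
```

A task then points at it with `templateRef` (`name: argo-templates`, `template: generic-template`) and supplies the `command` argument, which is exactly the shape of the tasks in the build steps.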

Argo Events

Argo Events is an event-driven framework for Kubernetes that lets you trigger K8s objects, Argo Workflows, serverless workloads, and other operations based on various event sources such as webhooks, S3 events, schedules, message queues, Google Cloud Pub/Sub, SNS, SQS, etc.

The cluster build is triggered by an API call (Argo Events) with a JSON payload. In addition, each of the four build stages (WorkflowTemplates) has its own API endpoint. Kubernetes operators (that is, people) get clear advantages:

Not sure what state your cloud is in? Call the pre-test API.

Want to build a bare EKS cluster? Call the eks-core API (control plane and node group).

Want to install or reinstall add-ons on an existing EKS cluster? Call the add-ons API.

Is the cluster acting up and you need to test it quickly? Call the testing API.
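As an illustration of how one of these endpoints could be wired up, here is a sketch of a webhook EventSource and a Sensor that starts a workflow from a JSON payload. Everything specific in it is an assumption: the resource names, the port, the `/preflight` endpoint, the `cluster_name` payload key, the service account, and a WorkflowTemplate called `preflight-tests` that defines its own entrypoint. The field layout follows the Argo Events v1 webhook example; older releases use a different Sensor schema.

```yaml
# Hypothetical wiring for a "pre-test" endpoint; adjust to your Argo Events version.
apiVersion: argoproj.io/v1alpha1
kind: EventSource
metadata:
  name: cluster-api                  # hypothetical name
spec:
  service:
    ports:
      - port: 12000
        targetPort: 12000
  webhook:
    preflight:
      port: "12000"
      endpoint: /preflight           # hypothetical endpoint
      method: POST
---
apiVersion: argoproj.io/v1alpha1
kind: Sensor
metadata:
  name: preflight-sensor             # hypothetical name
spec:
  template:
    serviceAccountName: operate-workflow-sa   # must be allowed to create Workflows
  dependencies:
    - name: preflight-request
      eventSourceName: cluster-api
      eventName: preflight
  triggers:
    - template:
        name: run-preflight
        k8s:
          operation: create
          source:
            resource:
              apiVersion: argoproj.io/v1alpha1
              kind: Workflow
              metadata:
                generateName: preflight-
              spec:
                workflowTemplateRef:
                  name: preflight-tests       # hypothetical WorkflowTemplate
                arguments:
                  parameters:
                    - name: CLUSTER_NAME
                      value: placeholder      # overwritten from the payload
          parameters:
            # Copy body.cluster_name from the request into the first parameter
            - src:
                dependencyName: preflight-request
                dataKey: body.cluster_name
              dest: spec.arguments.parameters.0.value
```

With this in place, a POST to the `/preflight` endpoint with a body such as `{"cluster_name": "demo-cluster"}` would create a workflow whose `CLUSTER_NAME` parameter is taken from the request.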

Argo features

Argo Events and Argo Workflows offer a wide range of functionality right out of the box, without burdening you with unnecessary work.

Here are seven of the most requested features:

Parallelism

Dependencies

Retries (see pre-tests and validation tests highlighted in red in the pictures above: they failed, but Argo retried until they passed)

Conditions

S3 support

Workflow templates

Event sensor parameters
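Of the features listed above, retries are the one not shown in the build steps. A minimal sketch of how a retry policy could be attached to a test template with `retryStrategy` follows; the template names, the image, and the specific limits are illustrative assumptions, not the values SAP Concur uses.

```yaml
# Hypothetical template that reruns a failing test command with backoff.
apiVersion: argoproj.io/v1alpha1
kind: WorkflowTemplate
metadata:
  name: retrying-tests               # hypothetical name
spec:
  templates:
    - name: bats-with-retry          # hypothetical name
      inputs:
        parameters:
          - name: command
      retryStrategy:
        limit: "5"                   # give up after 5 retries
        retryPolicy: OnFailure       # retry when the container exits non-zero
        backoff:
          duration: "30s"            # wait before the first retry
          factor: "2"                # double the wait on each further retry
      container:
        image: example.com/cluster-builder:latest   # hypothetical tooling image
        command: [sh, -c]
        args: ["{{inputs.parameters.command}}"]
```

This suits checks that depend on eventually consistent cloud state, where a test can fail on the first attempt and pass a minute later.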

Conclusion

We got many different tools to work together and were able to imperatively set the desired state of the infrastructure through them. The result is a flexible, uncompromising, and quick-to-implement solution based on Argo Events and Argo Workflows. We plan to adapt these tools for other automation tasks. The possibilities are endless.
