Almost safe: a word on pseudo-normal floating point numbers

Floating point arithmetic is a popularly esoteric topic in computer science. It is safe to say that every software engineer has at least heard of floating point numbers, and many have used them at some point. But few would claim to understand them well enough, and far fewer would say they know all the corner cases. That last group of engineers is probably mythical or, at best, very optimistic. I have dealt with floating point issues in the GNU C library in the past, but I would not call myself an expert on the topic. And I certainly did not expect to learn, a couple of months ago, about a type of number that was new to me.

This article describes types of floating point numbers that do not correspond to anything in the physical world. These numbers, which I call pseudo-normal numbers, can cause problems for programmers that are hard to track down, and they have even made it onto the infamous Common Vulnerabilities and Exposures (CVE) list.

Brief background: the IEEE-754 double

Almost every programming language implements 64-bit doubles (double precision floating point numbers) using the IEEE-754 format. The format defines a 64-bit storage layout with one sign bit, 11 exponent bits and 52 mantissa (significand) bits. Each bit pattern belongs to one and only one of these types of floating point numbers:

Normal number: the exponent has at least one bit set (but not all bits). The mantissa and sign bits can have any value.

Denormal (subnormal) number: the exponent has all bits cleared. The mantissa has at least one bit set (otherwise the number is a zero), and the sign bit can have any value.

Infinity: The exponent has all its bits set. In the mantissa, all bits are cleared, and the sign bit can be any value.

Not a Number (NaN): The exponent has all bits set. The mantissa has at least one bit set, and the sign bit can be any value.

Zero: exponent and mantissa have all bits cleared. The sign bit can be any value, which is where the beloved concept of signed zeros comes from.

The mantissa bits describe only the fractional part. The leading bit of the mantissa (the implicit "integer" bit) is not stored: by convention it is zero for denormalized numbers and zeros, and one for all other numbers. Programming languages map bit patterns to these categories exactly. There is no double precision floating point number whose classification, at the very least, is undefined. Because the format is so widely adopted, you can rely heavily on consistent behavior across different hardware platforms and runtimes.
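The mapping from bit pattern to class can be sketched in a few lines of C. This is an illustrative sketch only: it assumes the platform's `double` is the IEEE-754 64-bit format, and `enum dbl_class` and `classify_double_bits` are hypothetical names, not part of any library.

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical class names used only in this sketch. */
enum dbl_class { DBL_ZERO, DBL_DENORMAL, DBL_NORMAL, DBL_INFINITY, DBL_NAN };

/* Classify a double from its bits, assuming the IEEE-754 64-bit layout:
   1 sign bit, 11 exponent bits, 52 mantissa bits. */
static enum dbl_class classify_double_bits(double d)
{
    uint64_t bits;
    memcpy(&bits, &d, sizeof bits);   /* well-defined way to read the bits */

    uint64_t exponent = (bits >> 52) & 0x7ff;
    uint64_t mantissa = bits & ((UINT64_C(1) << 52) - 1);

    /* The sign bit (bit 63) plays no part in the classification. */
    if (exponent == 0)
        return mantissa == 0 ? DBL_ZERO : DBL_DENORMAL;
    if (exponent == 0x7ff)
        return mantissa == 0 ? DBL_INFINITY : DBL_NAN;
    return DBL_NORMAL;
}
```

Every one of the 2^64 possible inputs falls into exactly one branch, which is the property the rest of this article finds missing in the 80-bit format.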

If only we could say the same about double's bigger sibling, the long double type. An IEEE-754 extended precision format exists, but the standard does not define its encodings, and it is not the same on all architectures. However, we are not here to lament this state of affairs; we are here to explore a new kind of number. We can find them in Intel's double extended precision floating point format.

Double Extended Precision Floating Point Format from Intel

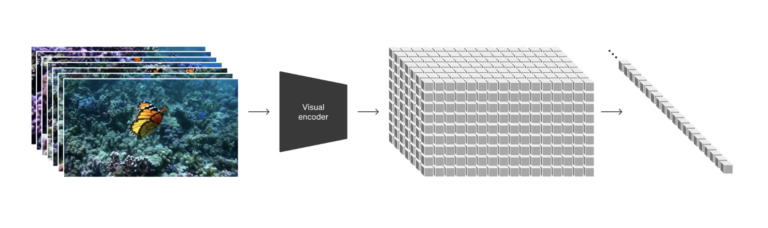

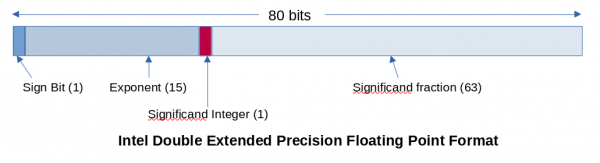

Section 4.2 of the Intel 64 and IA-32 Architectures Software Developer's Manual defines the double extended precision floating point format as an 80-bit value with the layout shown in Figure 1.

Given this definition, our robust number classifications that we rely on map to the long-double format as follows:

Normal number: The exponent has at least one bit set (but not all bits). The remaining mantissa bits and the sign bit can have any value. The first bit of the mantissa (the integer bit) is set to one.

Denormalized number: The exponent has all bits cleared. The remaining mantissa bits have at least one bit set, and the sign bit can have any value. The first bit of the mantissa is cleared to zero.

Infinity: The exponent has all its bits set. All of the remaining mantissa bits are cleared, and the sign bit can have any value. The first bit of the mantissa is set to one.

Not a Number (NaN): The exponent has all its bits set. At least one of the remaining mantissa bits is set, and the sign bit can have any value. The first bit of the mantissa is set to one.

Zero: The exponent and the mantissa have all bits cleared. The sign bit can have any value. The first bit of the mantissa is cleared to zero.
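The list above can be written down as a small classifier. The sketch below is an assumption-laden illustration rather than anything from glibc or Intel: it views the 80-bit value as a 16-bit sign-and-exponent field plus a 64-bit mantissa whose top bit is the explicit integer bit, and all names (`x87_class`, `classify_x87_listed`) are hypothetical. Anything the list does not cover is reported as `X87_UNLISTED`.

```c
#include <stdint.h>

/* Hypothetical names for this sketch only. */
enum x87_class {
    X87_ZERO, X87_DENORMAL, X87_NORMAL, X87_INFINITY, X87_NAN,
    X87_UNLISTED   /* bit patterns not covered by the five classes */
};

/* sign_exponent: sign bit plus 15 exponent bits.
   mantissa: 64 bits, bit 63 being the explicit integer bit. */
static enum x87_class classify_x87_listed(uint16_t sign_exponent,
                                          uint64_t mantissa)
{
    uint16_t exponent = sign_exponent & 0x7fff;        /* drop the sign */
    int integer_bit = (int)(mantissa >> 63);
    uint64_t fraction = mantissa & ~(UINT64_C(1) << 63);

    if (exponent == 0) {
        if (integer_bit)
            return X87_UNLISTED;
        return fraction == 0 ? X87_ZERO : X87_DENORMAL;
    }
    if (!integer_bit)
        return X87_UNLISTED;
    if (exponent == 0x7fff)
        return fraction == 0 ? X87_INFINITY : X87_NAN;
    return X87_NORMAL;
}
```

Unlike the 64-bit case, this function needs a sixth return value: because the integer bit is stored explicitly, some bit patterns match none of the five descriptions.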

Identity crisis

An attentive reader might ask two very reasonable questions:

What if a normal number, infinity, or NaN has the first bit of the mantissa set to zero?

What if a denormalized number has the first bit of the mantissa set to one?

With these questions, you will discover a new set of numbers. Congratulations!

Section 8.2.2, "Unsupported double extended precision floating point encodings and pseudo-denormalized numbers", of the Intel 64 and IA-32 Architectures Software Developer's Manual describes these numbers, so they are known. In short, the Intel floating point unit (FPU) raises an invalid operation exception if it encounters a pseudo-NaN (a NaN with the first bit of the mantissa cleared), a pseudo-infinity (an infinity with the first bit of the mantissa cleared) or an unnormal (a normal number with the first bit of the mantissa cleared). The FPU continues to support pseudo-denormalized values (denormalized numbers with the first bit of the mantissa set) in the same way as ordinary denormalized numbers, by raising a denormal operand exception. This has been the case since the 387 FPU.

Pseudo-denormalized numbers are less interesting because they are treated in the same way as denormalized ones. The rest are unsupported; the manual states that the FPU will never generate these numbers and does not bother to give them a collective name. In this article, however, we need to refer to them somehow, so I will call them pseudo-normal numbers.
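To make the four names concrete, here is a hedged sketch that labels an 80-bit encoding with the pseudo kind it belongs to, if any. It uses the same assumed two-field view of the value (16-bit sign and exponent, 64-bit mantissa with the integer bit on top); the identifiers are hypothetical, and "pseudo-normal" is this article's term, not Intel's.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical names for this sketch only. */
enum x87_odd {
    X87_REGULAR,            /* one of the five ordinary classes */
    X87_PSEUDO_DENORMAL,    /* still supported by the FPU */
    X87_UNNORMAL,
    X87_PSEUDO_INFINITY,
    X87_PSEUDO_NAN
};

static enum x87_odd x87_odd_kind(uint16_t sign_exponent, uint64_t mantissa)
{
    uint16_t exponent = sign_exponent & 0x7fff;
    bool integer_bit = (mantissa >> 63) != 0;
    uint64_t fraction = mantissa & ~(UINT64_C(1) << 63);

    if (exponent == 0)   /* integer bit should be zero here */
        return integer_bit ? X87_PSEUDO_DENORMAL : X87_REGULAR;
    if (integer_bit)     /* integer bit should be one here, and it is */
        return X87_REGULAR;
    if (exponent != 0x7fff)
        return X87_UNNORMAL;
    return fraction == 0 ? X87_PSEUDO_INFINITY : X87_PSEUDO_NAN;
}

/* The three unsupported kinds that this article calls pseudo-normal. */
static bool x87_is_pseudo_normal(uint16_t sign_exponent, uint64_t mantissa)
{
    enum x87_odd kind = x87_odd_kind(sign_exponent, mantissa);
    return kind == X87_UNNORMAL || kind == X87_PSEUDO_INFINITY
        || kind == X87_PSEUDO_NAN;
}
```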

How do you classify pseudo-normal numbers?

By now it is clear that these numbers open a gap in our view of floating point numbers in the programming environment. Is a pseudo-NaN also a NaN? Is a pseudo-infinity also an infinity? What about unnormal numbers? Should they be put into a class of their own? Should each of these types get its own class? Why didn't I give up on this before the third question?

Changing the programming environment to introduce a new class of numbers is not a worthwhile change for any architecture, so that is out of the question. Mapping these numbers onto existing classes might be desirable, depending on which class each one resembles. Alternatively, we could treat them all as NaNs (specifically, signaling NaNs) because, as with signaling NaNs, operating on them raises an invalid operation exception.

Undefined behavior?

This raises the pressing question of whether we should care at all. The C standard, in section 6.2.6 "Representations of types", states that "Certain object representations need not represent a value of the object type", which fits our situation. The FPU will never generate these representations for long double, so one can argue that passing these representations in a long double is undefined. This is one way to answer the classification question, but it essentially puts the burden of understanding the hardware specification on the user. It implies that every time a user reads a long double from a binary file or the network, they need to check that the representation is actually valid for the underlying architecture. This is what the fpclassify function and its relatives ought to do but, unfortunately, they do not.

If there are many answers, you will get many answers.

To determine whether an input is NaN, the GNU Compiler Collection (GCC) passes on the answer the CPU gives it. That is, it implements __builtin_isnanl by performing a floating point comparison on the input. When the comparison raises an exception (as it does for a NaN), the parity flag is set, which indicates an unordered result and therefore that the input is NaN. When the input is any of the pseudo-normal numbers, the comparison raises an invalid operation exception, so these numbers are all classified as NaN.
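The comparison-based approach has a well-known portable analogue: a NaN compares unordered with everything, including itself, so `x != x` is true only for a NaN. The sketch below is not GCC's implementation; `isnan_by_comparison` is a hypothetical name, and comparing the value with itself is an assumption about how the comparison is arranged.

```c
/* Sketch: let the hardware comparison decide. A NaN is unordered with
   respect to every value, itself included, so x != x holds only for NaN.
   Assumes the compiler honors IEEE comparisons (e.g. no -ffast-math). */
static int isnan_by_comparison(long double x)
{
    return x != x;
}
```

For ordinary inputs, `isnan_by_comparison(1.0L)` is false and `isnan_by_comparison(0.0L / 0.0L)` is true. On x87 hardware, the behavior described above suggests a pseudo-normal input would also be reported as NaN, since the classification is whatever the FPU's comparison yields.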

The GNU C library (glibc), on the other hand, looks at the bit pattern of a number to determine its classification. The library evaluates the bits of the number to decide whether it is a NaN in __isnanl or __fpclassify. In doing so, the implementation assumes that the FPU will never generate pseudo-normal numbers, and it ignores the first bit of the mantissa. As a result, when the input is any of the pseudo-normal numbers (except, of course, a pseudo-NaN), the implementation "fixes" the number into its non-pseudo counterpart and treats it as valid!
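The effect of ignoring the first mantissa bit can be shown with a bit-pattern check that masks it off. This is a simplified illustration, not glibc's code; the function names and the two-field view of the 80-bit value (16-bit sign and exponent, 64-bit mantissa) are assumptions made for the sketch.

```c
#include <stdbool.h>
#include <stdint.h>

/* Bit-pattern checks that deliberately ignore the integer bit (bit 63 of
   the mantissa), mimicking the assumption that it is always well-formed. */
static bool isinf_ignoring_integer_bit(uint16_t sign_exponent,
                                       uint64_t mantissa)
{
    uint64_t fraction = mantissa & ~(UINT64_C(1) << 63);
    return (sign_exponent & 0x7fff) == 0x7fff && fraction == 0;
}

static bool isnan_ignoring_integer_bit(uint16_t sign_exponent,
                                       uint64_t mantissa)
{
    uint64_t fraction = mantissa & ~(UINT64_C(1) << 63);
    return (sign_exponent & 0x7fff) == 0x7fff && fraction != 0;
}
```

With these checks, a pseudo-infinity (exponent all ones, mantissa all zeros) is indistinguishable from a real infinity and is not reported as NaN, whereas a comparison performed by the FPU would reject it.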

Almost (but not completely) safe

The glibc implementation of isnanl assumes that it always receives a properly formatted long double. This is not an unreasonable assumption, but it does make it the responsibility of every programmer to validate long double binary data read from files or the network before passing it to isnanl, which, ironically, is a validation function.

These assumptions led to CVE-2020-10029 and CVE-2020-29573. In both CVEs, functions (the trigonometric functions in the first, and the printf family of functions in the second) rely on valid inputs, which ultimately leads to potential stack overflows. We fixed CVE-2020-10029 by treating pseudo-normal numbers as NaN. The functions check the first bit of the mantissa and fail if it is zero.
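For code that reads long double values from a file or the network, a validation step in the same spirit might look like the sketch below. It is not the glibc fix itself. It assumes the 10 value bytes arrive in x86 memory order (8 little-endian mantissa bytes followed by 2 little-endian sign-and-exponent bytes), and it assumes the integer-bit check only applies when the exponent is non-zero, since denormals and zeros legitimately have that bit cleared. `x87_bytes_are_valid` is a hypothetical name.

```c
#include <stdbool.h>
#include <stdint.h>

/* Returns false for encodings the FPU does not support (unnormal,
   pseudo-infinity, pseudo-NaN). Pseudo-denormals are accepted because
   the FPU still handles them. */
static bool x87_bytes_are_valid(const unsigned char bytes[10])
{
    uint64_t mantissa = 0;
    for (int i = 7; i >= 0; i--)          /* bytes 0..7, little-endian */
        mantissa = (mantissa << 8) | bytes[i];

    /* bytes 8..9 hold the exponent with the sign bit on top */
    uint16_t exponent = (uint16_t)(((bytes[9] << 8) | bytes[8]) & 0x7fff);
    bool integer_bit = (mantissa >> 63) != 0;

    /* A non-zero exponent requires the integer bit to be set. */
    return exponent == 0 || integer_bit;
}
```

A caller would run this check on the raw bytes before copying them into a long double and handing the value to the math or printf functions.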

The history of the fix for CVE-2020-29573 is a little more interesting. A few years ago, a glibc cleanup replaced uses of isnanf, isnan and isnanl with the standard C99 macro isnan, which expands to the appropriate function based on its input. Later, another patch optimized the C99 isnan macro definition so that it uses __builtin_isnan when it is safe to do so. This inadvertently fixed CVE-2020-29573, because the check now classified pseudo-normal numbers as NaN, so they were rejected as invalid input.

Agreeing on a solution

The CVEs prompted us (the GNU toolchain community) to talk more seriously about how these numbers should be classified with respect to the C library interface. We discussed it in the glibc and GCC communities and agreed that these numbers should be treated as signaling NaNs in the context of C library interfaces. However, this does not mean that libm will strive to treat these numbers consistently as NaNs internally, or provide exhaustive coverage. The goal is not to define the behavior of these numbers; it is only to make their classification consistent across the entire toolchain. More importantly, we have agreed on guidelines for cases where misclassifying these numbers leads to crashes or security issues.

This, friends, is the story of unnormal numbers, pseudo-NaNs and pseudo-infinities. I hope you never run into them, but if you do, I hope we have made it easier for you to deal with them.
