AI-synthesized actor voices could make foreign-language dubbing actors unnecessary

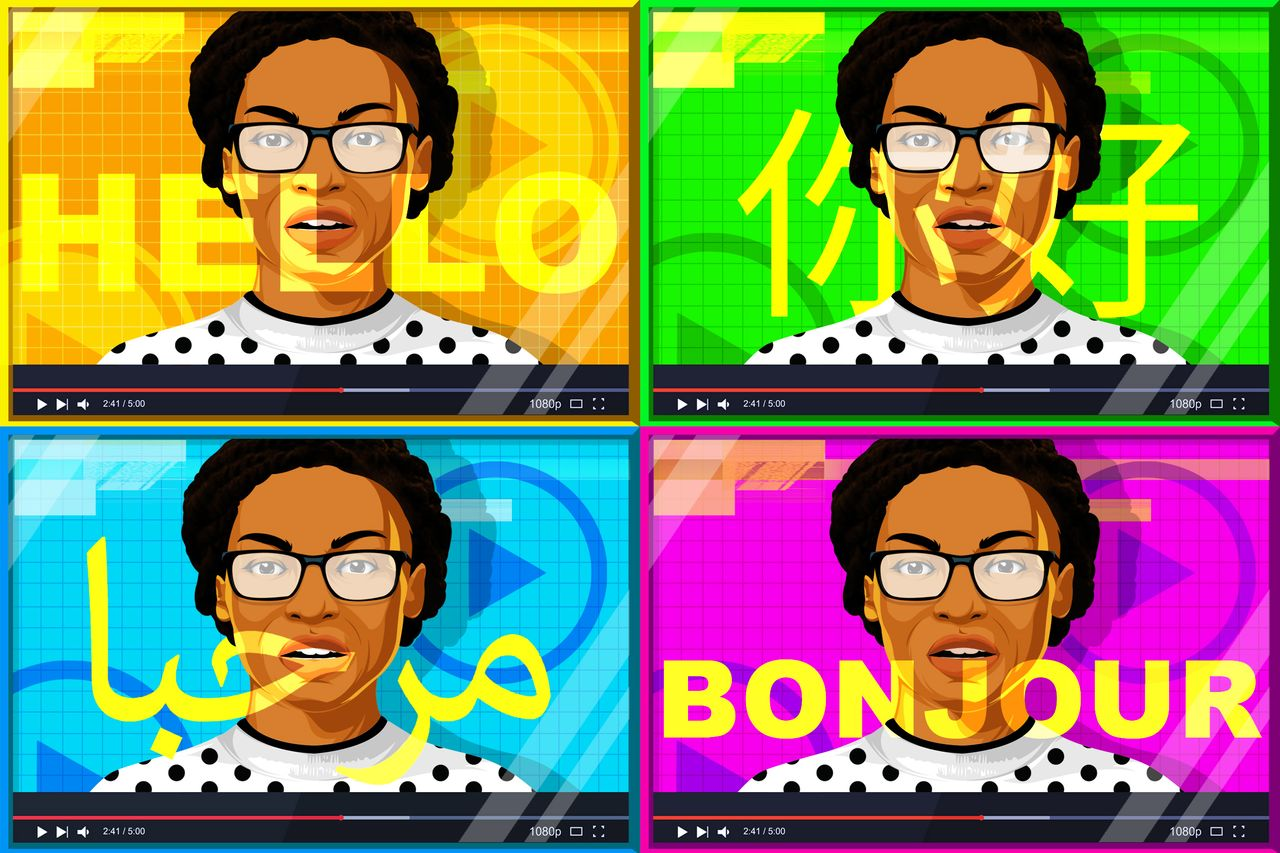

The next movie or TV show you watch may be the “work” of artificial intelligence. Imagine actors from Hollywood, Bollywood, or any other studio speaking your native language in every new movie or show. This is already a reality, except that the voices may not belong to a person. They are deepfakes: not fraudulent ones, but ones created by the film studios themselves using AI.

Video and voice deepfakes already copy the original tolerably well, and in the near future these technologies will become even more advanced. Companies will be able to obtain copies of actors’ voices speaking a wide variety of languages. These will not be expressionless “voices” like those of today’s digital assistants, but very realistic voices that carry emotion. Digital actors will be able to cry, laugh, and mumble, staying as close to the original performance as possible.

None of this is fantasy; it is the reality of today. The more movies and TV shows are released, the more profitable dubbing becomes. AI is gradually moving into this industry, and it does more than synthesize actors’ voices. The technology makes it possible to “rejuvenate” a voice, so that a film starring an elderly actor can be given that person’s “young” voice. It can also recreate the voices of actors who have died or who have lost their voice. And the actors themselves can stay at home and drink tea instead of going to the recording studio, without even needing a home studio.

At the same time, the producer of a film is under no obligation to tell the viewer that a voice is synthesized. Call center robots are one thing; voice acting for movies or TV shows is quite another.

None of this is fiction. The 2019 film “Every Time I Die” was recently dubbed into several languages using AI. It was one of the first attempts to replace a dubbing actor’s voice with a synthesized one. It worked: even the developer of the technology could not always distinguish the synthesized voice from a real one.

Most likely, this trend will only intensify over time, and dubbing actors will be less and less in demand. Training a neural network to serve as the basis for an actor’s new voice is easy. It requires only 5 minutes of recording in which the translator speaks the text over the original English.

The technology is already in use. For example, it was used in The Mandalorian when Luke Skywalker appeared. A deepfake replaced the voice of the real Mark Hamill, who is already 70 years old. The studio did not particularly publicize this, but it is a very real project. Moreover, the overwhelming majority of viewers did not realize that the voice was fake.

Hamill himself gave permission to use his voice. The company Respeecher took advantage of this permission by training a neural network on fragments of recordings of the actor’s voice from 40 years ago. The sources were footage from the film, an old radio show, and taped recordings of Hamill’s voice.

According to the creator of the technology, a person can usually tell when a voice has been synthesized by AI. A number of signs give it away, including a lack of emotion and a kind of “metallic” quality. These barriers no longer exist, and it has become very difficult for a person to distinguish a real voice from a deepfake.

Several companies are working on similar projects in parallel. For example, the London-based company Sonantic recently recreated Val Kilmer’s voice. The famous actor lost his former timbre and tone because of problems with his larynx. The simulation turned out to be so realistic that his own son cried when he heard his father’s voice. “The idea of being able to customize voice content, change emotions, pitch, direction, tempo, style, accents – it’s now possible there,” says Sonantic CEO Zeena Qureshi.

Deepfakes of this kind can be used not only for dubbing films but also for other content, including video games. They can also be used to re-voice old films whose audio track is damaged or sounds unnatural. AI copes with these tasks without much difficulty.

Of course, risks cannot be avoided here, since the deepfake industry is developing very actively. Even leaving aside the falsification of the voices of politicians and company heads, which we will cover in a future article, there is still the problem of illegal use by enterprising businessmen. Recently, Morgan Freeman’s voice was used in advertisements for a company that had not even tried to contact the actor.

Be that as it may, artificial voices are gradually finding their niche, and more and more companies are asking actors for permission to use their voices. Among them are both film studios and ordinary advertising agencies. In the near future, AI dubbing studios are likely to be in high demand. Even if they are not there yet, dozens of companies are already actively using their services.