A2 VMs: Largest GPU Cloud Instances with NVIDIA A100 GPUs, Now Available to Everyone

We recently announced on the Google Cloud blog that A2 VMs powered by NVIDIA Ampere A100 Tensor Core GPUs are now available in Compute Engine. With them, users can run machine learning and high performance computing workloads on the NVIDIA CUDA architecture, scaling them up in less time and at lower cost.

In this article, we describe what A2 virtual machines are, how they perform, and what features they offer. Our colleagues and partners also explain how they use these machines.

Highest performance

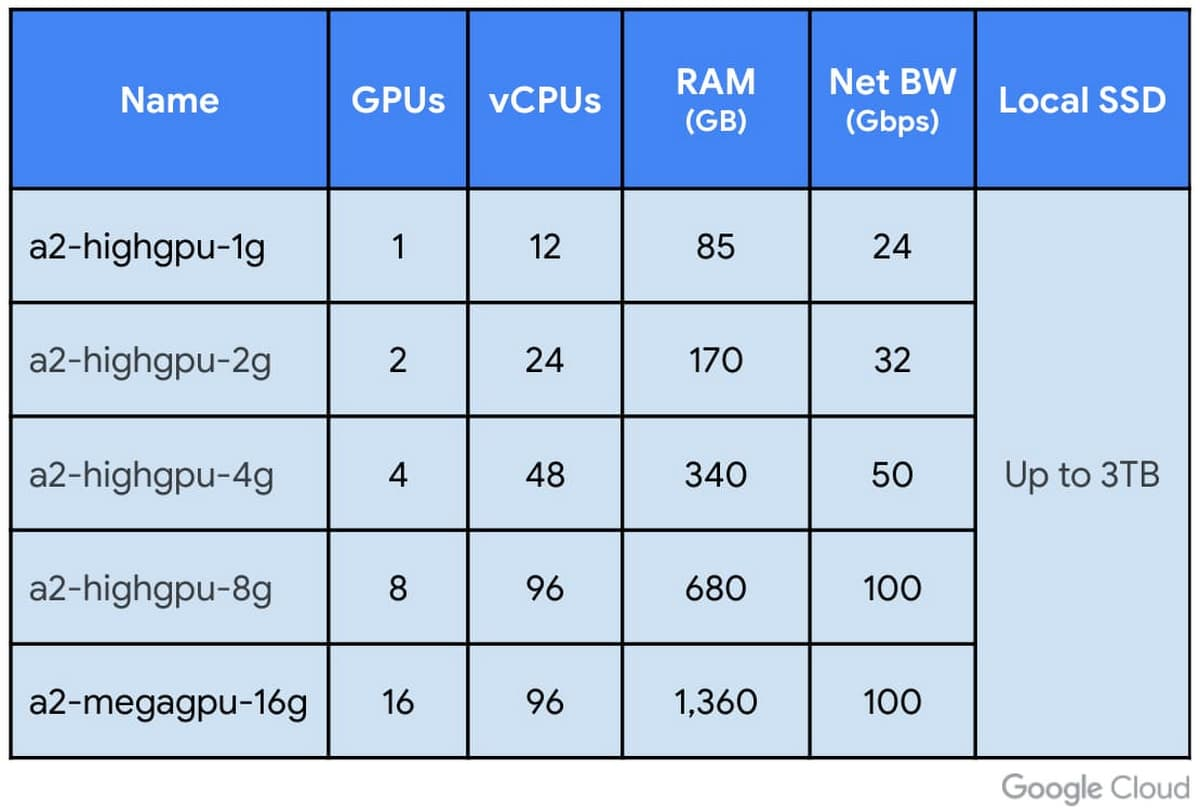

A single A2 VM supports up to 16 NVIDIA A100 GPUs. To date, this is the highest-performing single-node GPU instance among competing offerings from the largest cloud providers. Depending on the size of your workload, you can also choose A2 VMs with fewer GPUs (1, 2, 4, or 8).

This allows researchers, data scientists, and developers to dramatically increase the performance of scalable workloads (such as machine learning training, inference, and high performance computing) on the CUDA architecture. The A2 VM family on Google Cloud Platform can meet the needs of the most demanding HPC applications, such as CFD simulations in Altair ultraFluidX.

For those who need the highest-performance systems, Google Cloud offers clusters of thousands of GPUs for distributed machine learning training, along with optimized NCCL libraries for horizontal scaling. The VM configuration with 16 A100 GPUs linked by the NVIDIA NVLink bus is unique to Google Cloud. If you need to scale demanding workloads vertically, you can start with a single A100 GPU and go up to 16 without configuring multiple VMs for single-node ML training.
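
The vertical scaling path described above can be sketched as a simple lookup: given the number of GPUs a workload needs, pick the smallest A2 configuration that fits. The GPU counts (1, 2, 4, 8, 16) come from this article; the machine type names and the helper `smallest_a2_shape` are assumptions added for illustration and should be checked against the current Compute Engine documentation.

```python
from typing import Tuple

# GPU counts are taken from the article. The machine type names are an
# assumption for illustration; verify them in the Compute Engine docs.
A2_SHAPES = {
    1: "a2-highgpu-1g",
    2: "a2-highgpu-2g",
    4: "a2-highgpu-4g",
    8: "a2-highgpu-8g",
    16: "a2-megagpu-16g",
}


def smallest_a2_shape(gpus_needed: int) -> Tuple[int, str]:
    """Return (gpu_count, machine_type) for the smallest shape that fits."""
    if gpus_needed < 1:
        raise ValueError("gpus_needed must be at least 1")
    for gpu_count in sorted(A2_SHAPES):
        if gpu_count >= gpus_needed:
            return gpu_count, A2_SHAPES[gpu_count]
    # More than 16 GPUs does not fit on one VM and needs horizontal scaling.
    raise ValueError("a single A2 VM supports at most 16 GPUs")


if __name__ == "__main__":
    for needed in (1, 3, 8, 12):
        print(needed, "->", smallest_a2_shape(needed))
```

A workload that outgrows 16 GPUs raises an error here on purpose: at that point it no longer fits on one node and would need the multi-VM, NCCL-based route instead.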

To meet the needs of different applications, smaller A2 VM configurations are also available, along with 3 TB of local SSD storage that speeds up the delivery of data to the GPUs. As a result, the A100 GPU in Google Cloud delivers more than 10x faster BERT-Large pretraining than the previous-generation NVIDIA V100, and performance scales linearly when going from 8 to 16 GPUs. In addition, developers can use preconfigured software in containers from NVIDIA's NGC repository to quickly launch A100 instances on Compute Engine.

User reviews

We started offering A2 VMs with A100 GPUs to our partners in July 2020. Today, we work with many organizations to help them reach new heights in machine learning, visualization and high performance computing. Here’s what they say about the A2 virtual machines:

Dessa was recently acquired by Square. The company does AI research and was one of the first to use A2 VMs. Building on its experimentation and innovation, Square develops personalized services and smart tools for Cash App that use AI to help non-experts make better financial decisions.

“Thanks to Google Cloud, we gained the necessary control over our processes,” says Kyle de Freitas, senior software engineer at Dessa. “We understood that the A2 VMs offered on Compute Engine, powered by NVIDIA A100 Tensor Core GPUs, can drastically reduce computation time and significantly speed up our experiments. The NVIDIA A100 GPUs used in Google Cloud AI Platform enable us to innovate effectively and bring new ideas to life for our customers.”

Hyperconnect is a global video communications technology (WebRTC) and AI company. It seeks to connect people around the world by creating services based on various video and AI technologies.

“The A2 instances with the new NVIDIA A100 GPUs on Google Cloud take performance to a whole new level when training deep learning models. We easily migrated from the previous generation of V100 GPUs. With the A2-MegaGPU VM configuration, we not only sped up training more than twofold compared with the V100, but also gained the ability to vertically scale large neural network workloads on Google Cloud. These innovations will help us optimize models and improve the usability of Hyperconnect services,” said Beomsoo Kim, Machine Learning Researcher at Hyperconnect.

DeepMind (a subsidiary of Alphabet) is a team of scientists, engineers, machine learning specialists, and other experts who develop AI technologies.

“DeepMind is an AI developer. Our researchers are experimenting with hardware accelerators. Google Cloud gives us access to a new generation of NVIDIA GPUs, and the A2-MegaGPU-16G virtual machine can train models faster than ever. We are excited to continue working with the Google Cloud platform to help us build future machine learning and AI infrastructure,” says Koray Kavukcuoglu, Vice President of Research at DeepMind.

AI2 is a non-profit research institute dedicated to advanced AI research and development for the greater good.

“Our main mission is to extend what computers can do. In pursuing it, we face two fundamental problems. First, modern AI algorithms require enormous computing power. Second, specialized hardware and software in this area change rapidly. The A100 on GCP is four times as powerful as our current systems and does not require major code changes to use; by and large, minimal changes are sufficient. The A100 GPU in Google Cloud can significantly increase the amount of compute per dollar, so we can run more experiments and use more data,” says Dirk Groeneveld, senior developer at the Allen Institute for Artificial Intelligence.

OTOY is a cloud graphics computing company. It develops innovative technologies for creating and delivering content for the media and entertainment industry.

“For nearly a decade, we’ve been pushing the boundaries of what is possible in graphics rendering and cloud computing and striving to remove the limits on artistic creativity. With NVIDIA A100 GPUs in Google Cloud, with their massive VRAM and the highest OctaneBench rating ever, we were the first to reach the level where artists no longer have to worry about rendering complexity when realizing their vision. OctaneRender’s rendering engine has reduced the cost of special effects. It allows anyone with an NVIDIA GPU to create stunning cinematic visuals. NVIDIA A100 virtual machines in Google Cloud give OctaneRender and RNDR users access to modern NVIDIA GPUs previously available only to major Hollywood studios,” says Jules Urbach, Founder and CEO of OTOY.

GPU pricing and availability

NVIDIA A100 instances are now available in the following regions: us-central1, asia-southeast1, and europe-west4. More regions will be added during 2021. A2 VMs on Compute Engine are available on demand, as discounted preemptible instances, and with committed use discounts, and they are fully supported on Google Kubernetes Engine (GKE), Cloud AI Platform, and other Google Cloud services. The A100 is priced from just $0.87 per GPU per hour on preemptible A2 VMs. The full price list is available here…
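
As a rough illustration of the pricing above, the sketch below estimates the GPU portion of a preemptible run. It assumes the $0.87 figure is an hourly rate per GPU and that it is the only charge; real bills may include other resources and vary by region, so treat the result as an order-of-magnitude estimate. The function name `estimate_preemptible_gpu_cost` is hypothetical.

```python
# USD per GPU, from the article; treated here as an hourly rate (assumption).
PREEMPTIBLE_PRICE_PER_GPU_HOUR = 0.87


def estimate_preemptible_gpu_cost(
    gpu_count: int,
    hours: float,
    price_per_gpu_hour: float = PREEMPTIBLE_PRICE_PER_GPU_HOUR,
) -> float:
    """Estimate the GPU-only cost in USD, rounded to cents."""
    if gpu_count < 0 or hours < 0:
        raise ValueError("gpu_count and hours must be non-negative")
    return round(gpu_count * hours * price_per_gpu_hour, 2)


if __name__ == "__main__":
    # A full day on a 16-GPU VM versus a single GPU.
    print(estimate_preemptible_gpu_cost(16, 24))
    print(estimate_preemptible_gpu_cost(1, 24))
```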

Getting started

You can quickly get up and running, start model training, and run inference workloads on NVIDIA A100 GPUs with the deep learning VM images in the available regions. These images contain all the software you need: drivers, NVIDIA CUDA-X AI libraries, and popular AI frameworks such as TensorFlow and PyTorch. The optimized TensorFlow Enterprise images also include A100 support for current and past versions of TensorFlow (1.15, 2.1, and 2.3). You don’t need to worry about software updates, compatibility, or performance tuning: we take care of all of that. This page provides information on the GPUs available on Google Cloud.
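
Once a VM is running, a quick sanity check is to confirm that the framework actually sees the GPUs. The sketch below uses PyTorch, one of the frameworks named above; it assumes PyTorch is installed (as it would be on a PyTorch deep learning image) and falls back to reporting zero GPUs when it is not. The helper name `visible_gpu_count` is hypothetical.

```python
def visible_gpu_count() -> int:
    """Return the number of CUDA GPUs PyTorch can see, or 0 if none."""
    try:
        import torch  # assumed to be preinstalled on the VM image
    except ImportError:
        return 0
    if not torch.cuda.is_available():
        return 0
    return torch.cuda.device_count()


if __name__ == "__main__":
    count = visible_gpu_count()
    if count:
        print(f"PyTorch sees {count} GPU(s)")
    else:
        print("No CUDA GPUs visible; check drivers and the VM configuration")
```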

A reminder: when you first sign up for Google Cloud, you get $300 in credits, and more than 20 products are always free. More at the special link…

We also thank our colleagues Bharath Parthasarathy, Chris Kleban, and Zviad Kardava for their help in preparing this material.