A Beginner's Guide to Hallucinations in Large Language Models

Let's take a closer look at the main causes of hallucinations in LLMs, including issues related to training data, architecture, and inference strategies.

Problems with training data

A significant factor contributing to hallucinations in LLMs is the nature of the training data. LLMs such as GPT, Falcon, and LLaMA are pre-trained on large, diverse datasets drawn from many sources, largely without human supervision of the content.

Verifying that this data is fair, unbiased, and factually correct is a challenging task. Because these models learn to generate text by imitating their training data, they also pick up and reproduce the factual inaccuracies it contains.

As a result, models cannot reliably distinguish truth from fiction and may produce output that deviates far from the facts or from logical reasoning.

Datasets obtained from the Internet may contain biased or incorrect information. This misinformation can end up in the output of LLMs trained on them, because the model does not distinguish between accurate and inaccurate data.

For example, Bard's incorrect claim that the James Webb Space Telescope took the very first pictures of a planet outside our solar system shows how reliance on incomplete data can lead to confident but incorrect statements.

Architecture and learning objectives

Hallucinations can arise due to flaws in the model architecture or suboptimal learning objectives.

For example, architectural flaws or an incorrectly stated training objective may cause the model to produce results that do not match the intended use or expected performance.

This mismatch can result in the model generating content that is either meaningless or factually incorrect.

Problems at the inference stage

At the inference stage, hallucinations can be caused by several factors.

These include flawed decoding strategies and the randomness inherent in the sampling methods used by the model.

Additionally, issues such as insufficient attention to context or the softmax bottleneck can produce outputs that are poorly grounded in the context or the training data.
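To make the role of sampling randomness concrete, here is a minimal sketch that compares deterministic (greedy) decoding with random sampling for a single next token. The prompt, the candidate tokens, and their probabilities are made-up assumptions for illustration, not the output of any real model.

```python
import random

# Hypothetical next-token probabilities for the prompt "The capital of France is".
# These numbers are invented for illustration only.
next_token_probs = {"Paris": 0.90, "Lyon": 0.06, "Berlin": 0.03, "banana": 0.01}


def greedy_pick(probs):
    """Deterministic decoding: always return the most probable token."""
    return max(probs, key=probs.get)


def sample_pick(probs, rng):
    """Stochastic decoding: draw a token in proportion to its probability."""
    tokens = list(probs)
    weights = list(probs.values())
    return rng.choices(tokens, weights=weights, k=1)[0]


rng = random.Random(0)  # fixed seed so the run is repeatable
draws = [sample_pick(next_token_probs, rng) for _ in range(10_000)]
wrong_share = sum(token != "Paris" for token in draws) / len(draws)

print("greedy:", greedy_pick(next_token_probs))
print("share of sampled tokens that are not 'Paris':", round(wrong_share, 3))
```

Greedy decoding always returns the top token, while sampling picks one of the other tokens in a share of draws close to the 10% of probability assigned to them. In a real model one such unlikely token can steer everything generated after it.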

Prompt engineering

The occurrence of hallucinations can also be influenced by how the prompts are composed.

An LLM may generate an incorrect or unrealistic answer if the prompt lacks adequate context or is ambiguously worded.

Writing effective prompts requires clarity and specificity to guide the model to generate relevant and accurate responses.
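As a rough illustration of the difference between a vague and a specific prompt, the sketch below assembles a prompt from a question plus optional context and instructions. The helper `build_prompt` and its section labels are hypothetical and only one of many reasonable ways to structure a prompt.

```python
def build_prompt(question, context=None, constraints=None):
    """Assemble a prompt from a question plus optional context and constraints.

    Hypothetical helper for illustration; the section labels are arbitrary.
    """
    parts = []
    if context:
        parts.append(f"Context:\n{context}")
    parts.append(f"Question:\n{question}")
    if constraints:
        bullet_list = "\n".join(f"- {c}" for c in constraints)
        parts.append(f"Instructions:\n{bullet_list}")
    return "\n\n".join(parts)


# Vague: no context, and it is unclear which telescope or which facts are meant.
vague_prompt = build_prompt("Tell me about the telescope.")

# Specific: supplies the facts to rely on and says what to do when they are missing.
specific_prompt = build_prompt(
    question="Which telescope does the context describe, and when was it launched?",
    context="The James Webb Space Telescope was launched on 25 December 2021.",
    constraints=[
        "Answer using only the context above.",
        "If the context does not contain the answer, say that you do not know.",
    ],
)

print(specific_prompt)
```

The second prompt leaves the model fewer gaps to fill with guesses, which is the point of clarity and specificity. It does not guarantee a correct answer.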

Pro Tip: Check out effective methods for creating prompts.

Stochastic nature of decoding

When generating text, LLMs use strategies that can introduce randomness into the result.

For example, high “temperature” can increase creativity, but also the risk of hallucinations, as observed in models generating completely new plots or ideas.

Stochastic methods can sometimes produce unexpected or bizarre answers, reflecting the probabilistic way in which the model chooses each next token.
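The effect of temperature can be sketched with a temperature-scaled softmax: the model's raw scores (logits) are divided by the temperature before being turned into probabilities. The tokens and logits below are invented for illustration, and the function name is an assumption rather than any library's API.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores (logits) into probabilities after dividing by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    max_scaled = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - max_scaled) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical candidates and scores for the next token; not from a real model.
tokens = ["Paris", "Lyon", "Berlin", "banana"]
logits = [5.0, 2.0, 1.0, -1.0]

for temperature in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, temperature)
    summary = ", ".join(f"{tok}={p:.3f}" for tok, p in zip(tokens, probs))
    print(f"T={temperature}: {summary}")
```

At low temperature almost all probability sits on the top-scoring token. As the temperature rises the distribution flattens, so unlikely tokens such as "banana" are sampled more often, which is where both the extra creativity and the extra risk come from.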

Handling ambiguous input data

Models can generate hallucinations when faced with unclear or imprecise input data.

In the absence of explicit information, models can fill in the gaps with fabricated details, as in the case of ChatGPT generating a false accusation against a professor in response to an ambiguous prompt.

Over-optimization of the model for specific purposes

Sometimes LLMs are optimized to achieve certain results, such as longer outputs, which can result in wordy and irrelevant answers.

This over-optimization can lead models to produce more content, which may include hallucinations, instead of concise and accurate information.
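A toy sketch can show why optimizing for length backfires. Both scoring functions and both candidate answers below are hypothetical and deliberately simplistic; real training objectives are far more complex.

```python
def length_biased_score(answer):
    """Hypothetical objective that simply rewards more words."""
    return len(answer.split())


def length_penalized_score(answer, relevant_terms, penalty_per_word=0.1):
    """Hypothetical objective: reward relevant terms, charge a small cost per word."""
    words = [w.strip(".,").lower() for w in answer.split()]
    hits = sum(term in words for term in relevant_terms)
    return hits - penalty_per_word * len(words)


# Two made-up candidate answers to "What is the capital of France?"
candidates = [
    "Paris.",
    "Paris is a wonderful city, and many people also say that Lyon or Marseille might be the capital.",
]
relevant_terms = ["paris"]

best_by_length = max(candidates, key=length_biased_score)
best_by_penalty = max(
    candidates, key=lambda a: length_penalized_score(a, relevant_terms)
)

print("length-biased objective picks:", best_by_length)
print("length-penalized objective picks:", best_by_penalty)
```

The length-biased score prefers the padded answer, which also happens to contain a misleading statement, while the penalized score prefers the short, accurate one.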

Addressing these factors involves improving data quality, refining model architectures, improving decoding strategies, and designing more effective prompts to reduce the frequency and impact of hallucinations.

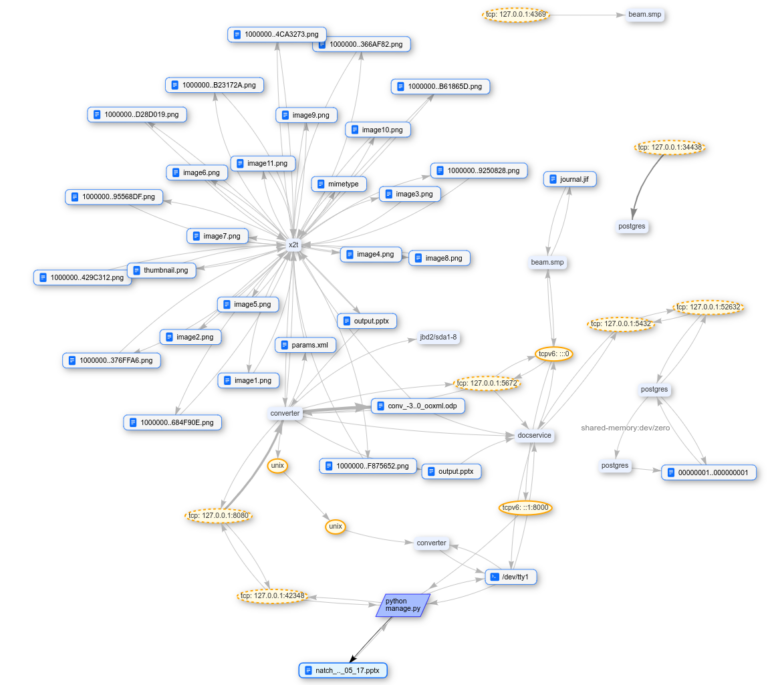

| Stage | Substage | Type | Cause | Example from real model output |
| --- | --- | --- | --- | --- |
| Data | Poor-quality data source | Misinformation and bias | Training on incorrect data can lead to spurious results. | A model names Thomas Edison as the sole inventor of the light bulb because of repeated misinformation in the training data. |
| Data | Poor-quality data source | Knowledge boundary | The lack of up-to-date facts leads to limitations in specialized areas. | An LLM gives outdated information about the last Olympic host country because its knowledge from the training data is static and not updated. |
| Model training | Pre-training | Architecture flaw | A unidirectional (one-way) view can limit understanding of context. | An LLM generates one-sided statements without taking the full context into account, resulting in partial or biased content. |
| Model training | Pre-training | Exposure bias | A mismatch between training and inference can lead to cascading errors. | During inference, an LLM keeps generating errors that build on a single invalid token it produced. |
| Model training | Alignment | Capability misalignment | Asking LLMs for capabilities beyond their training can lead to mistakes. | An LLM produces content in a specialized area without the necessary data, resulting in fictitious statements. |
| Model training | Alignment | Belief misalignment | The output diverges from the LLM's internal representation of the data, leading to inaccuracies. | An LLM panders to user opinions by generating content that it “knows” is incorrect. |
| Inference | Decoding | Inherent sampling randomness | Randomness in the selection of successive tokens may lead to less frequent but incorrect results. | An LLM selects unlikely tokens during generation, resulting in unexpected or irrelevant content. |
| Inference | Decoding | Imperfect decoding representation | Over-reliance on partially generated content and the softmax bottleneck problem. | An LLM places too much emphasis on recent tokens or fails to grasp complex relationships between words, leading to accuracy errors. |

Table 3: Summary of hallucination causes in LLMs at the data, training, and inference stages

Table 3 summarizes the causes of hallucinations in large language models, drawing on the outstanding research work of Lei Huang and his team. I highly recommend reading their paper, as it goes into more detail about hallucination causes, with examples of model output.